Supplementary material for ICCV submission 667:

Understanding deep features with computer generated imagery

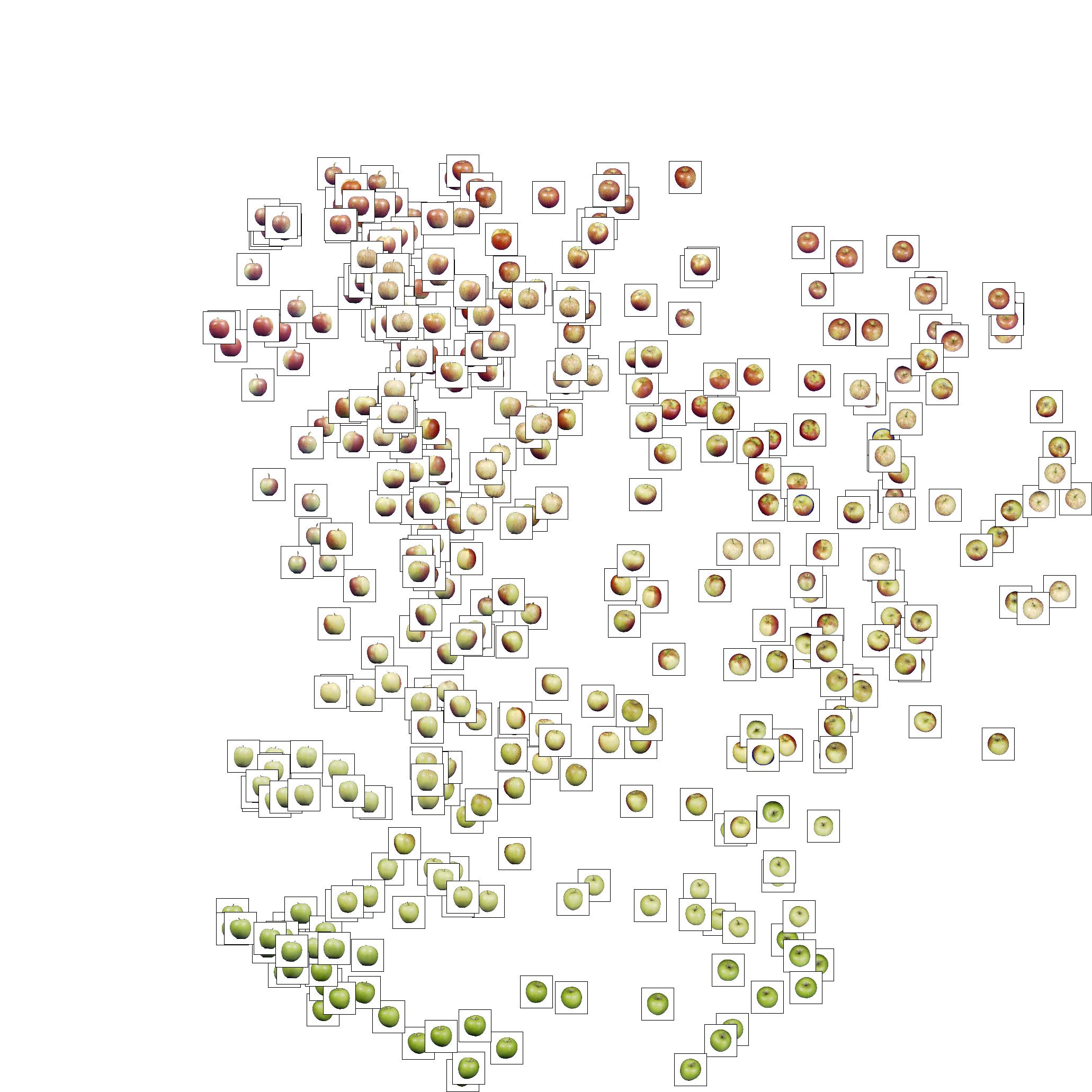

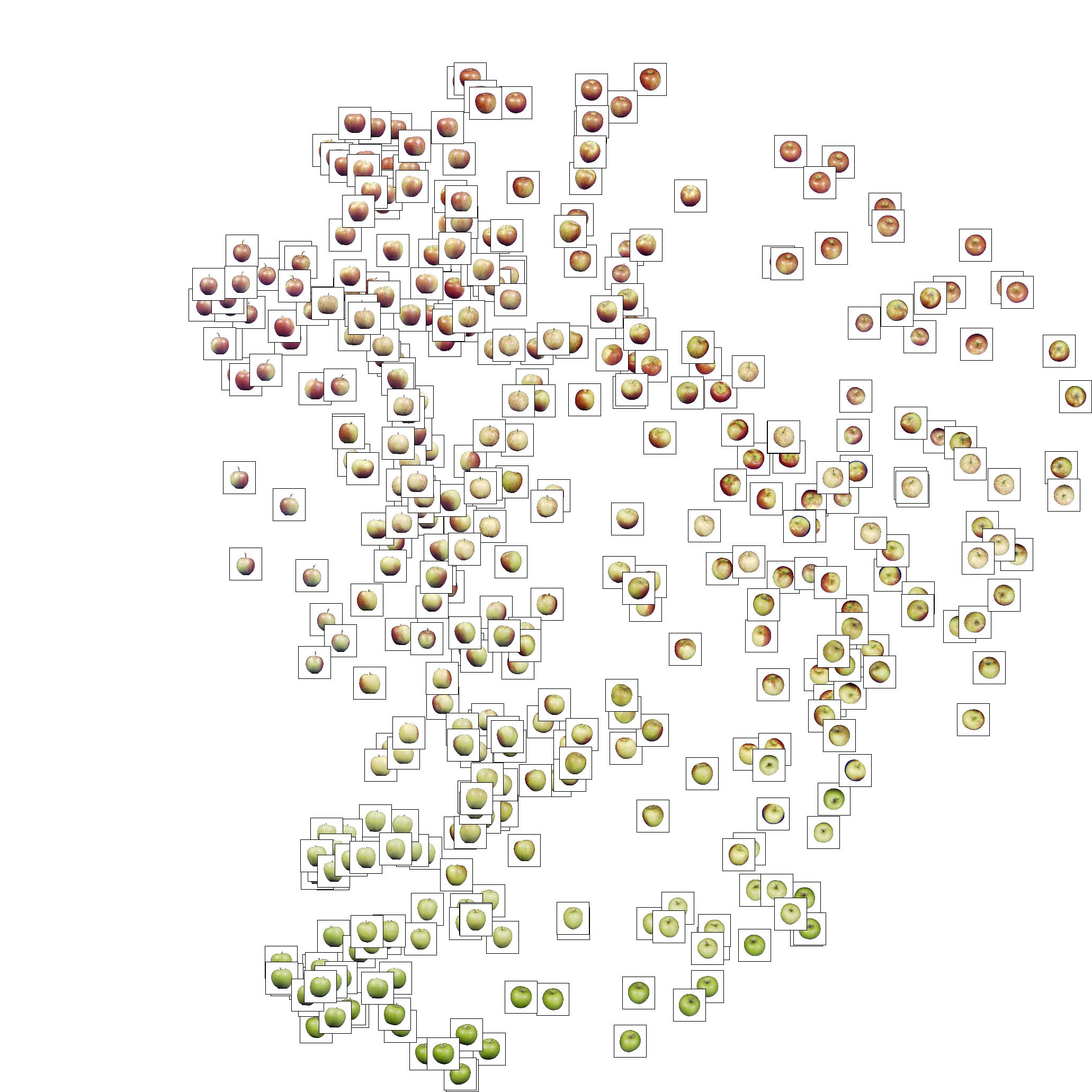

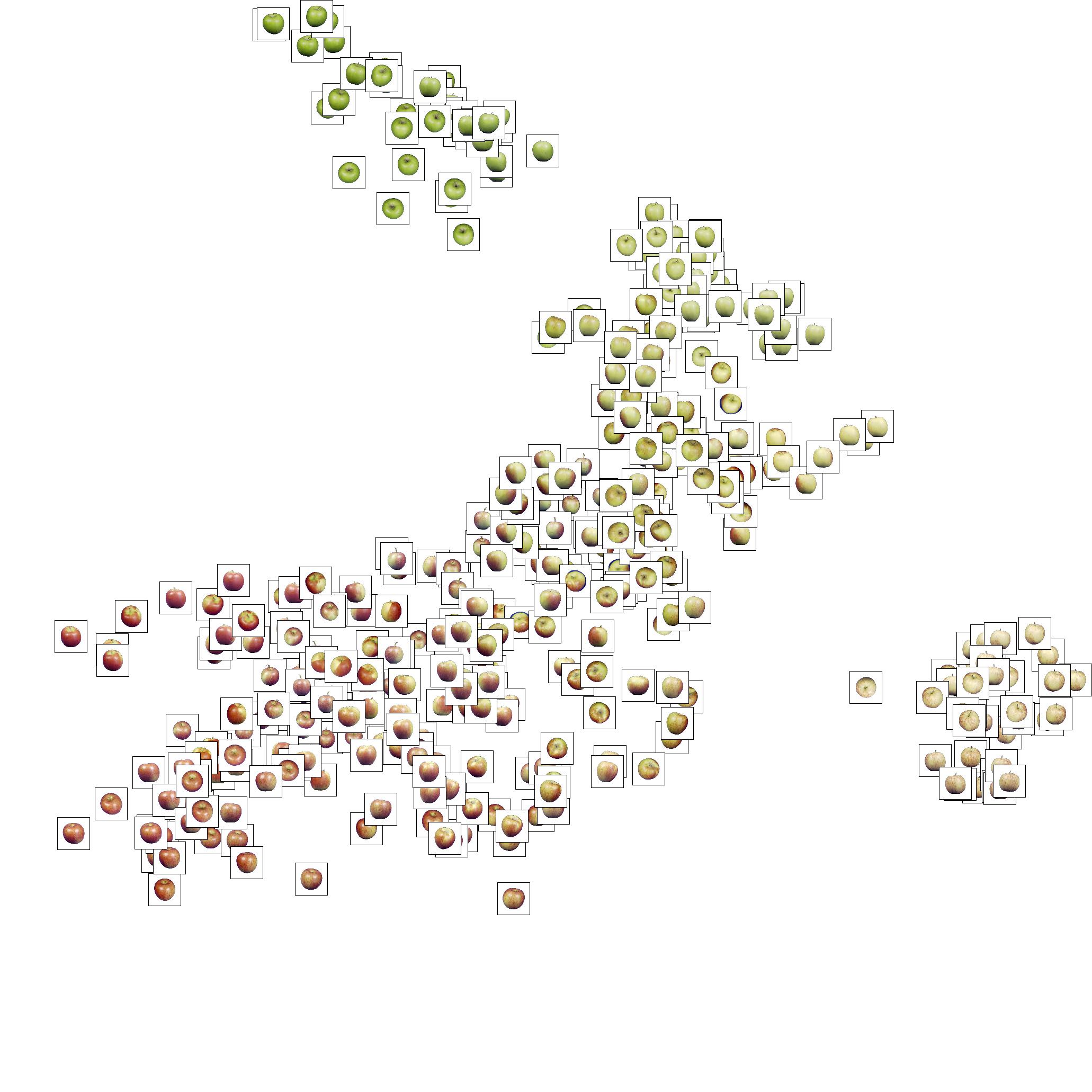

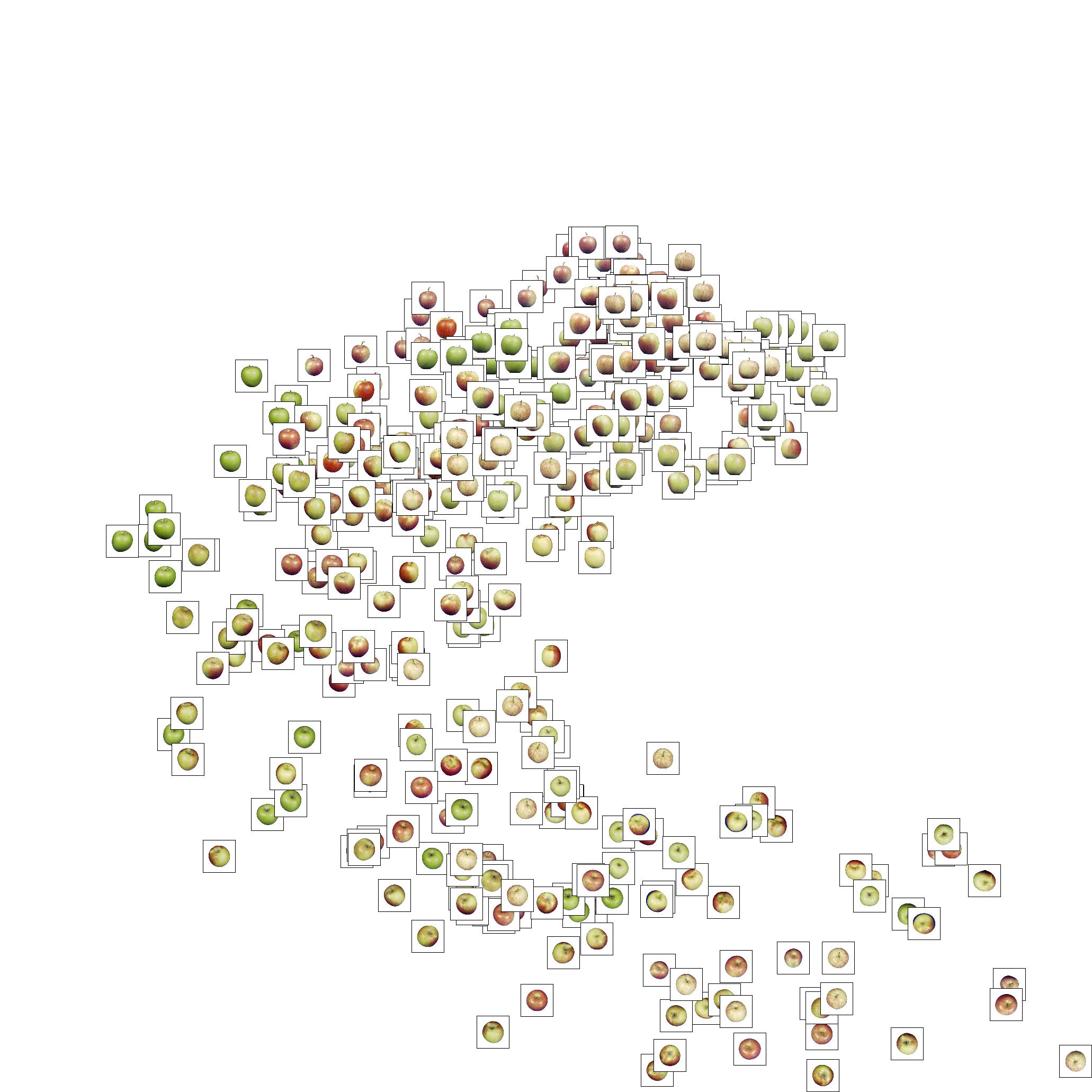

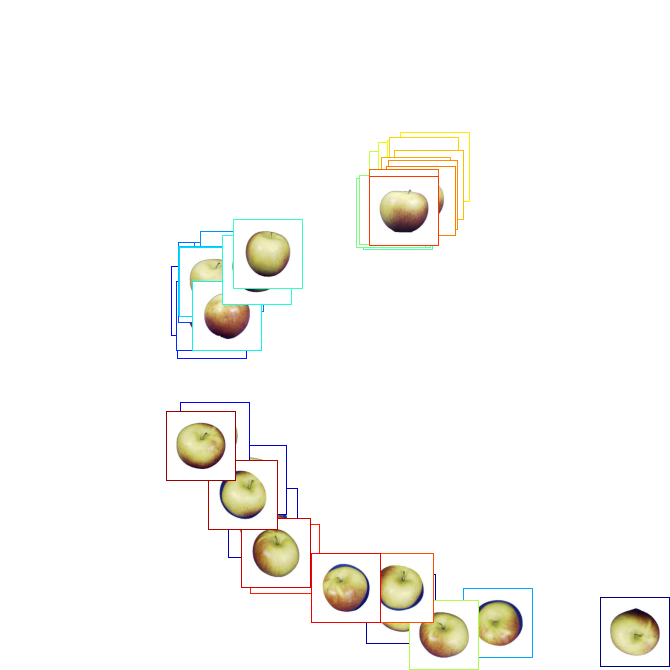

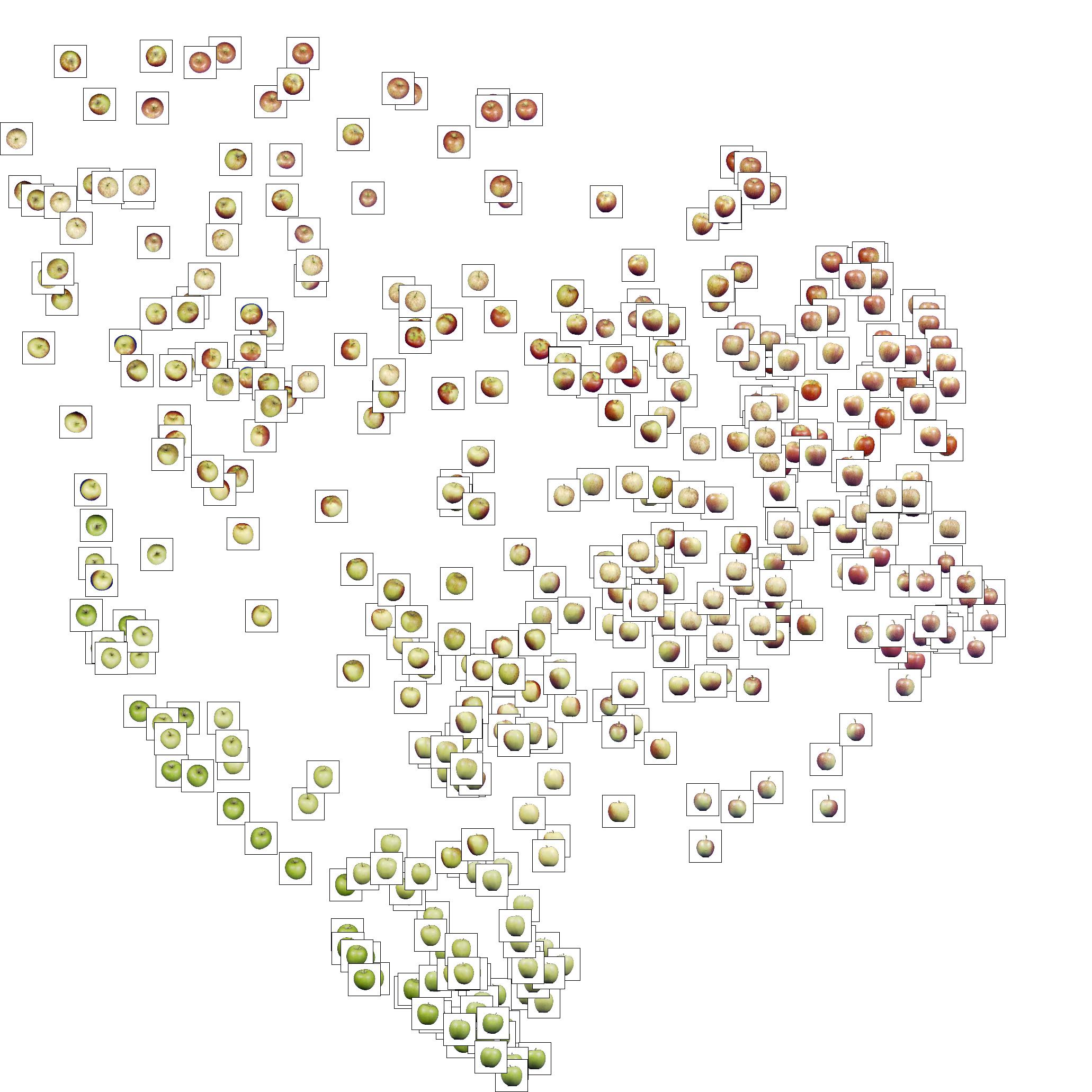

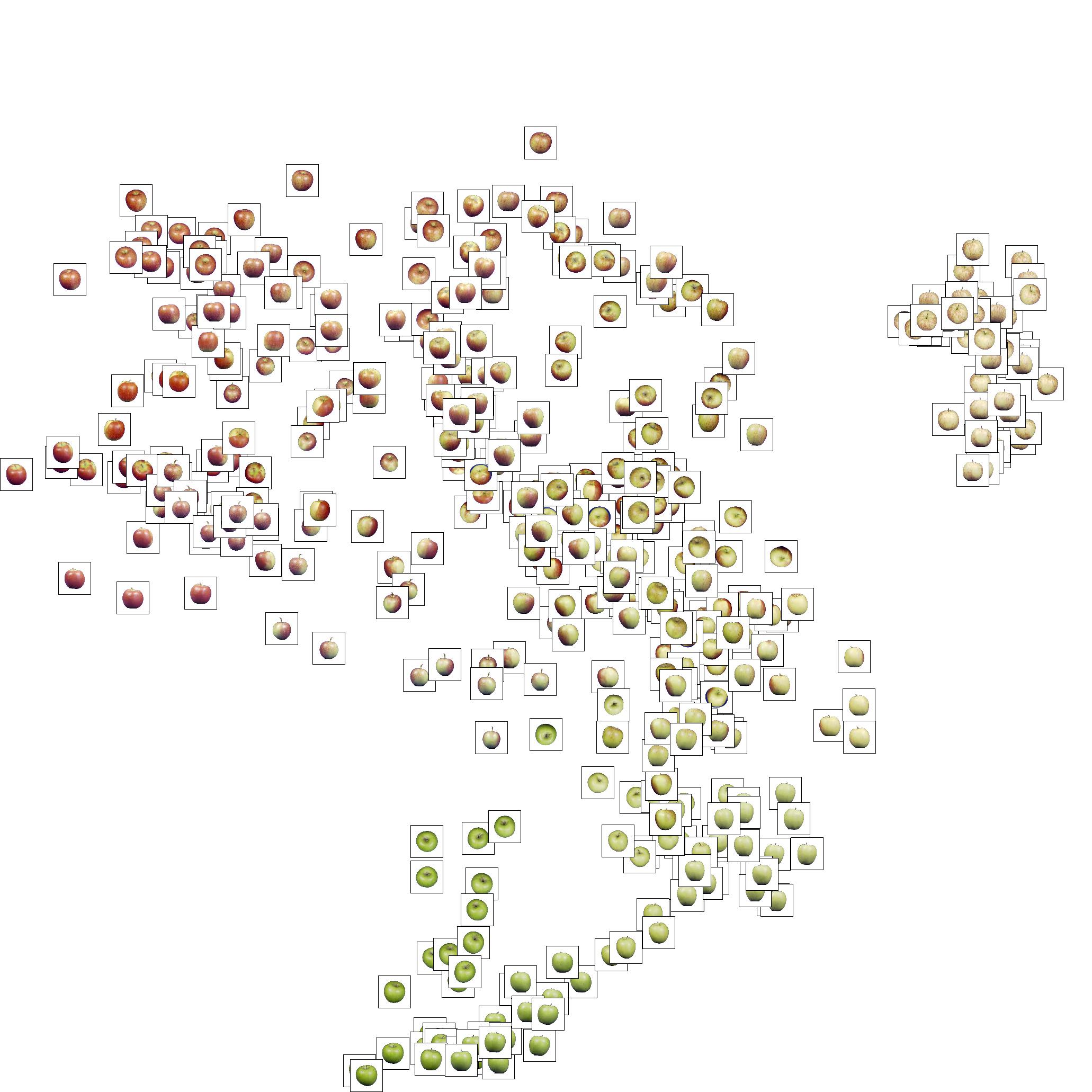

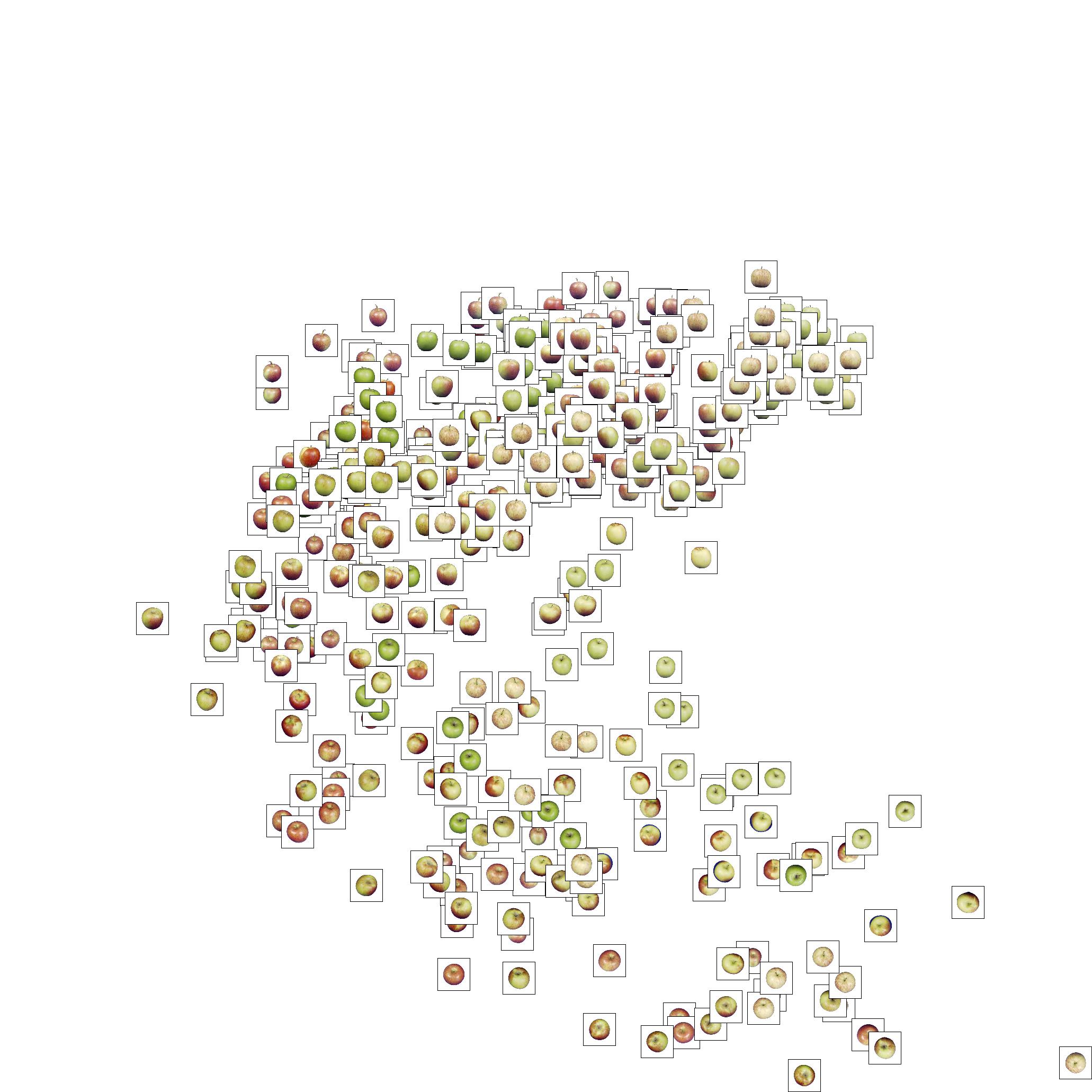

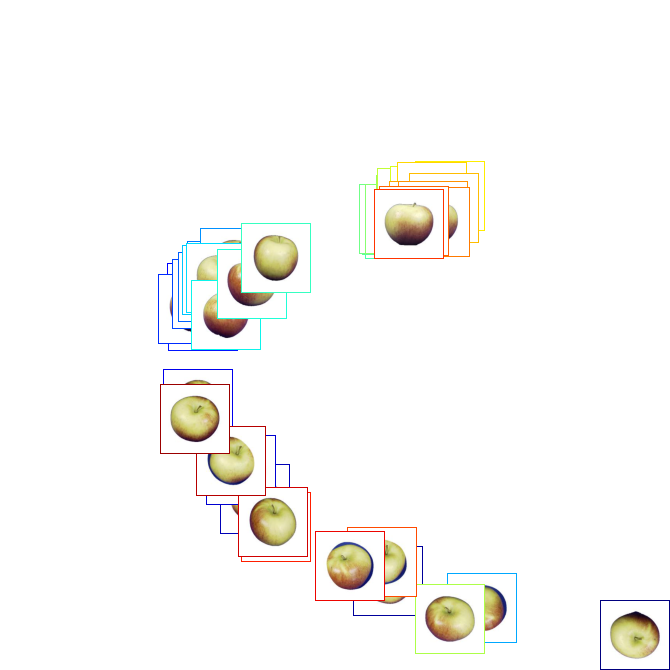

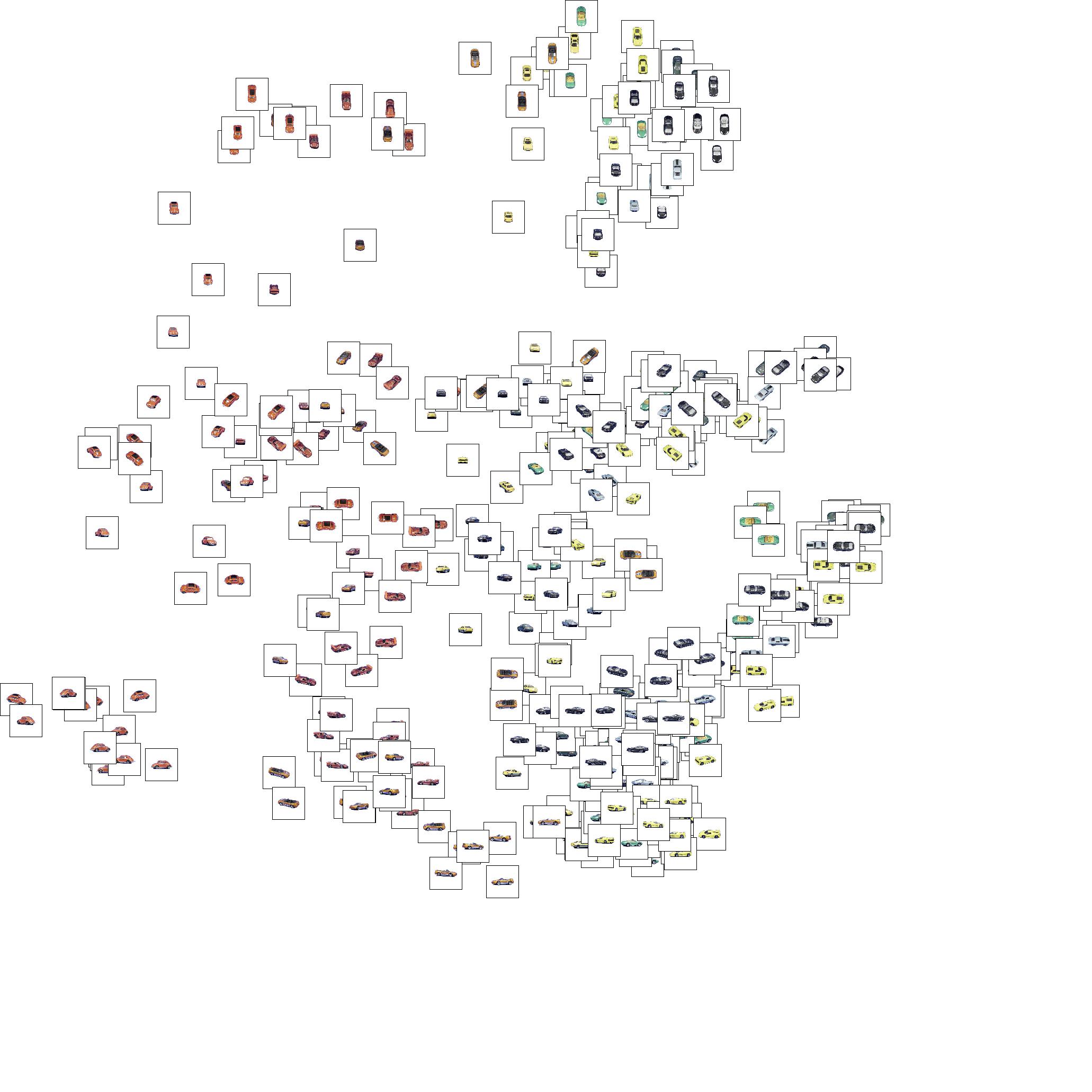

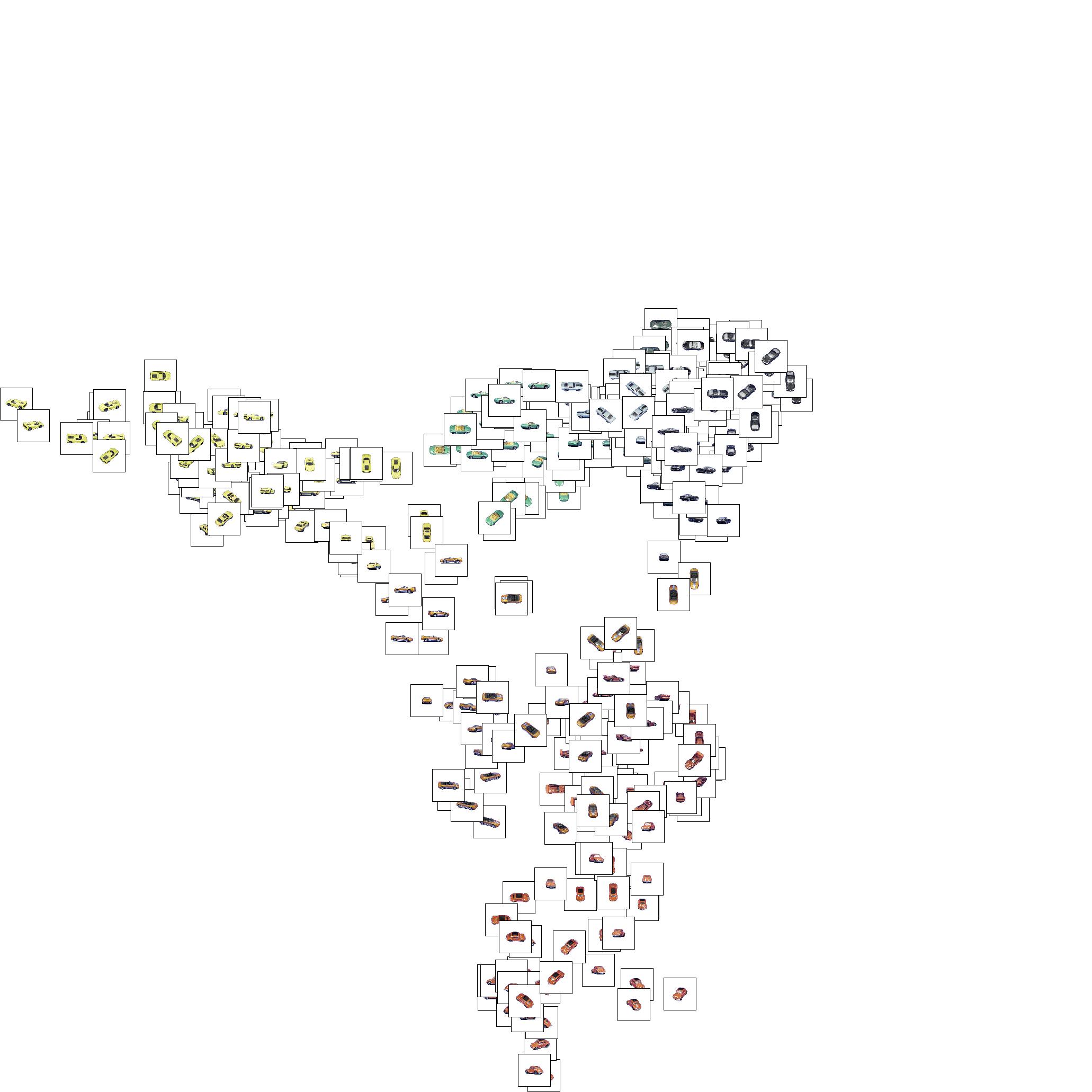

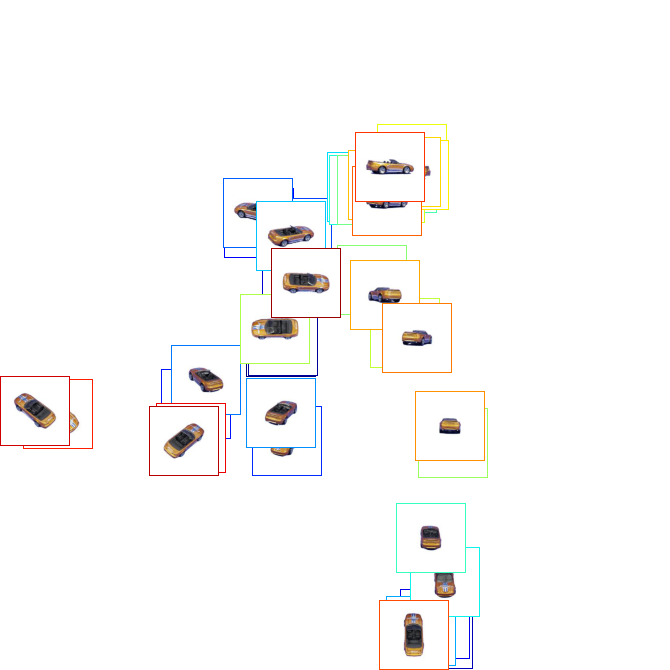

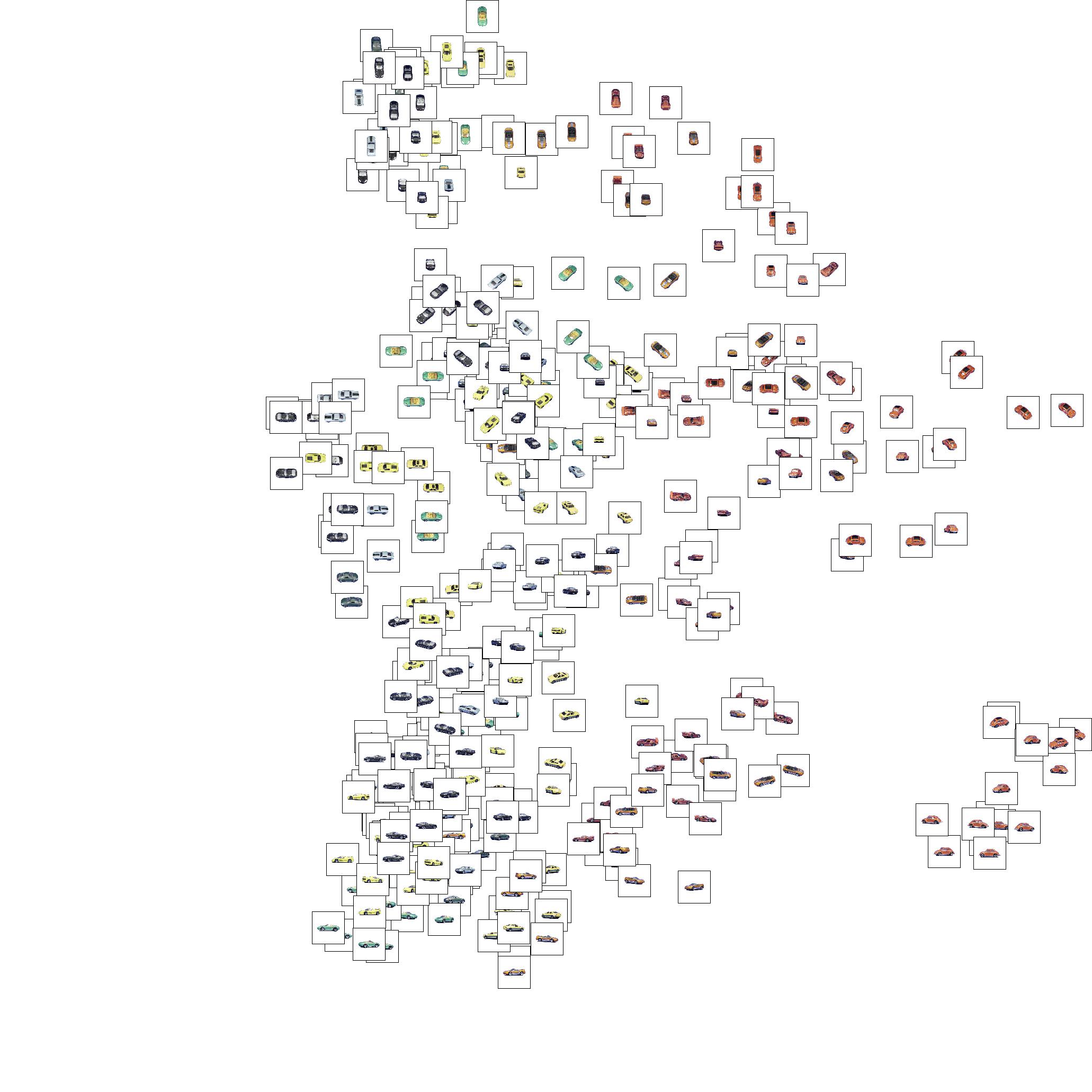

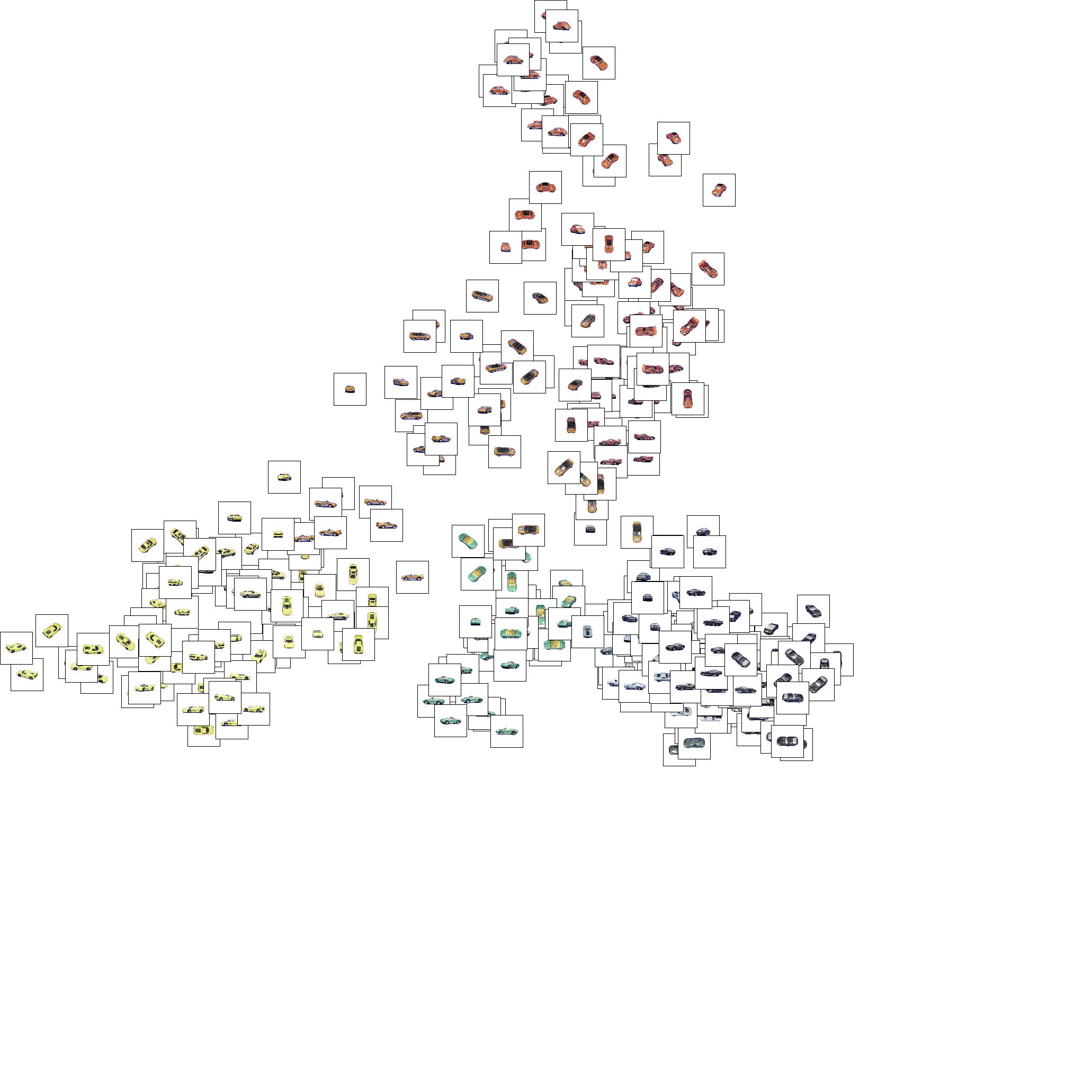

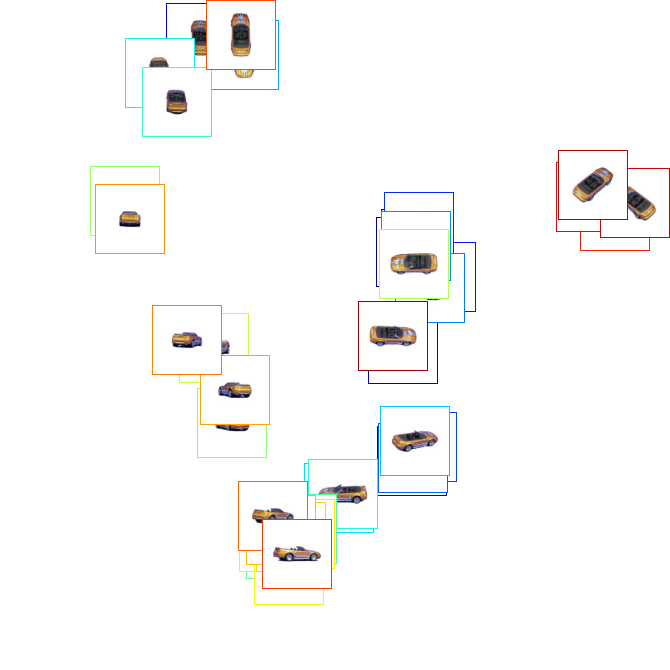

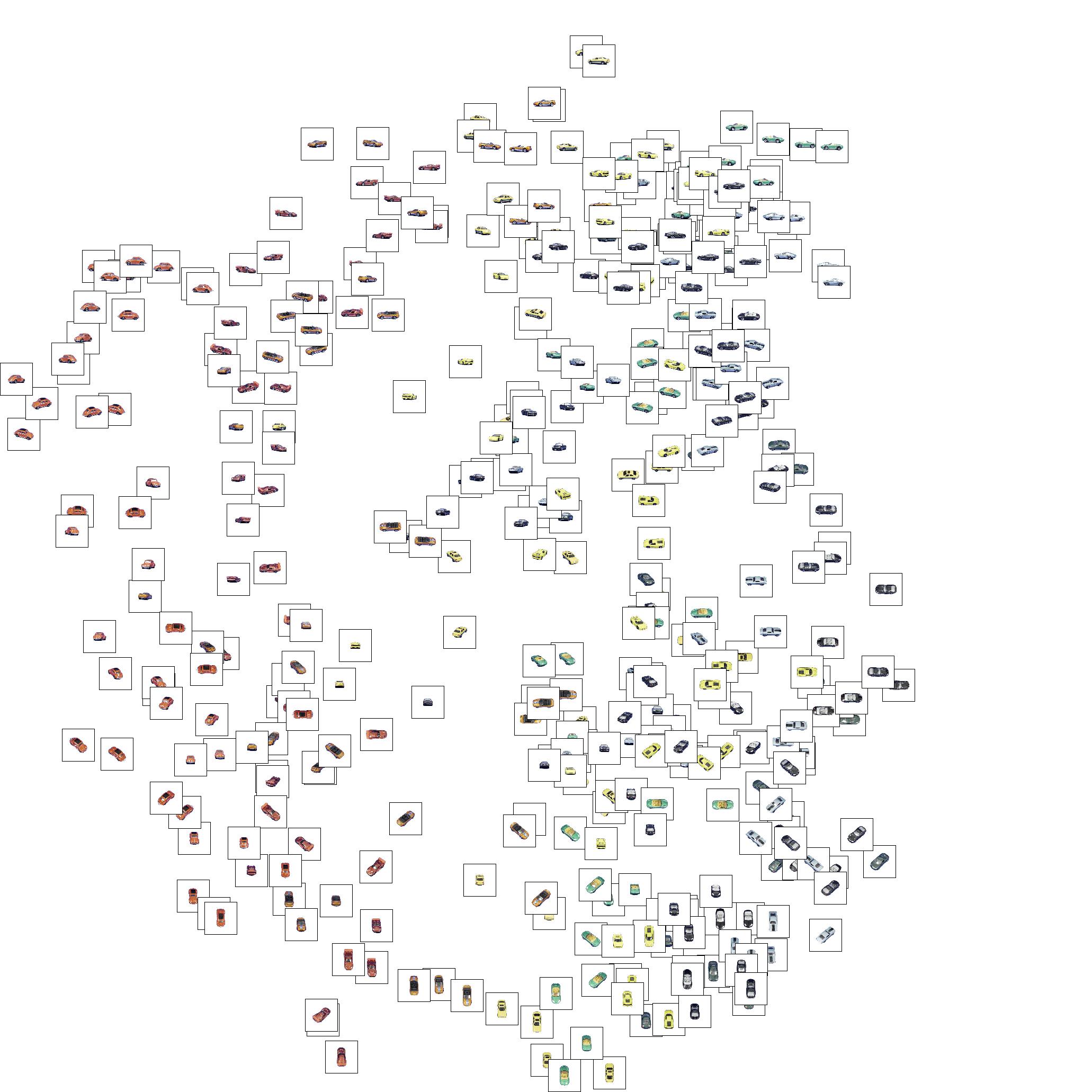

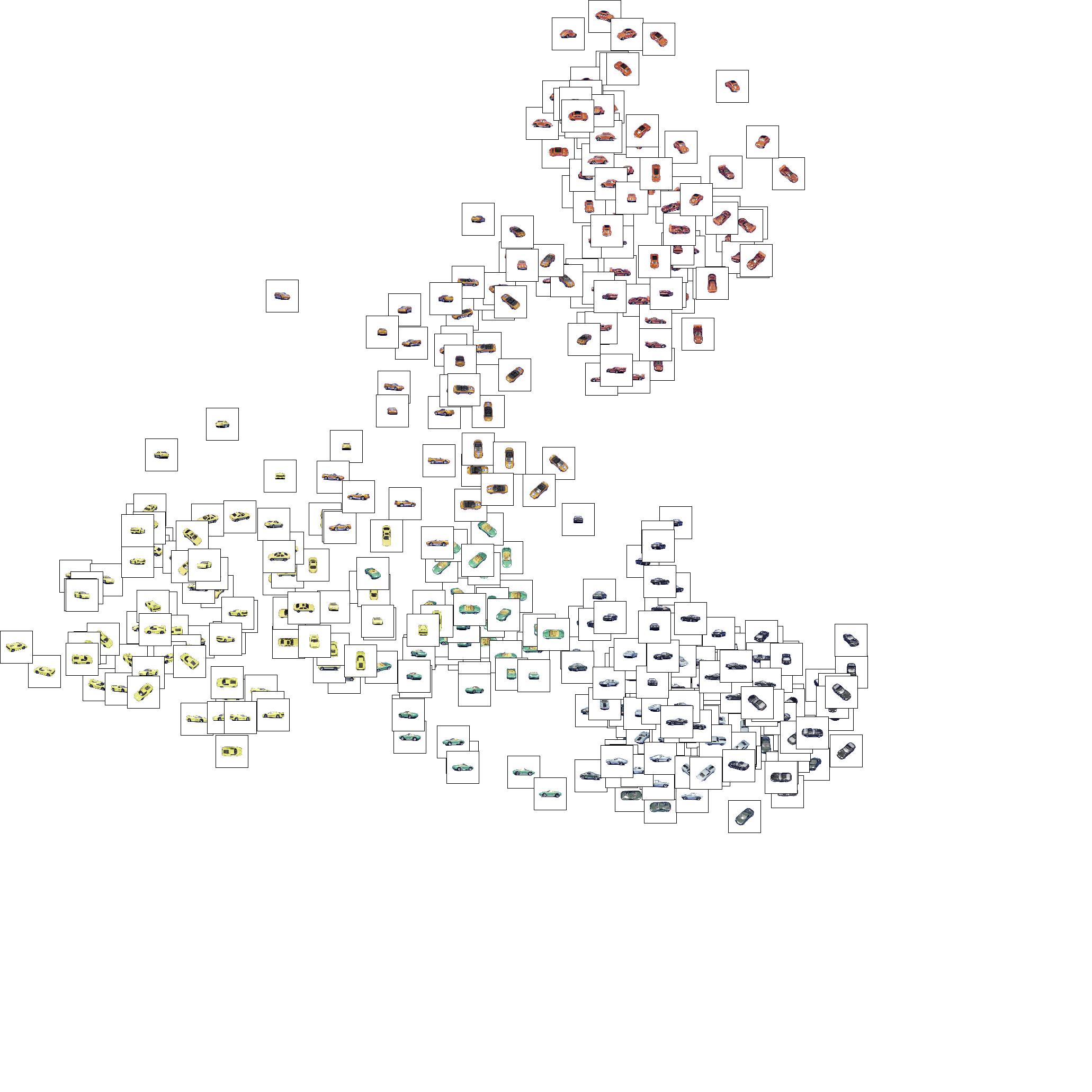

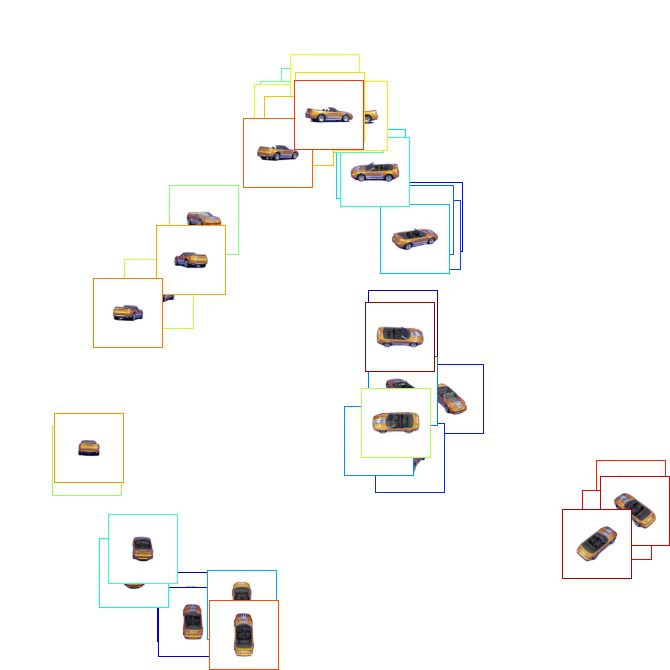

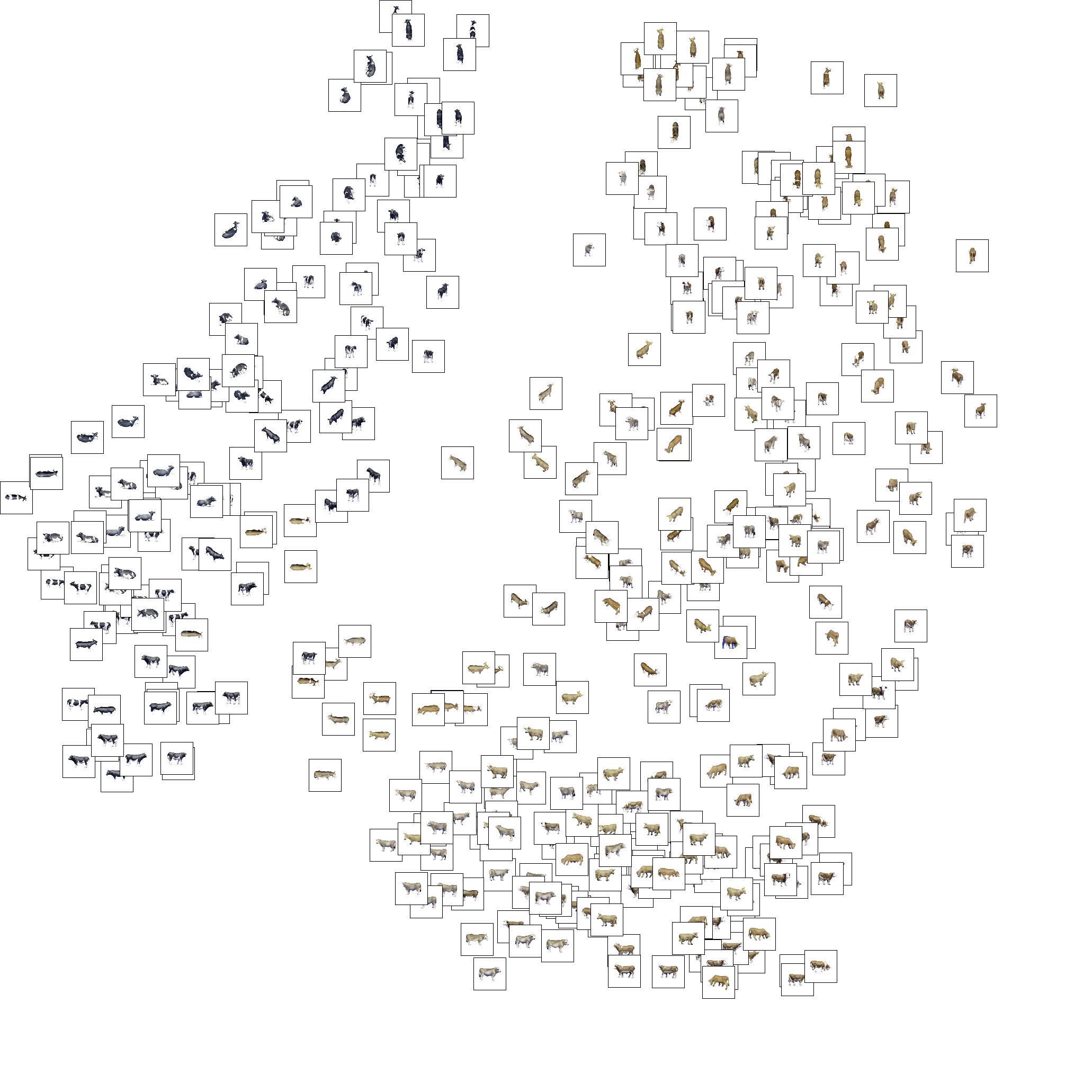

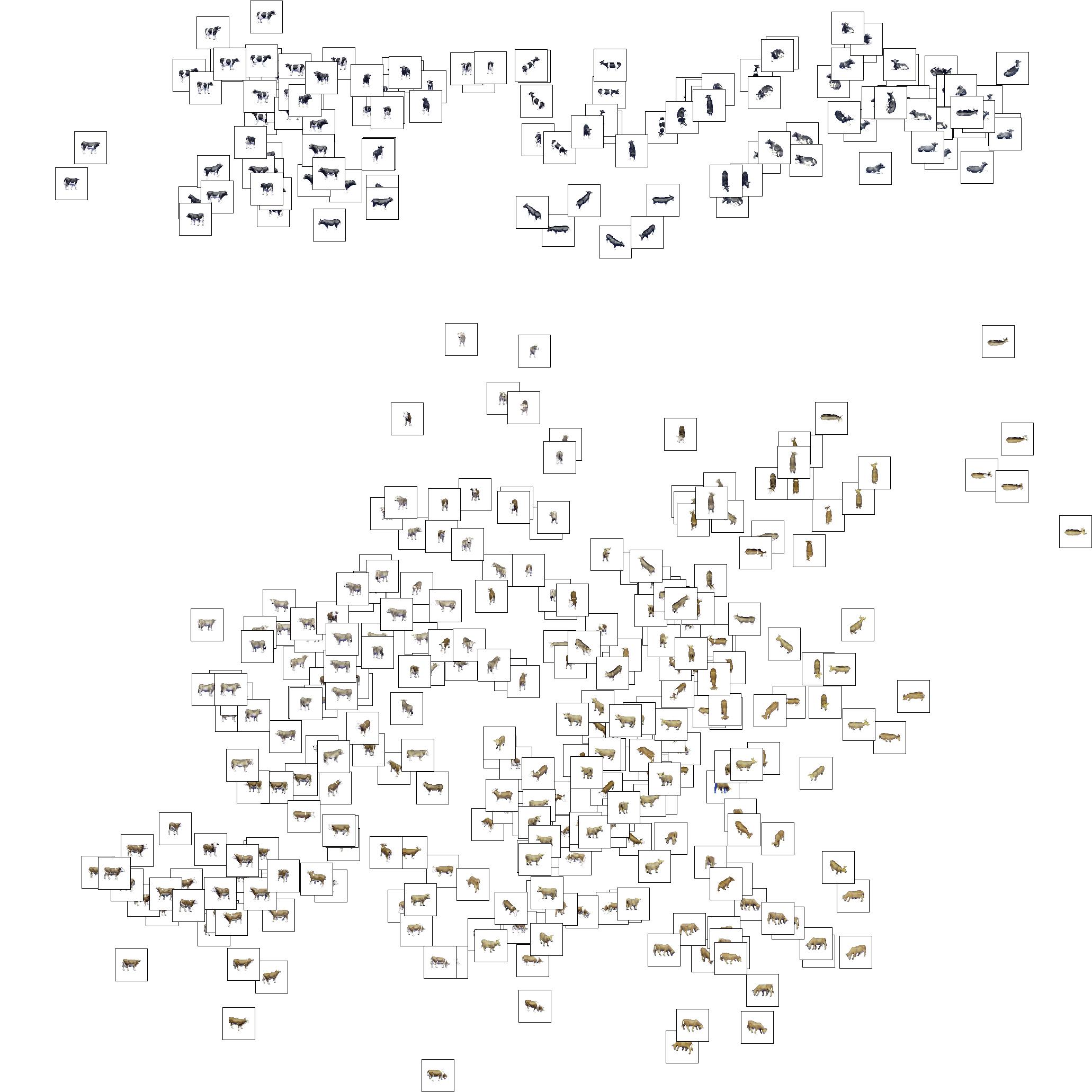

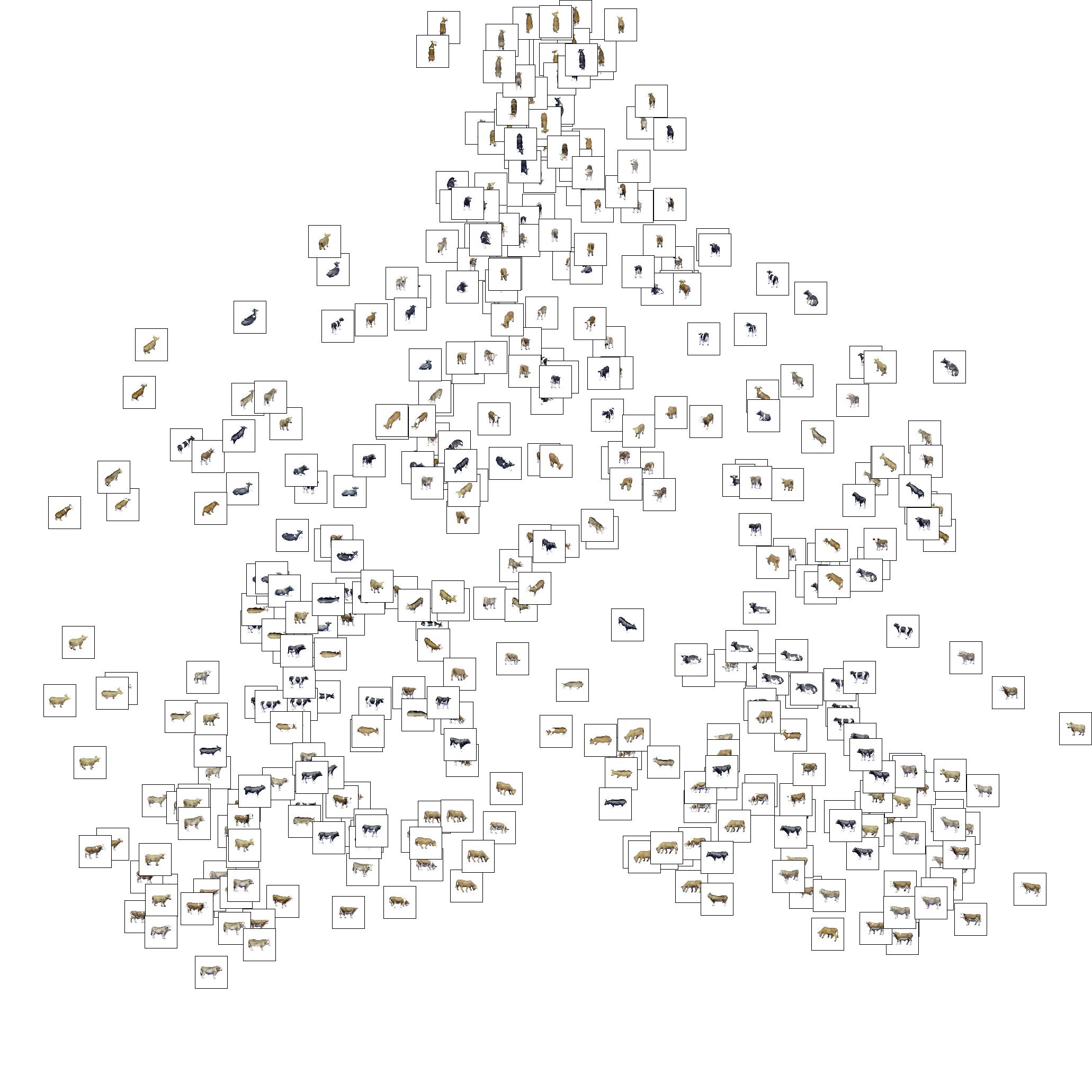

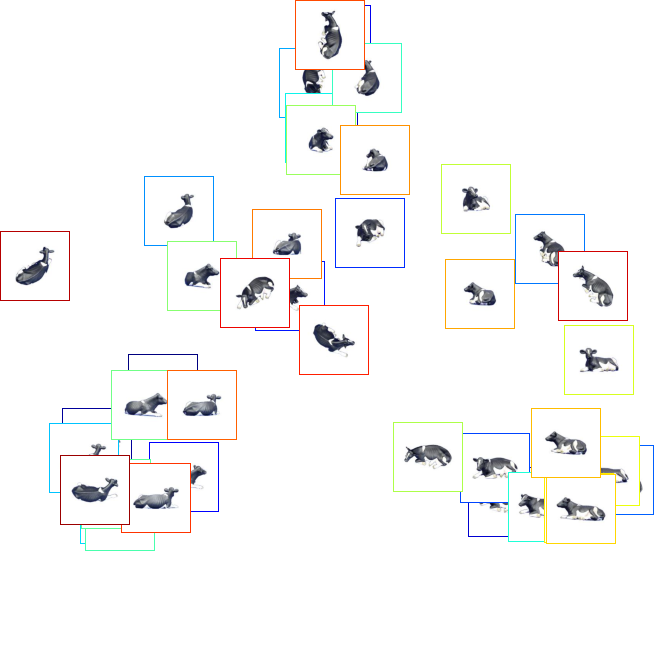

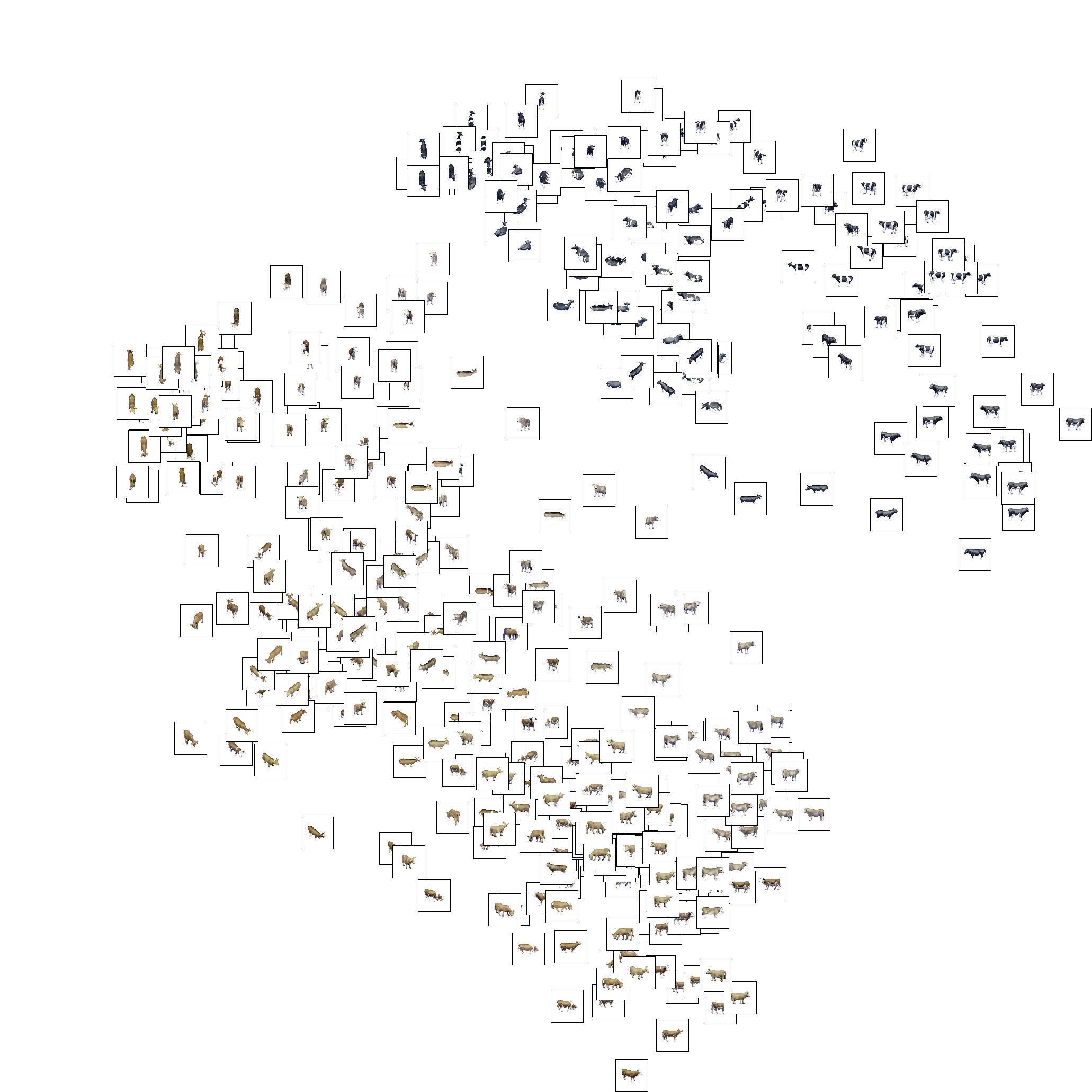

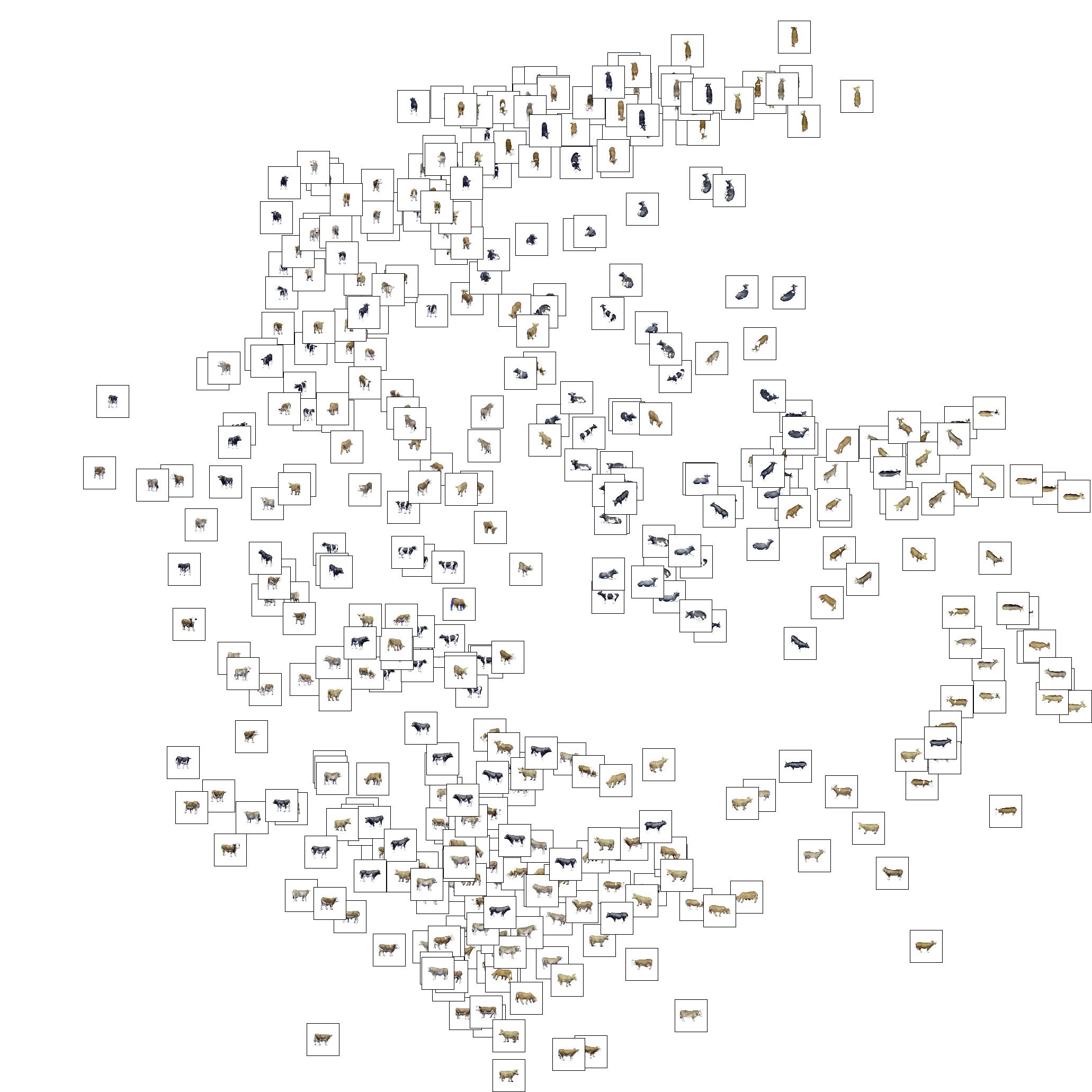

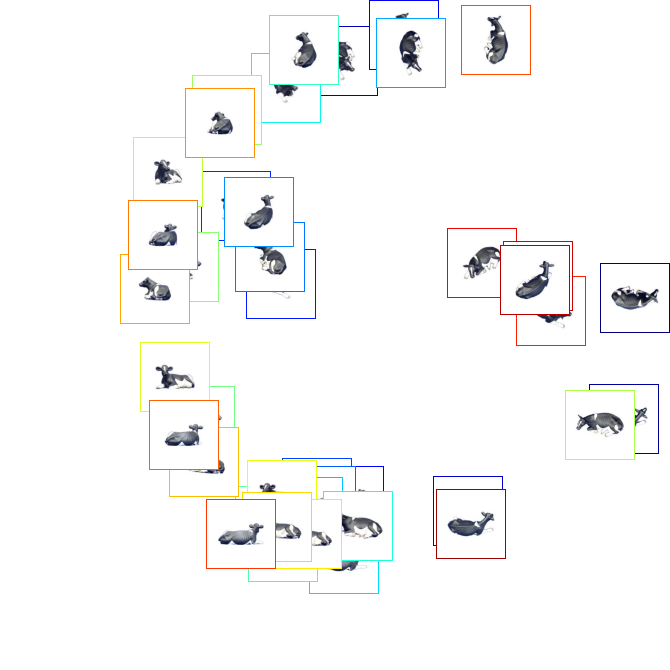

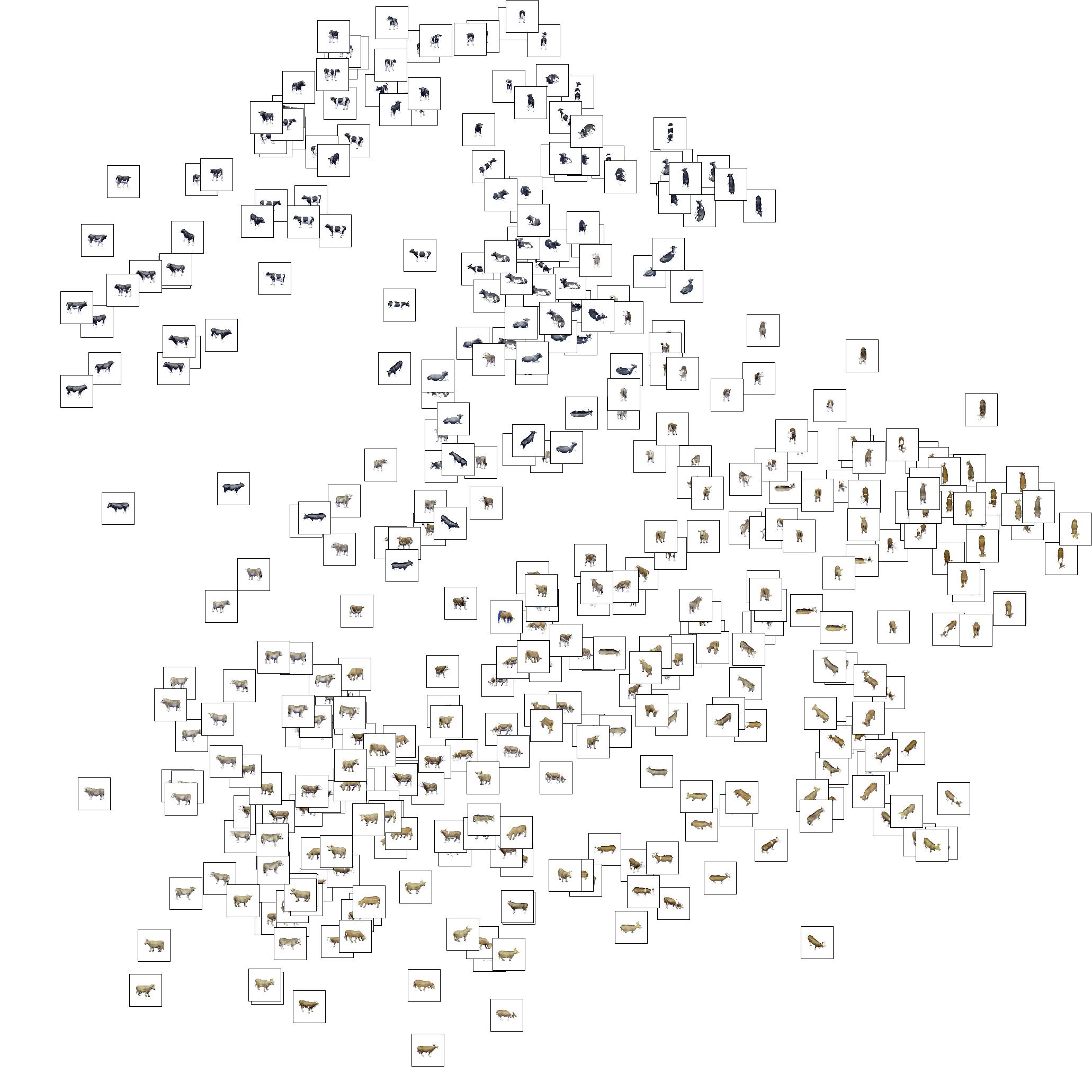

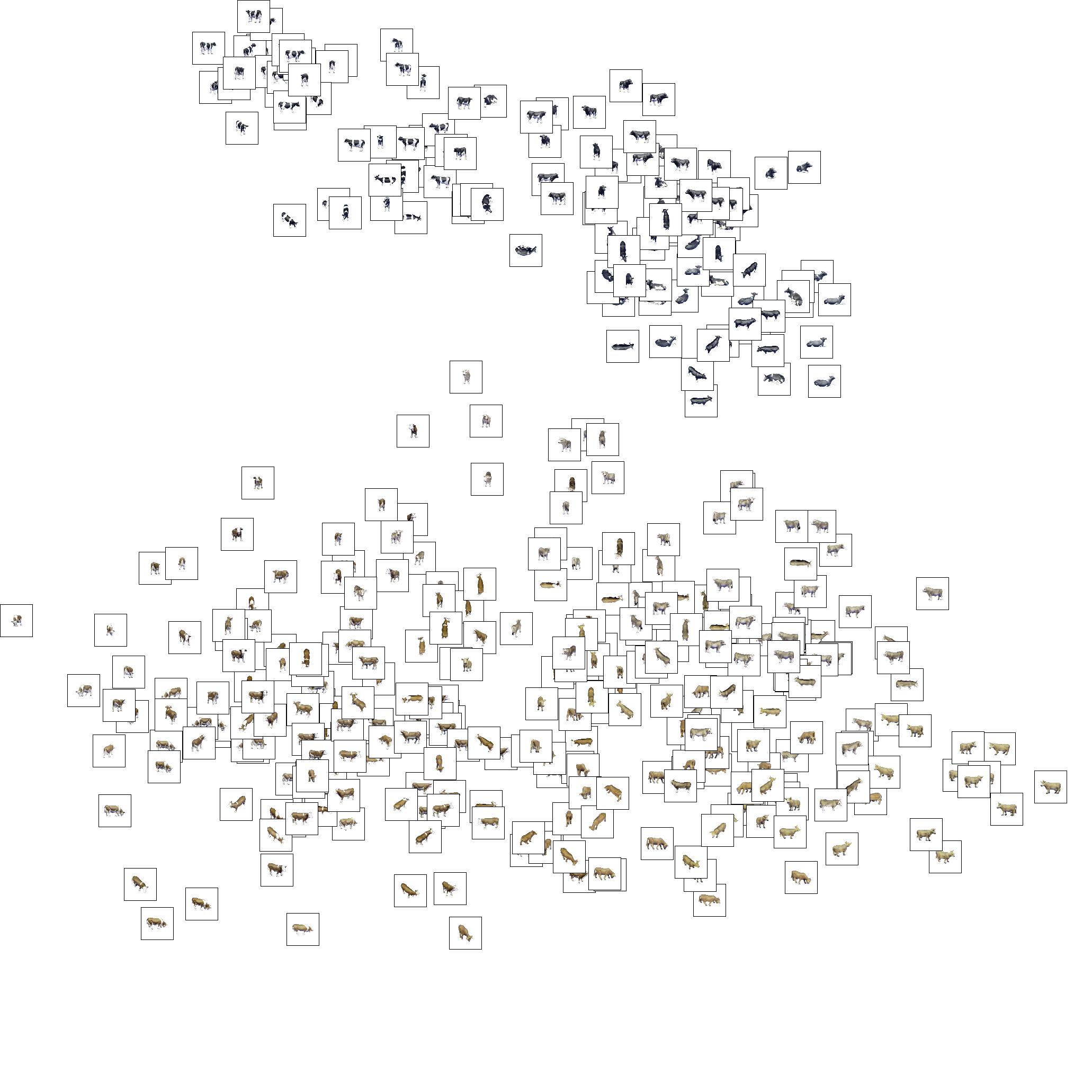

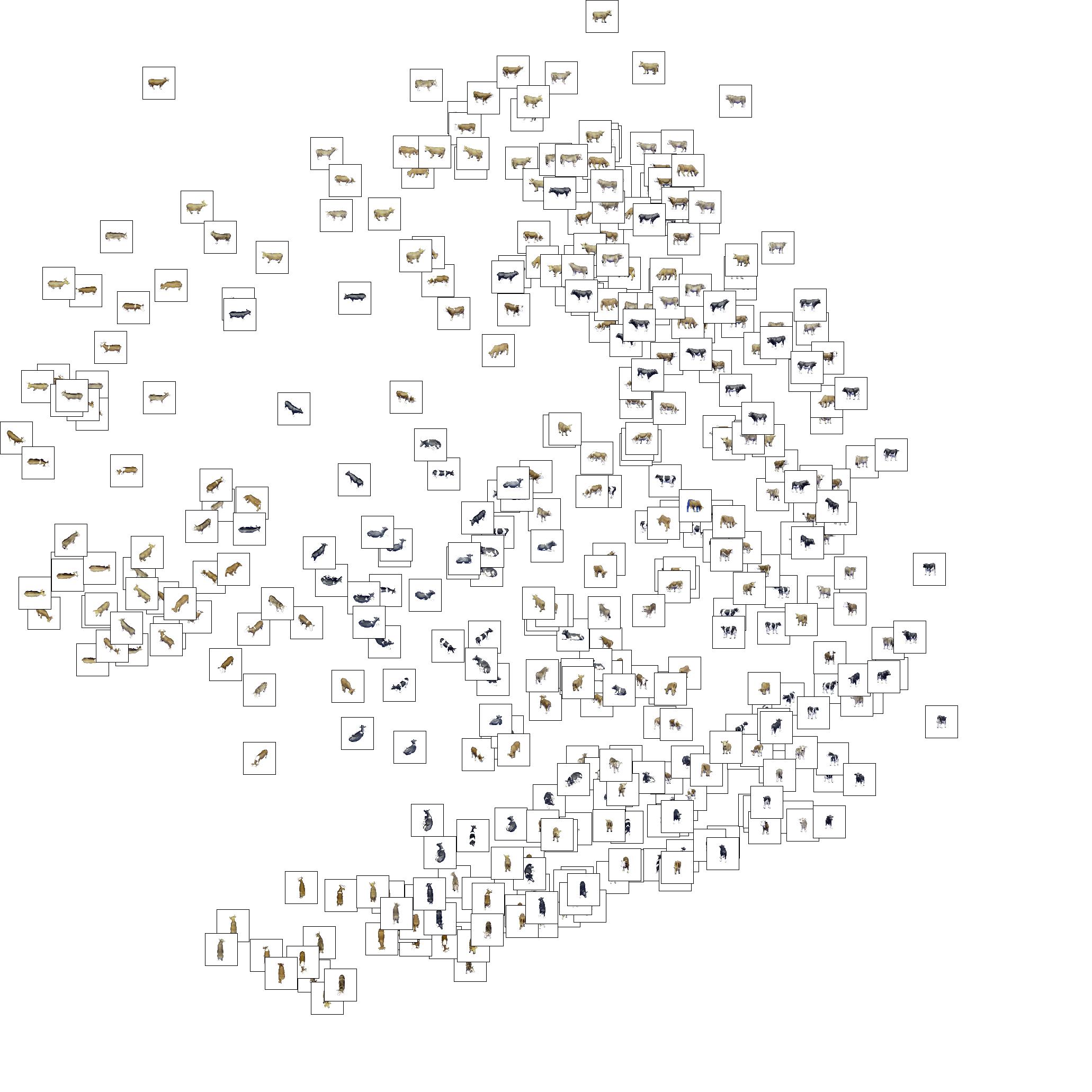

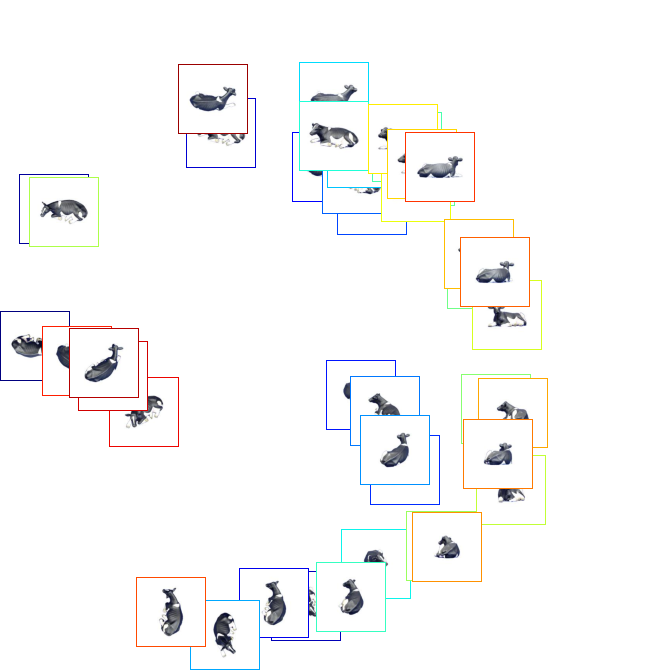

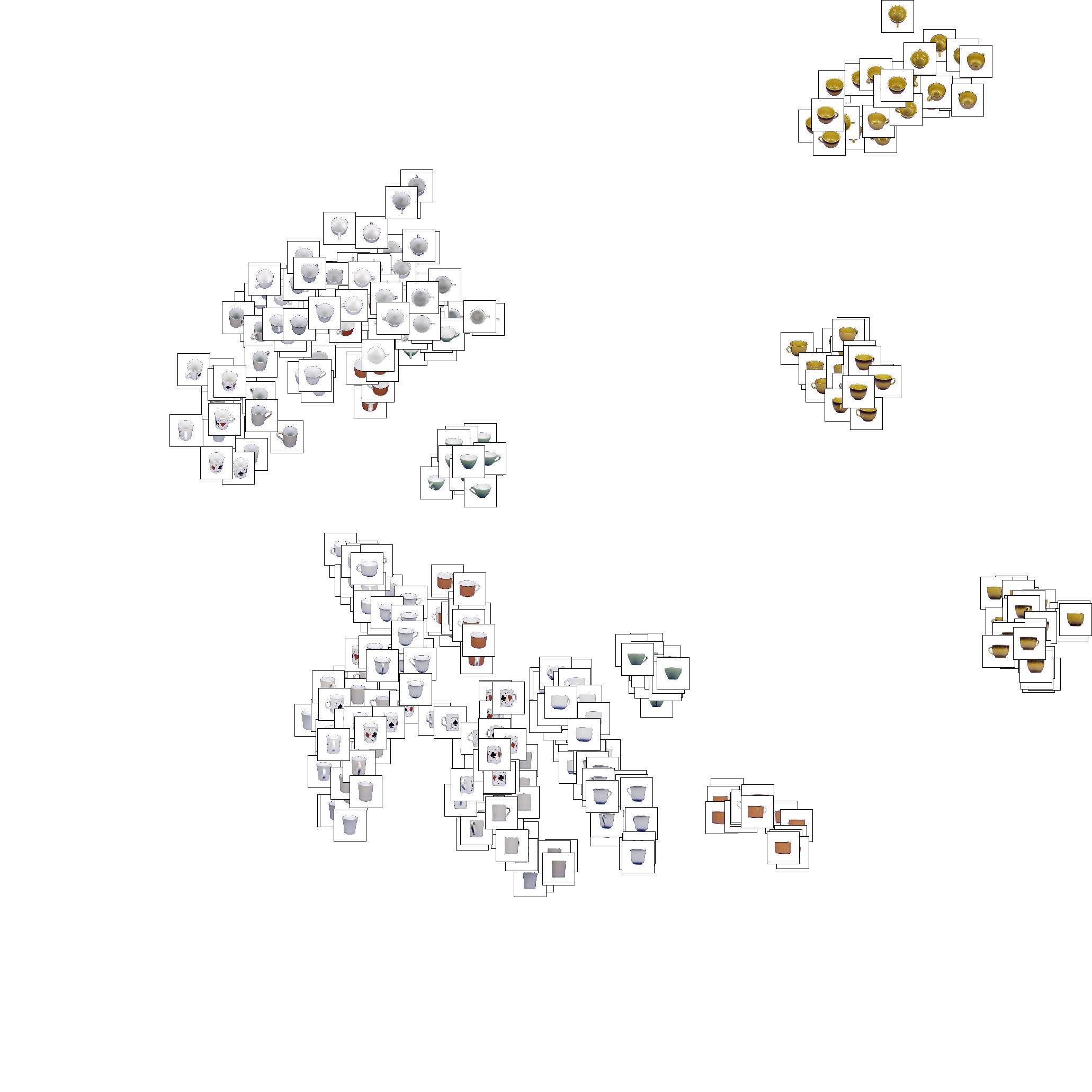

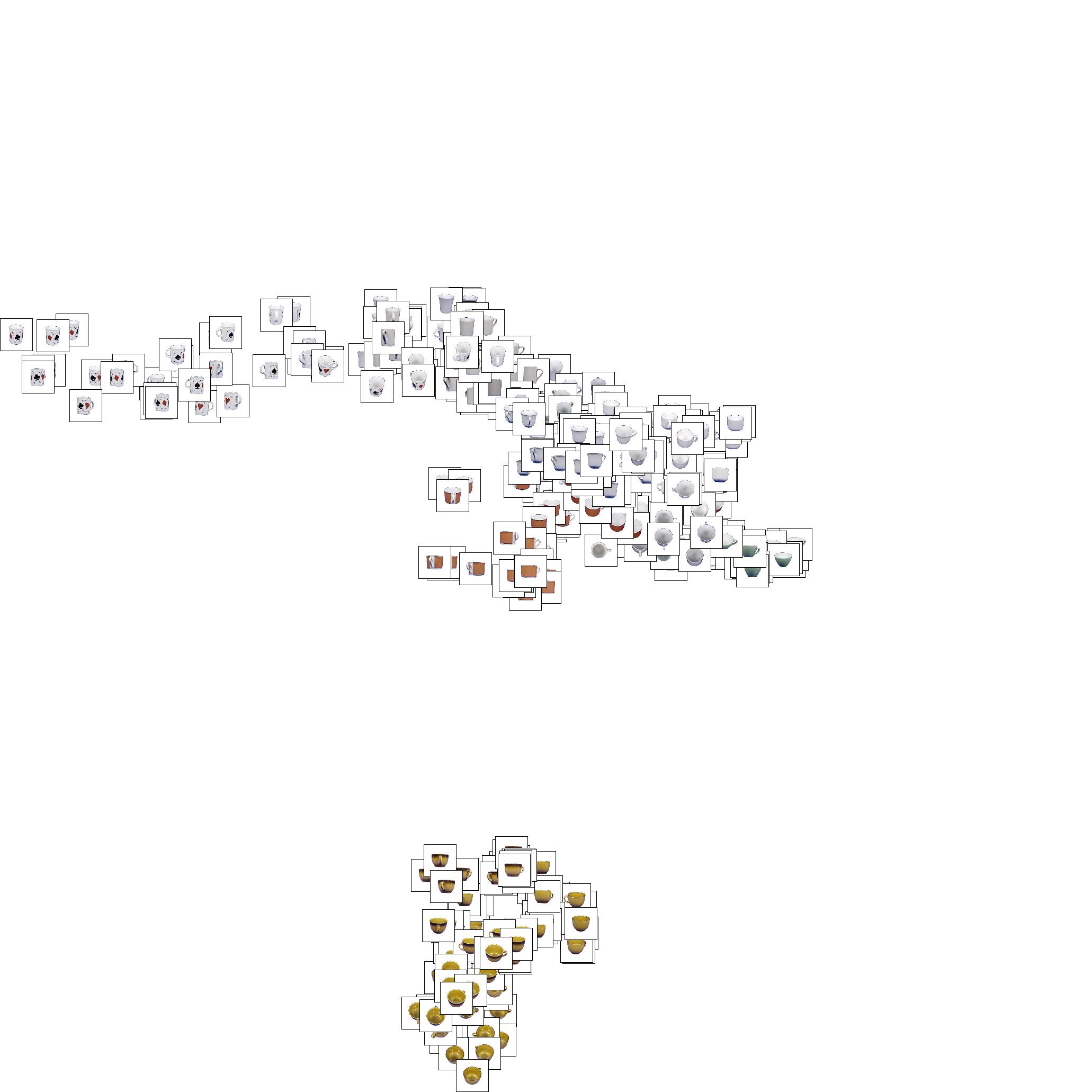

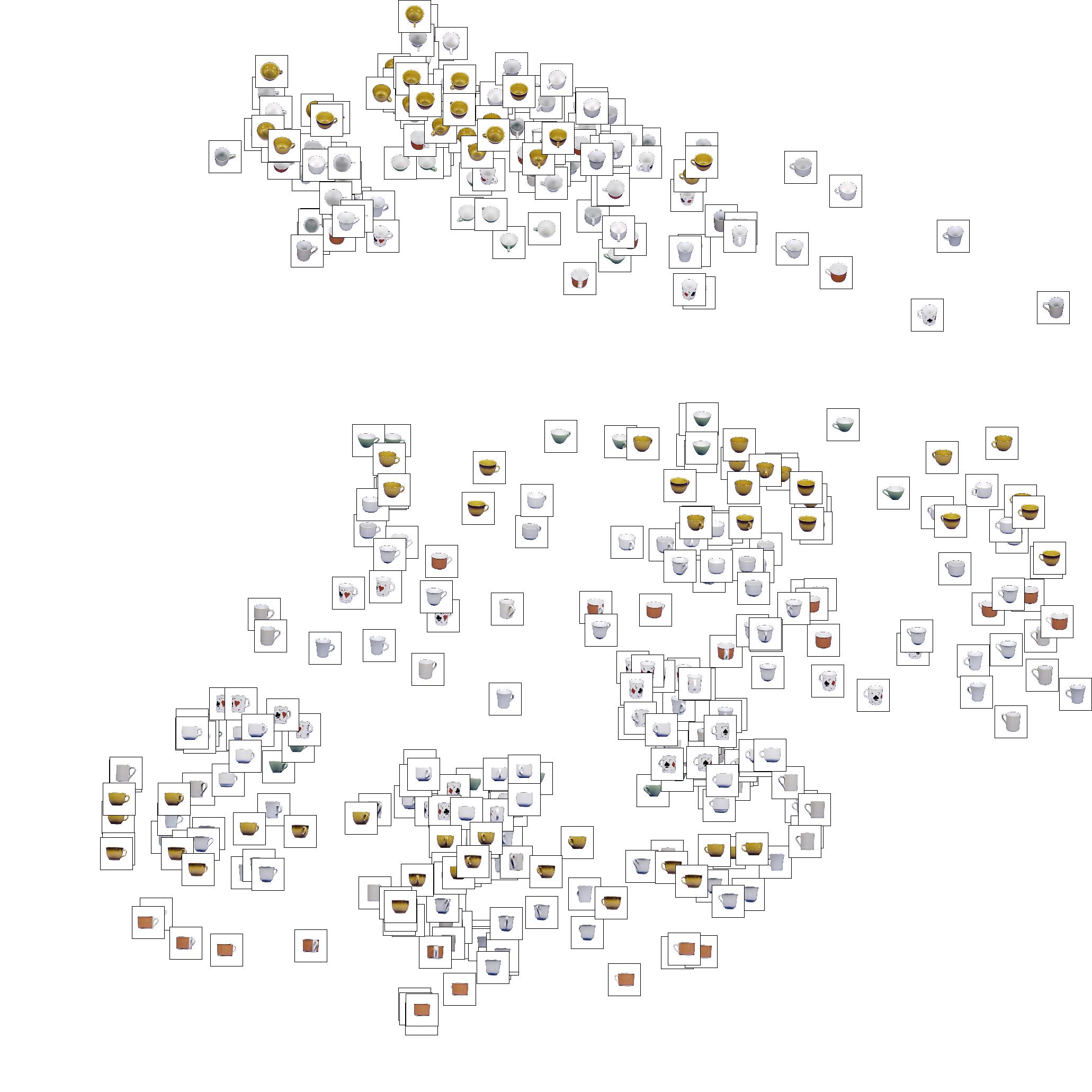

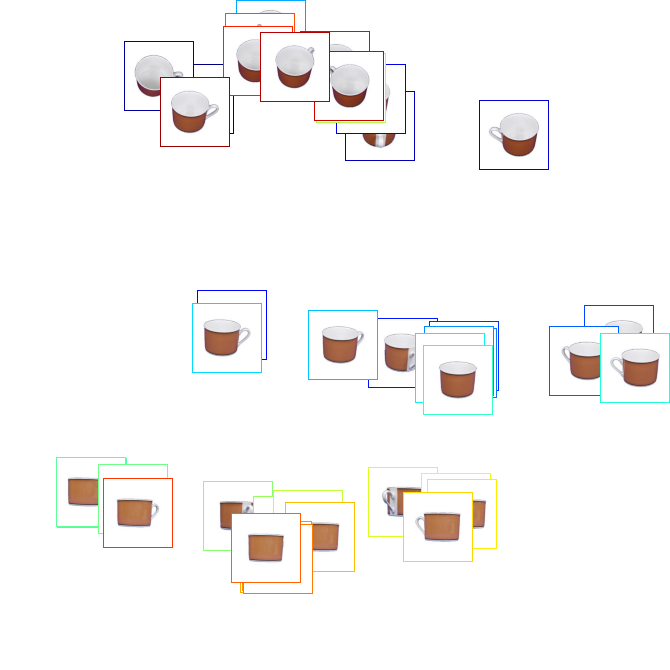

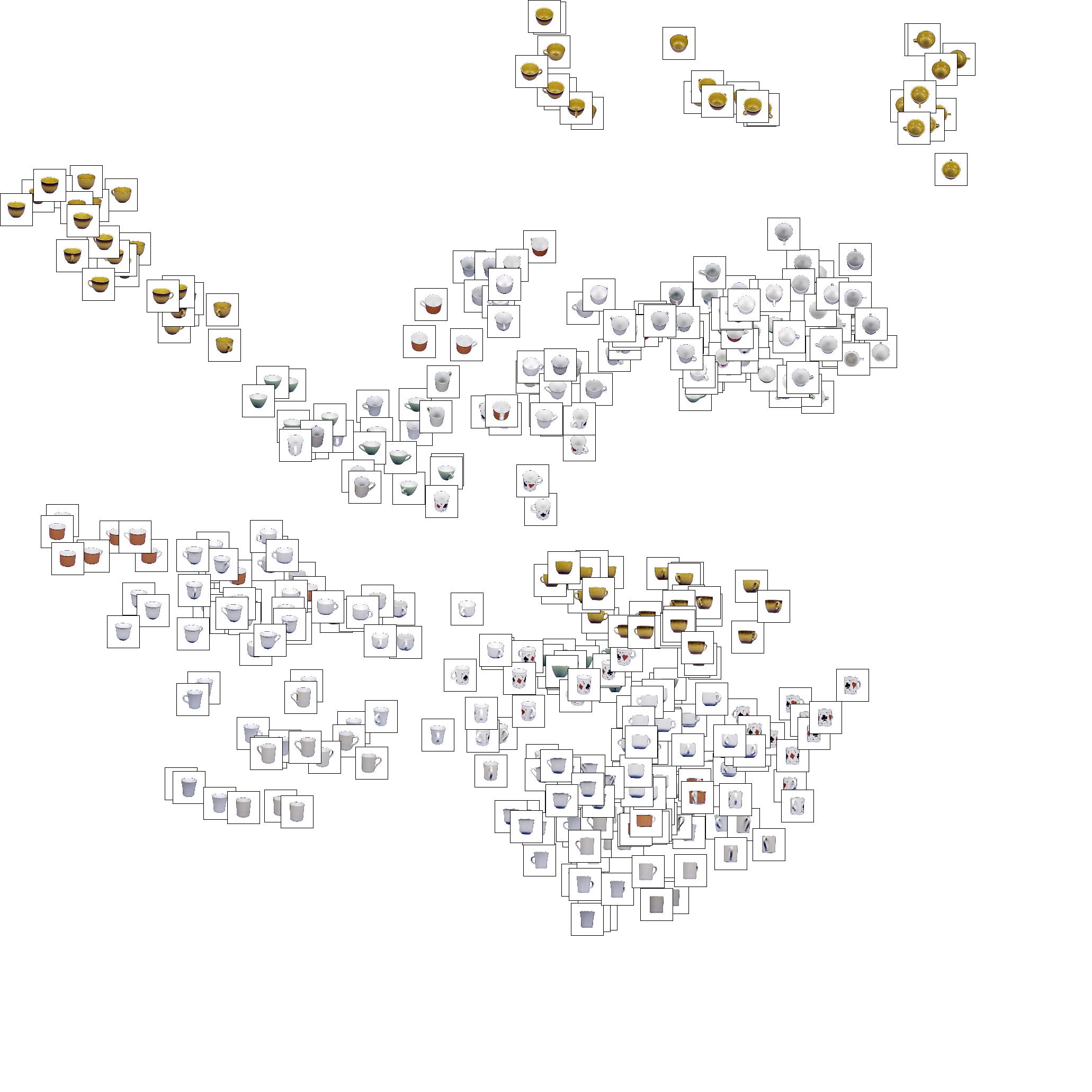

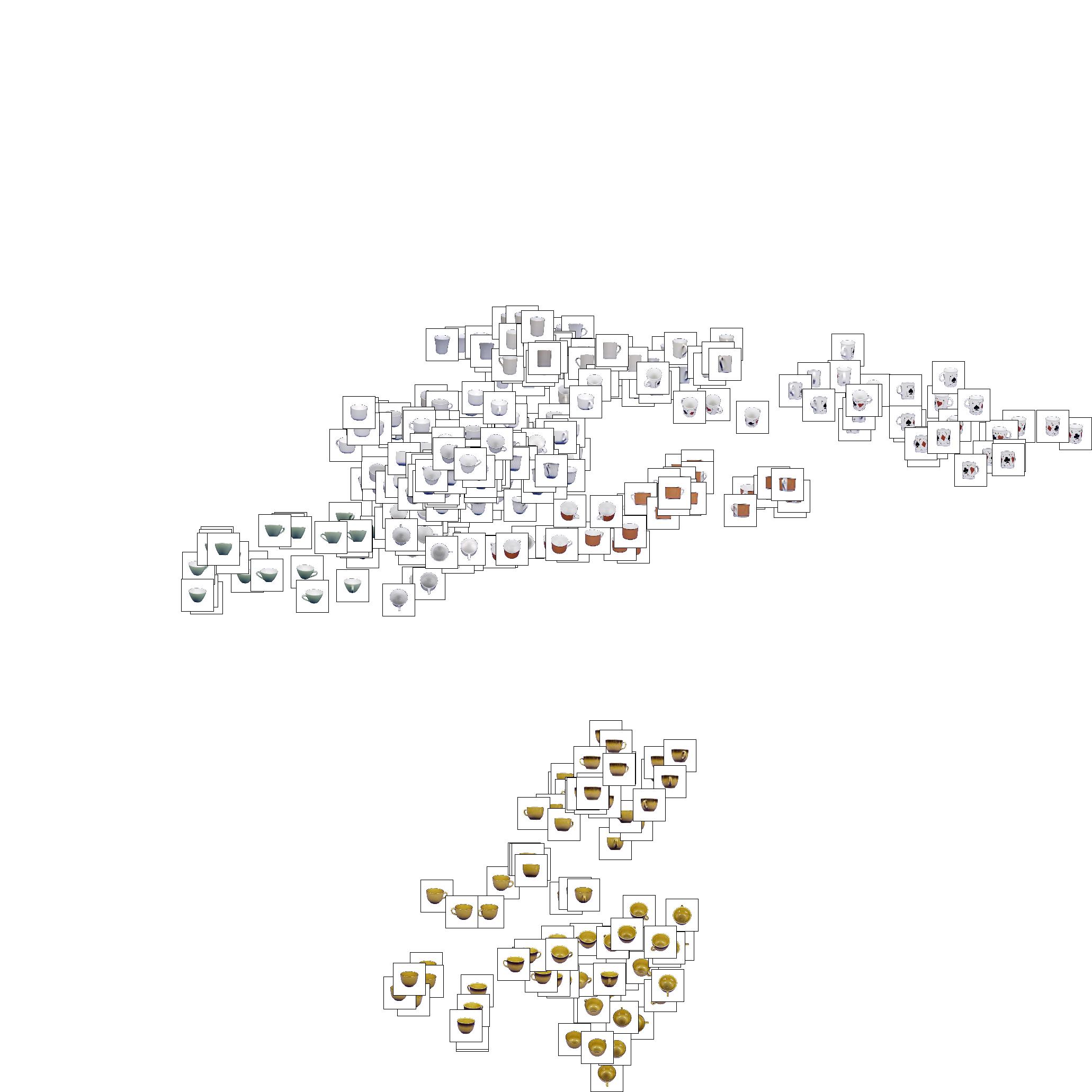

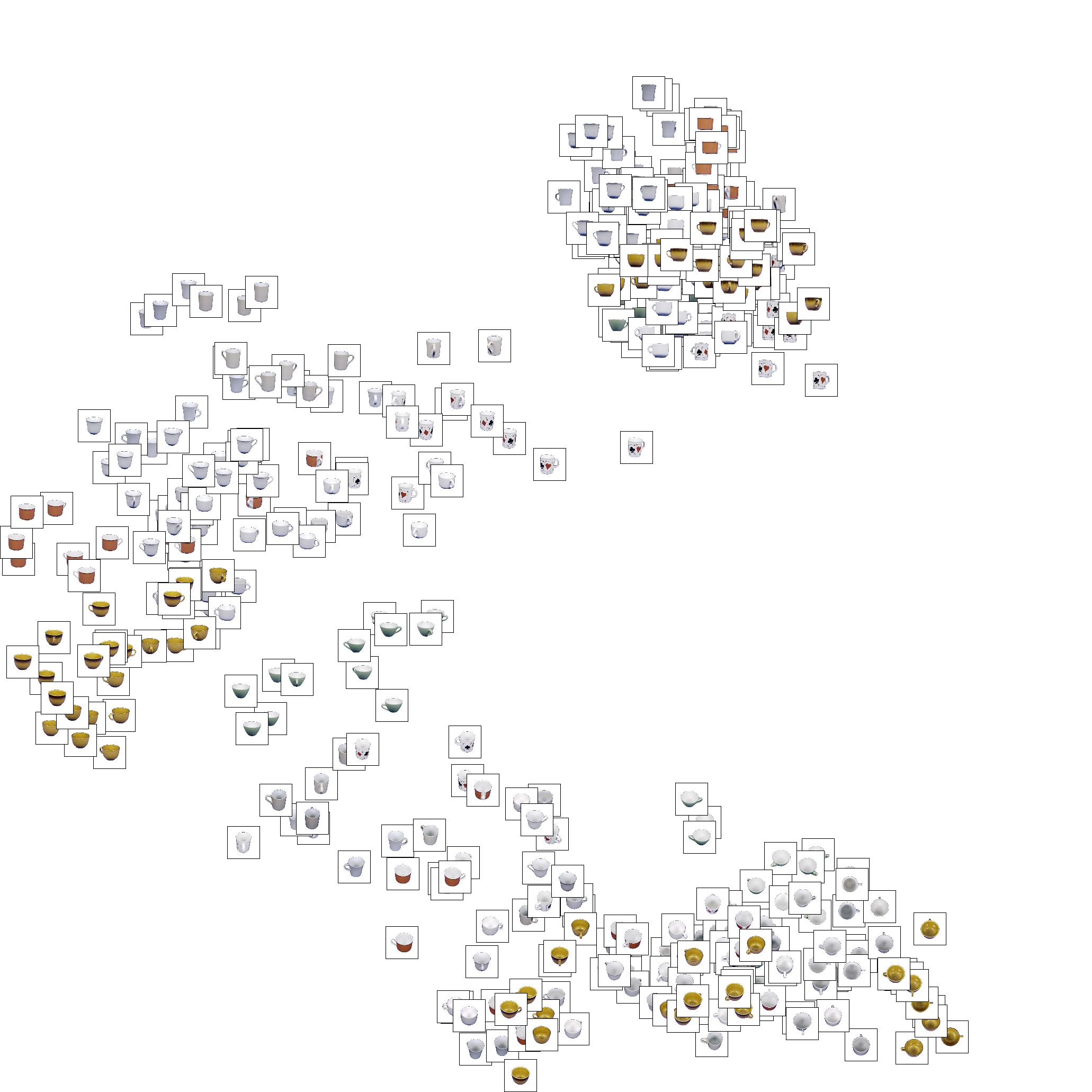

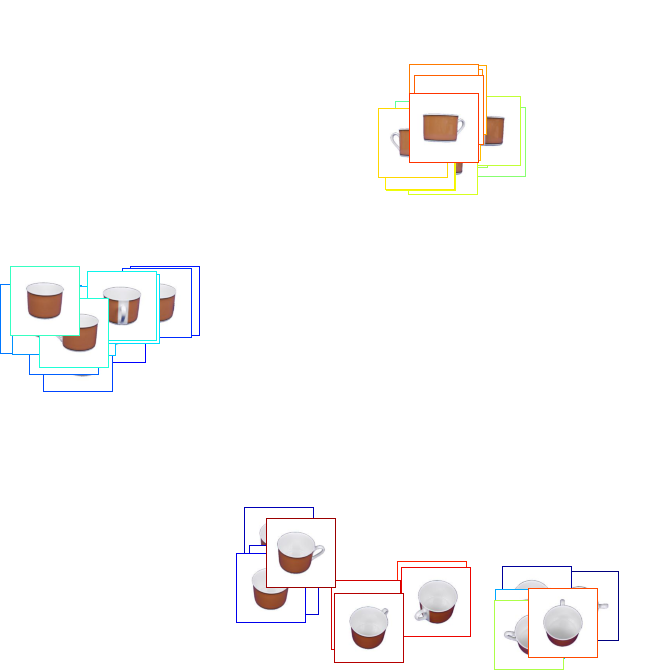

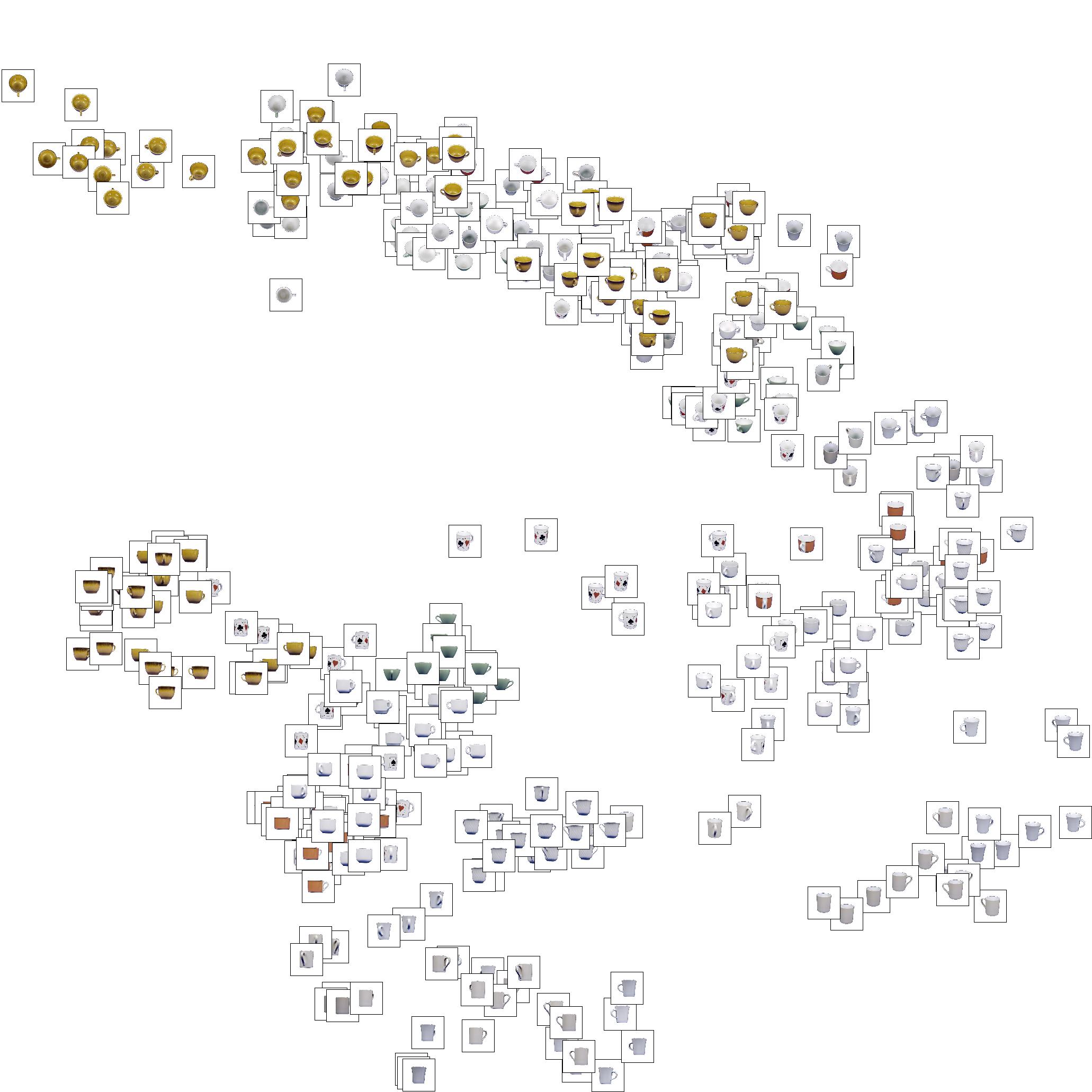

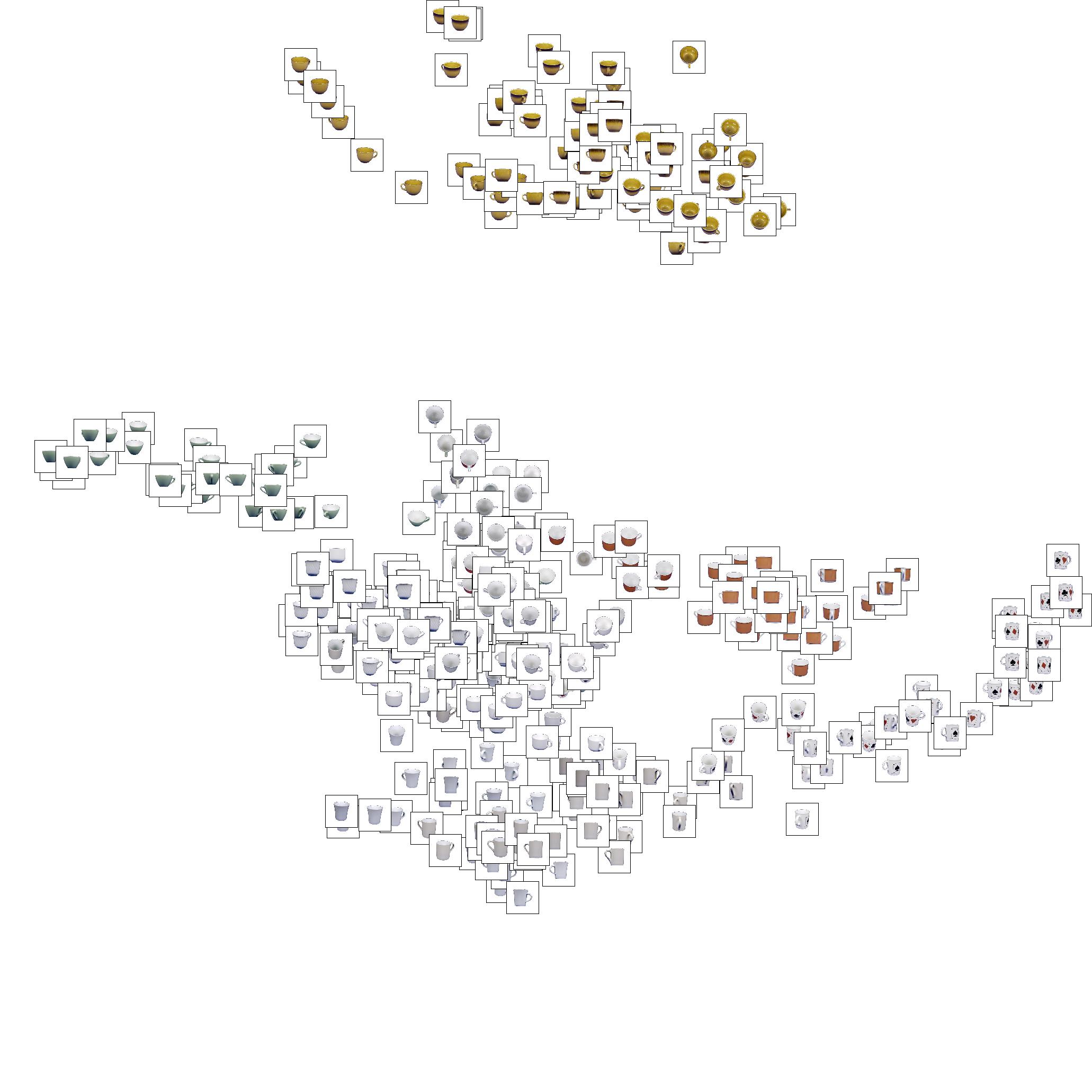

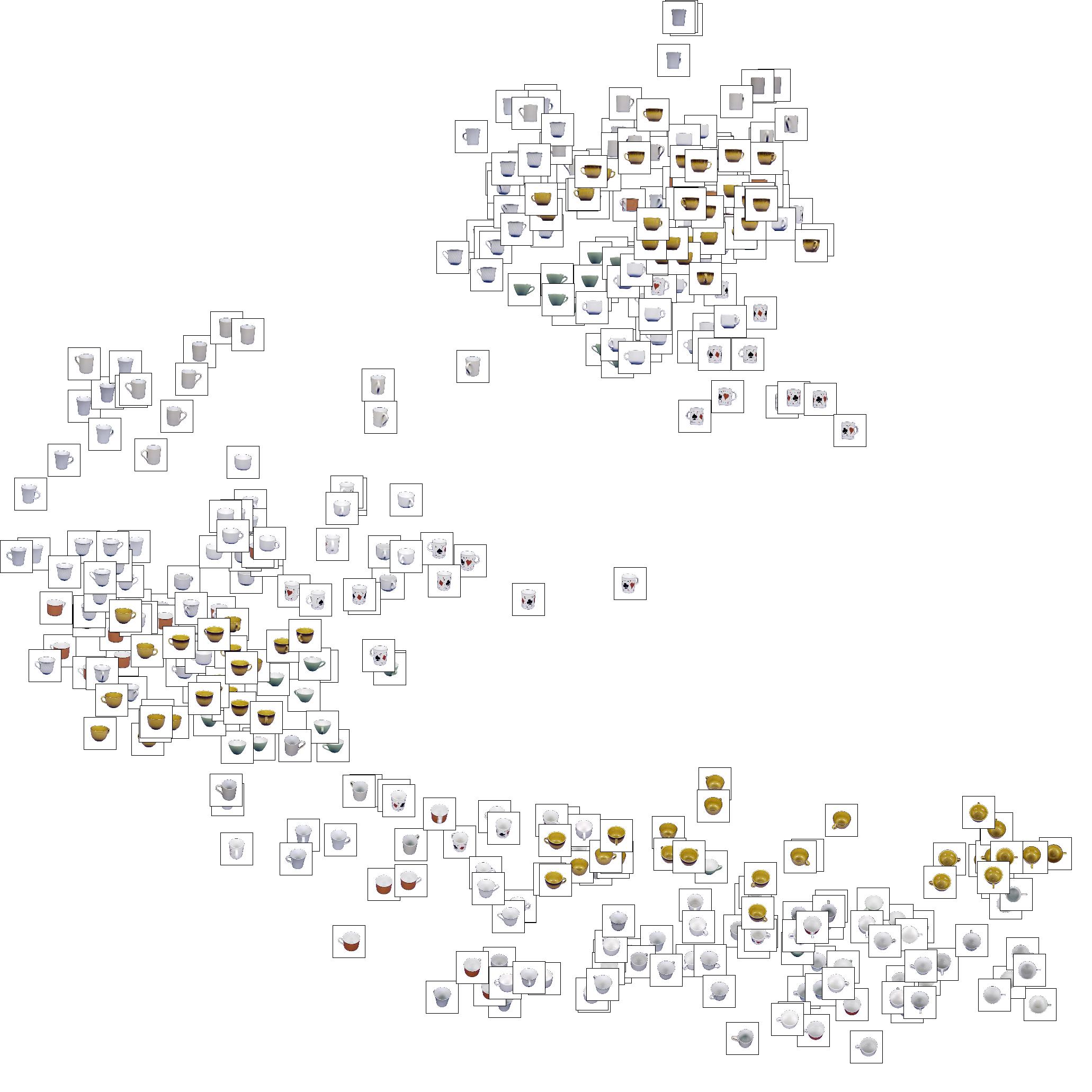

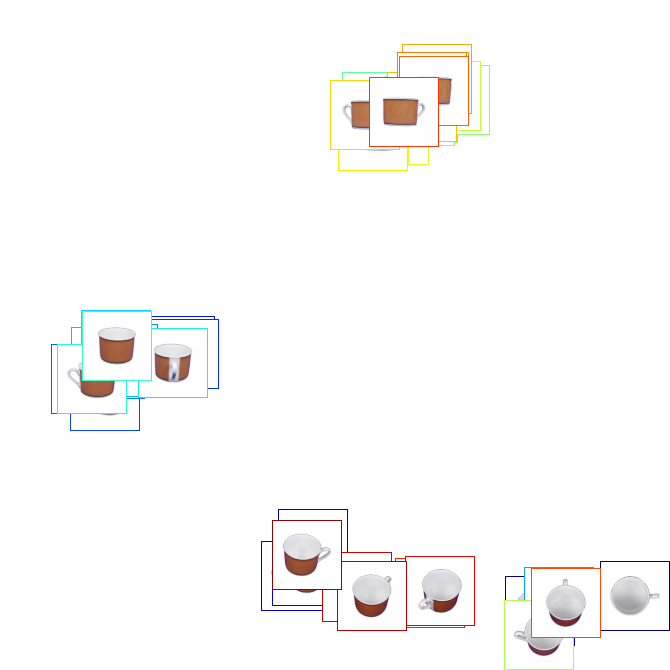

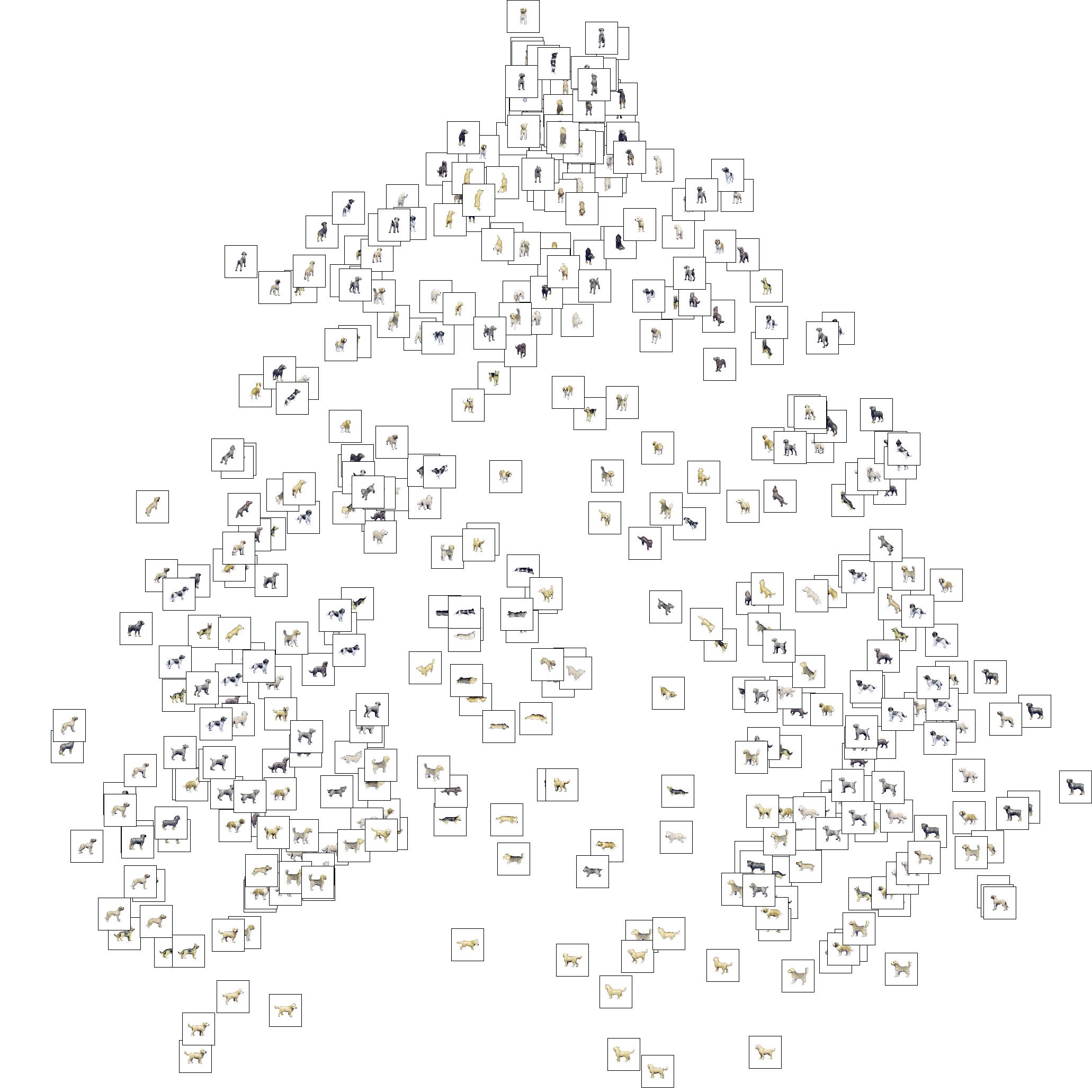

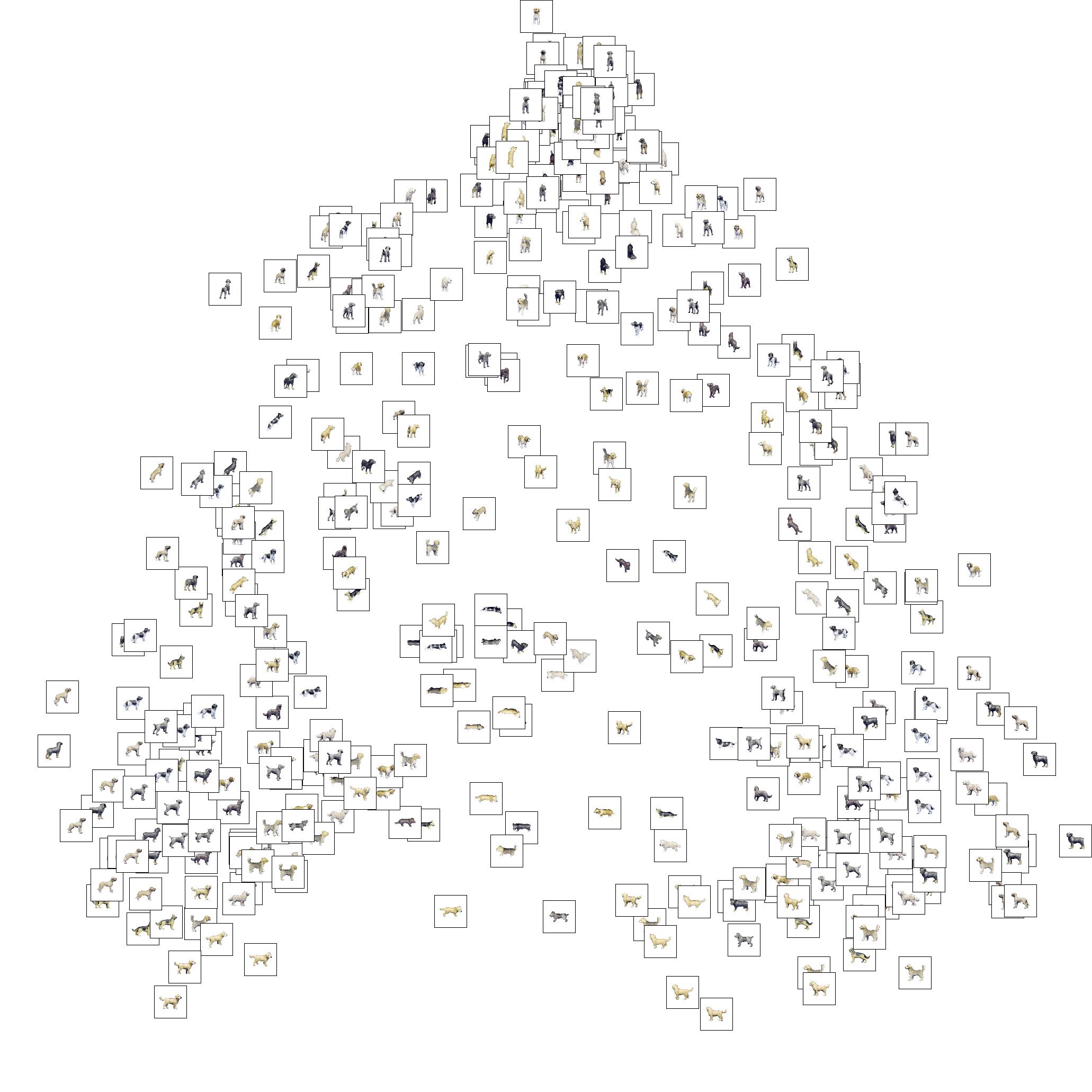

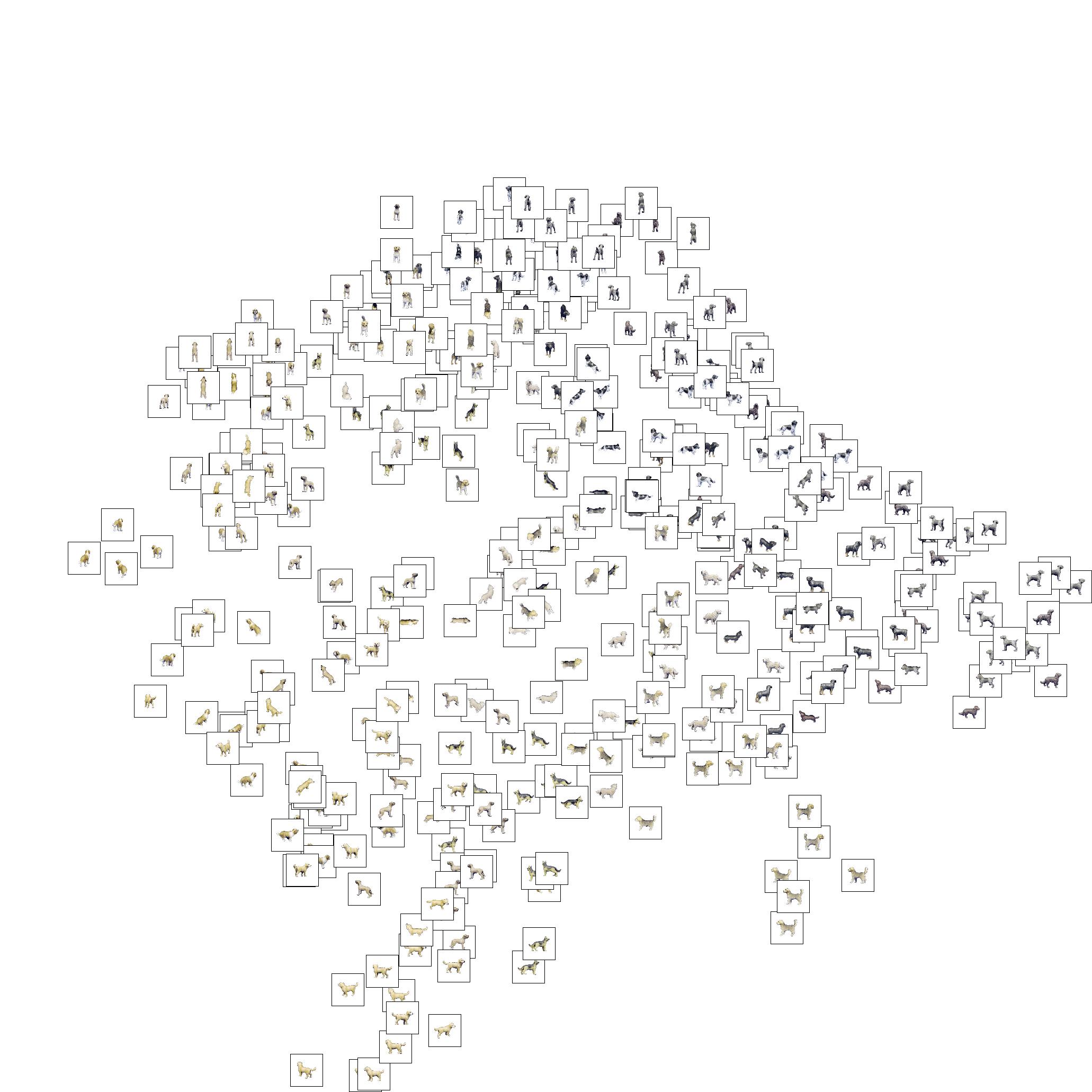

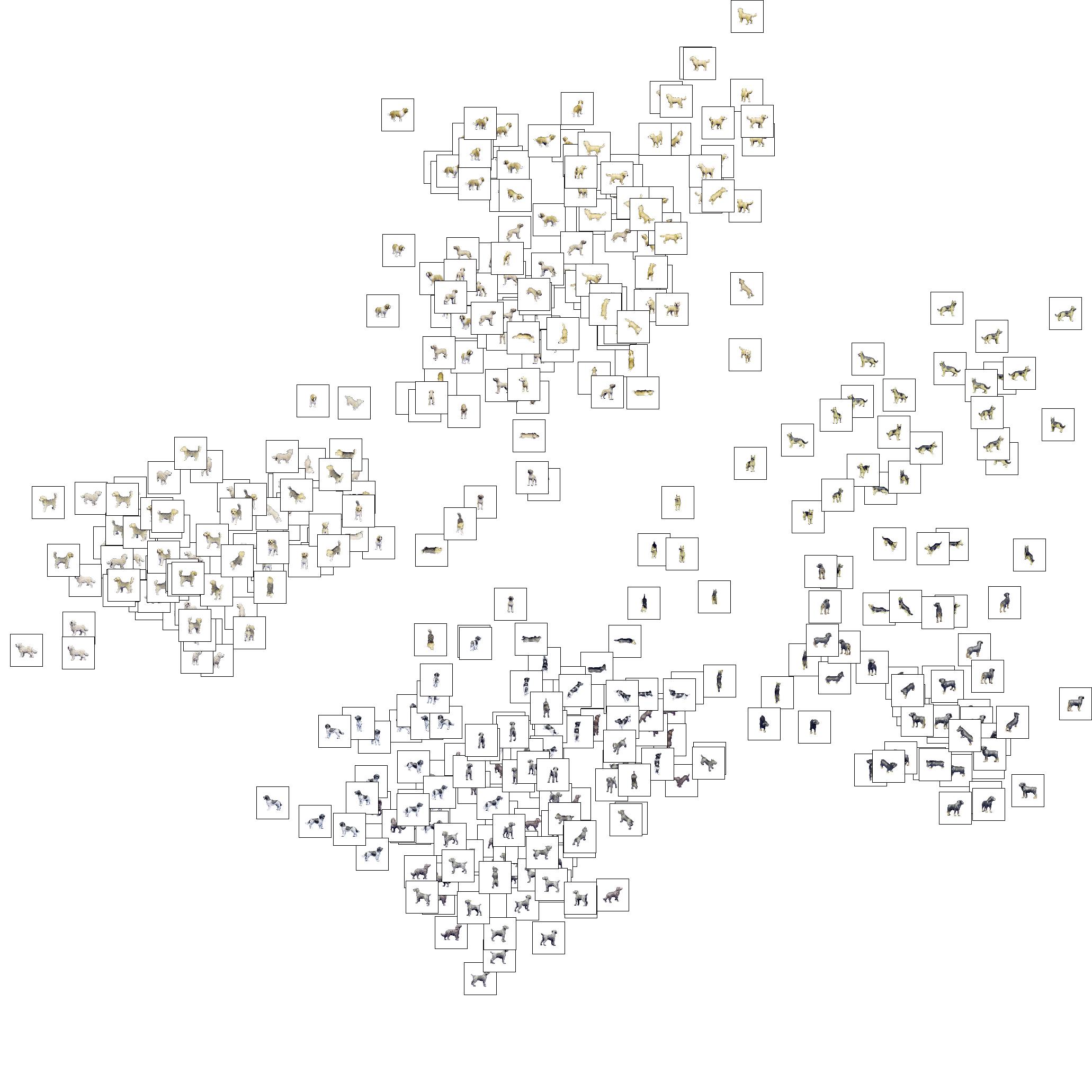

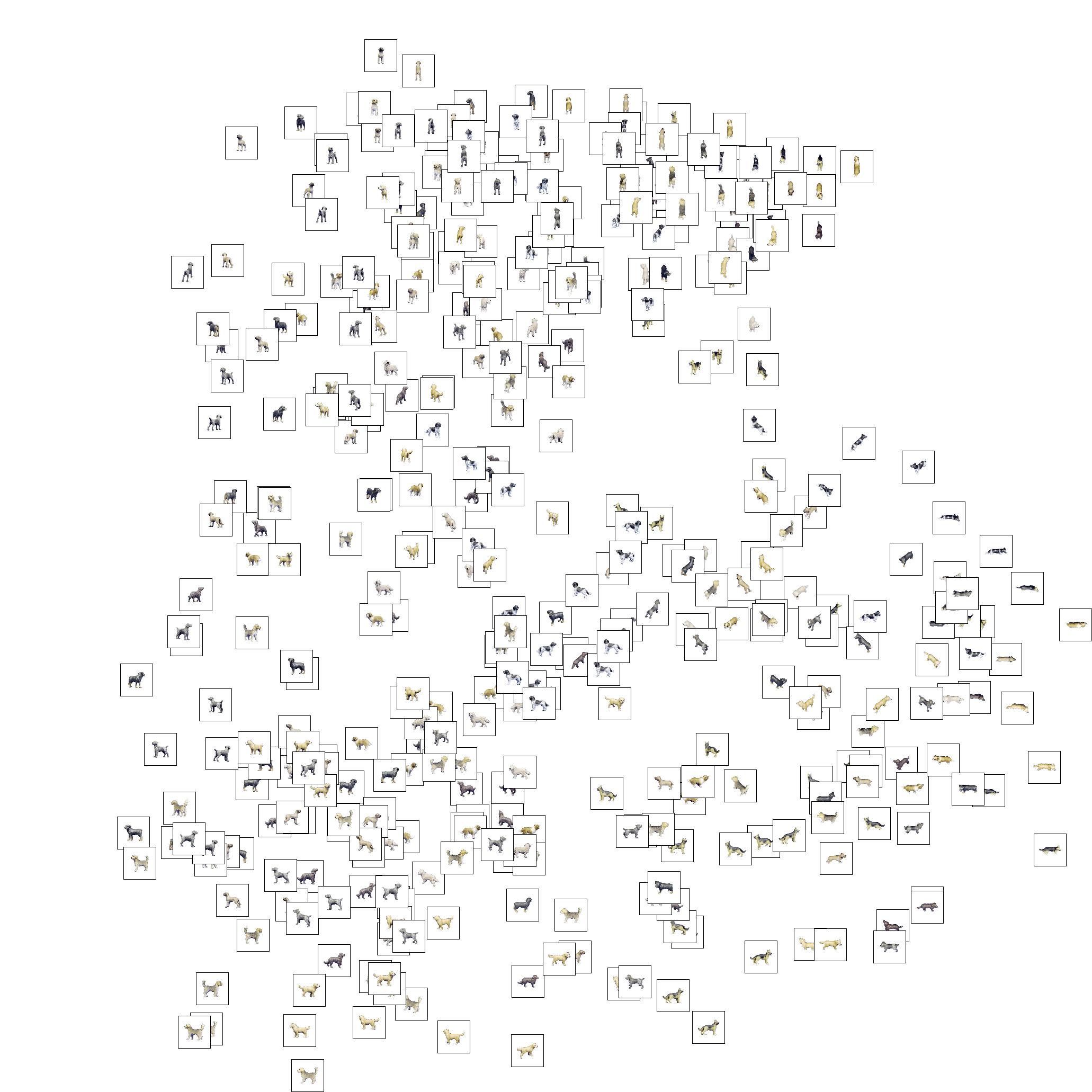

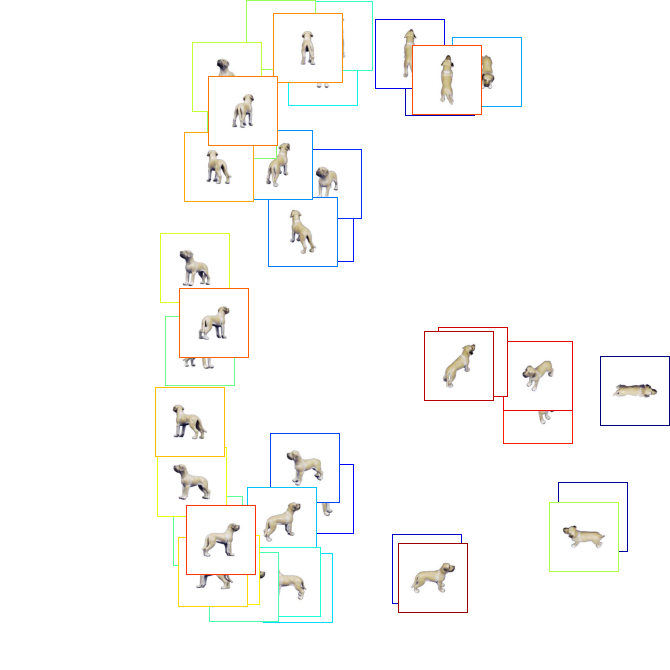

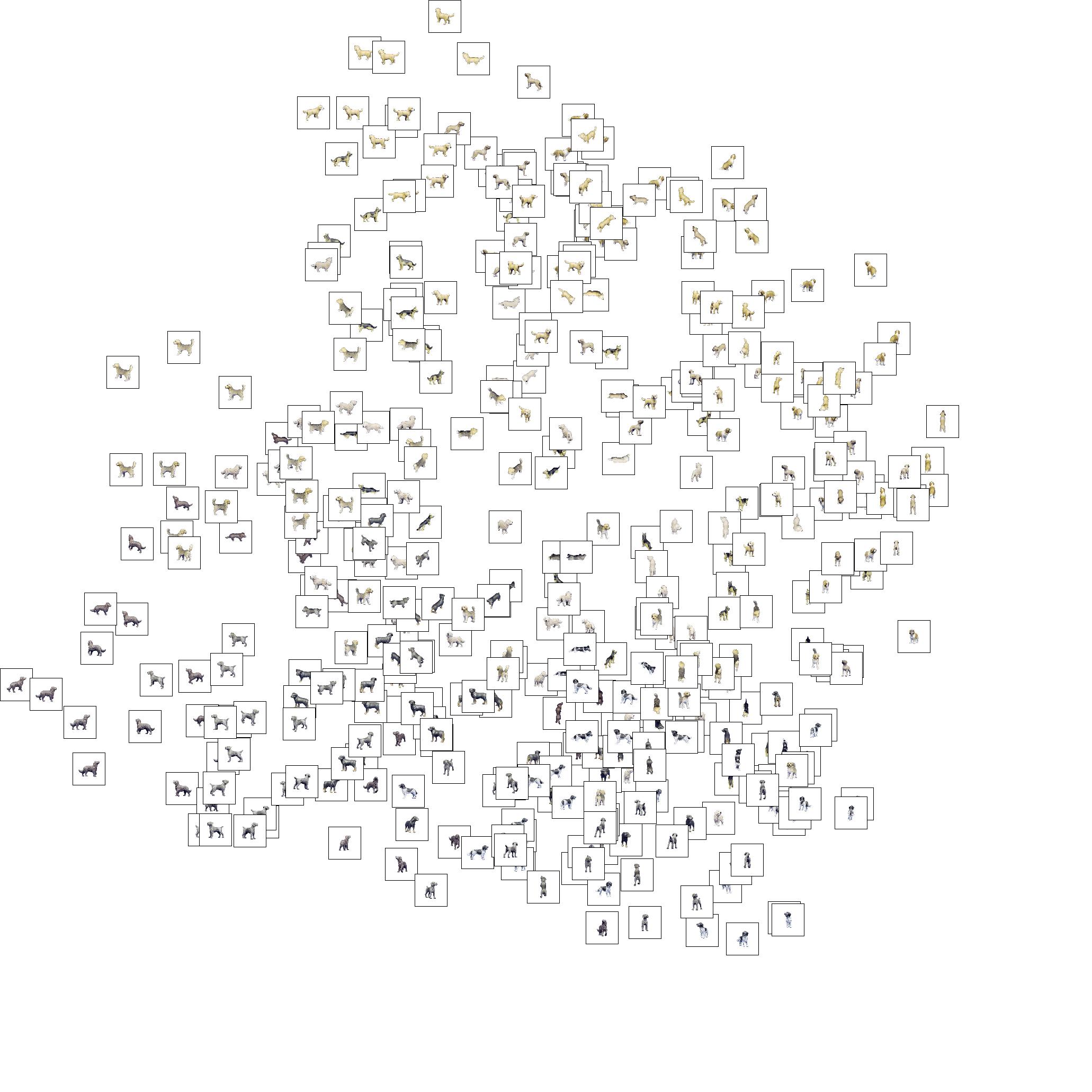

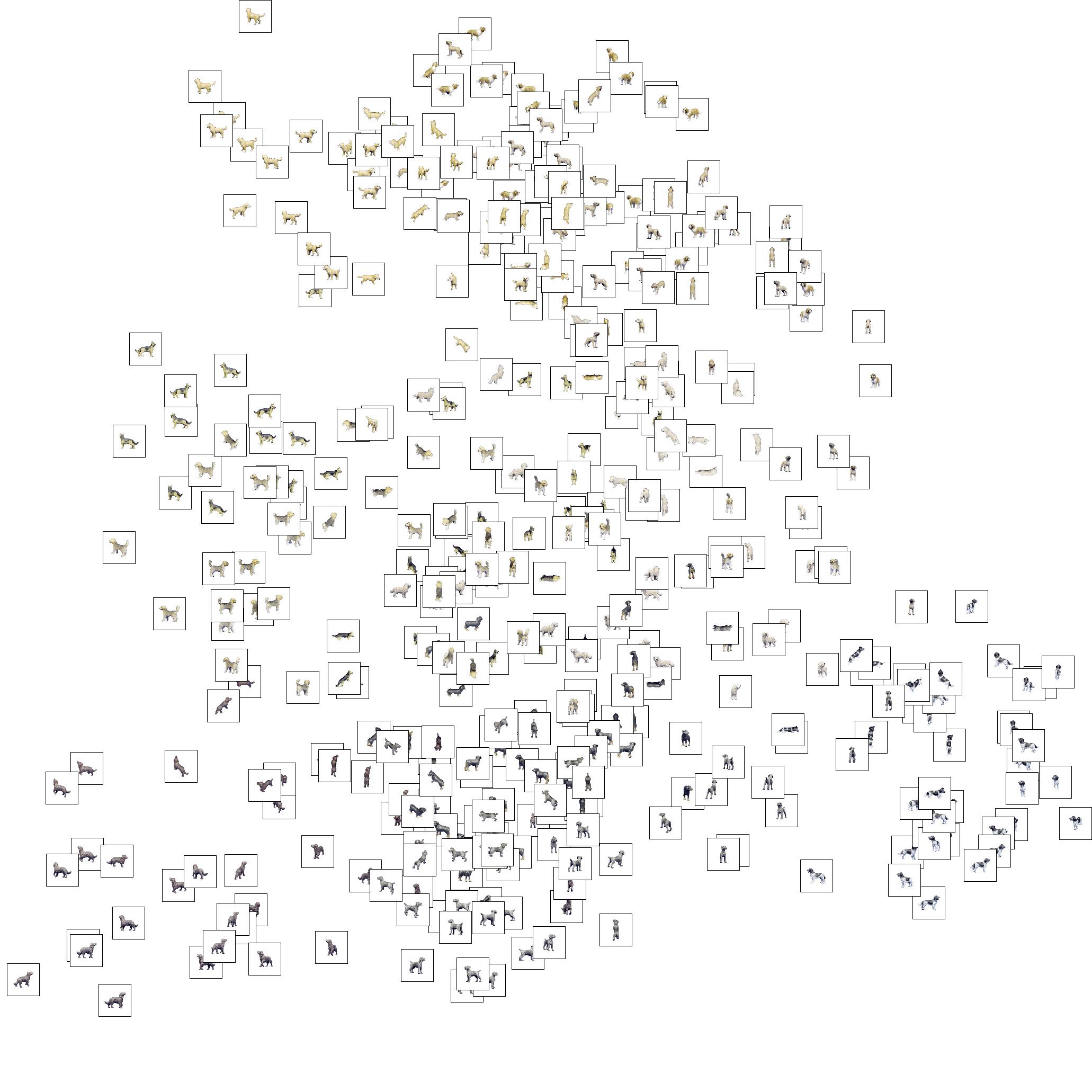

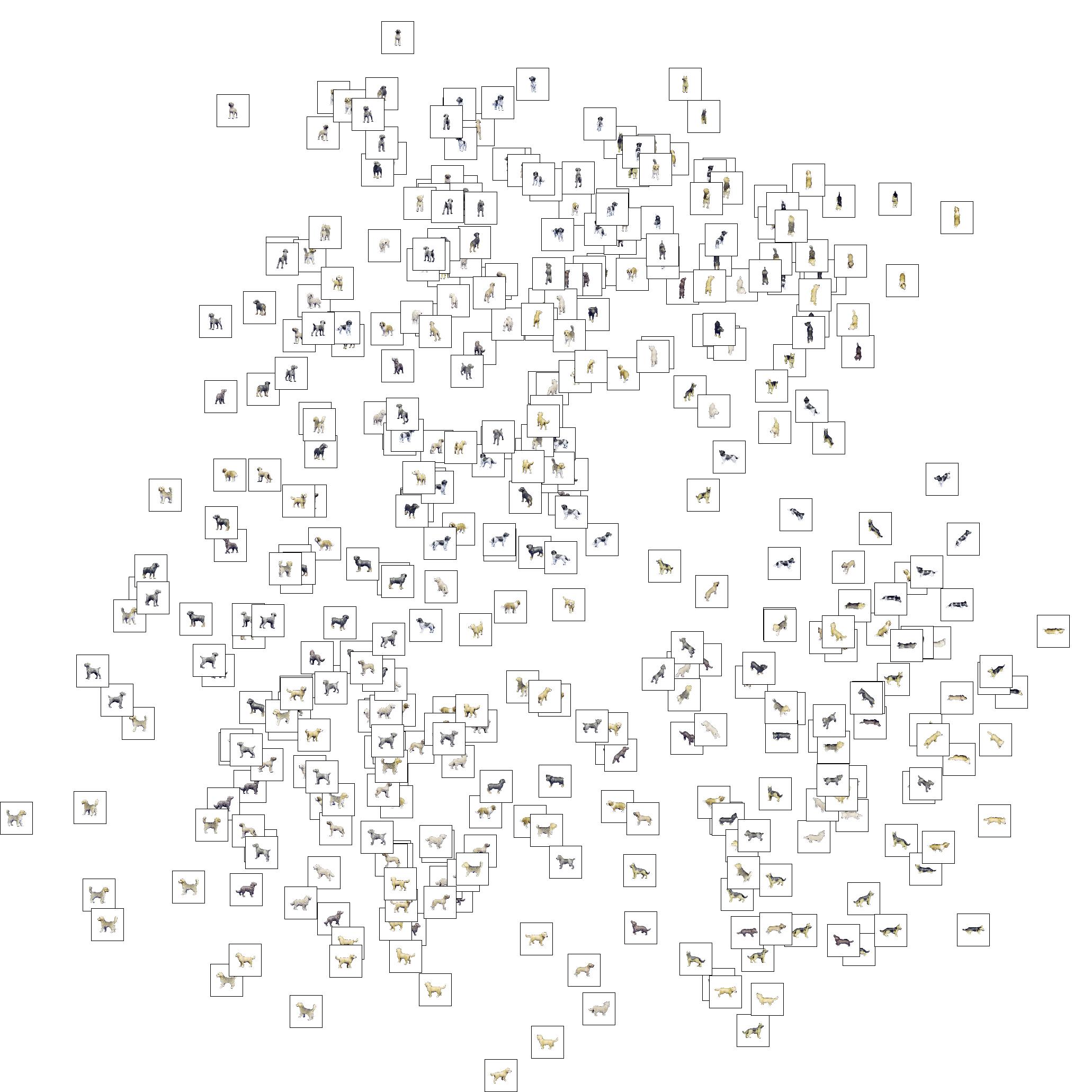

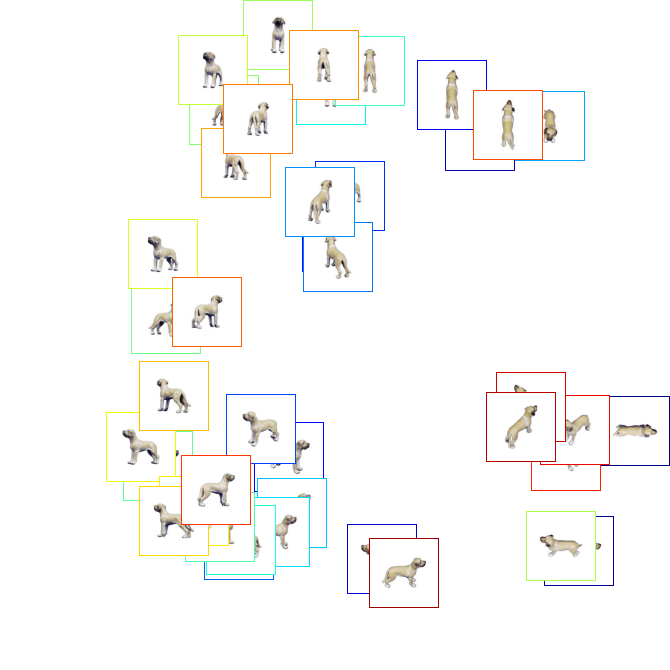

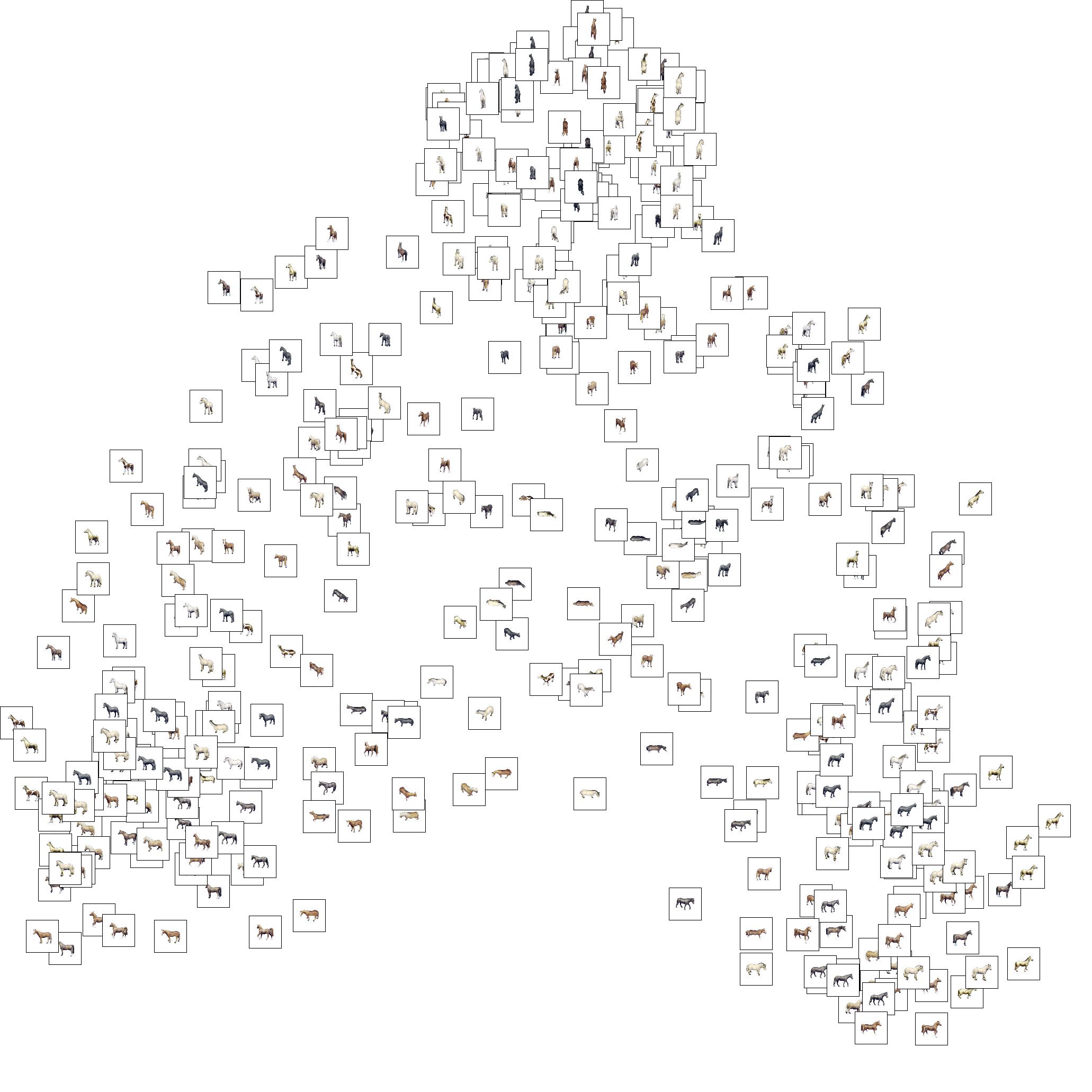

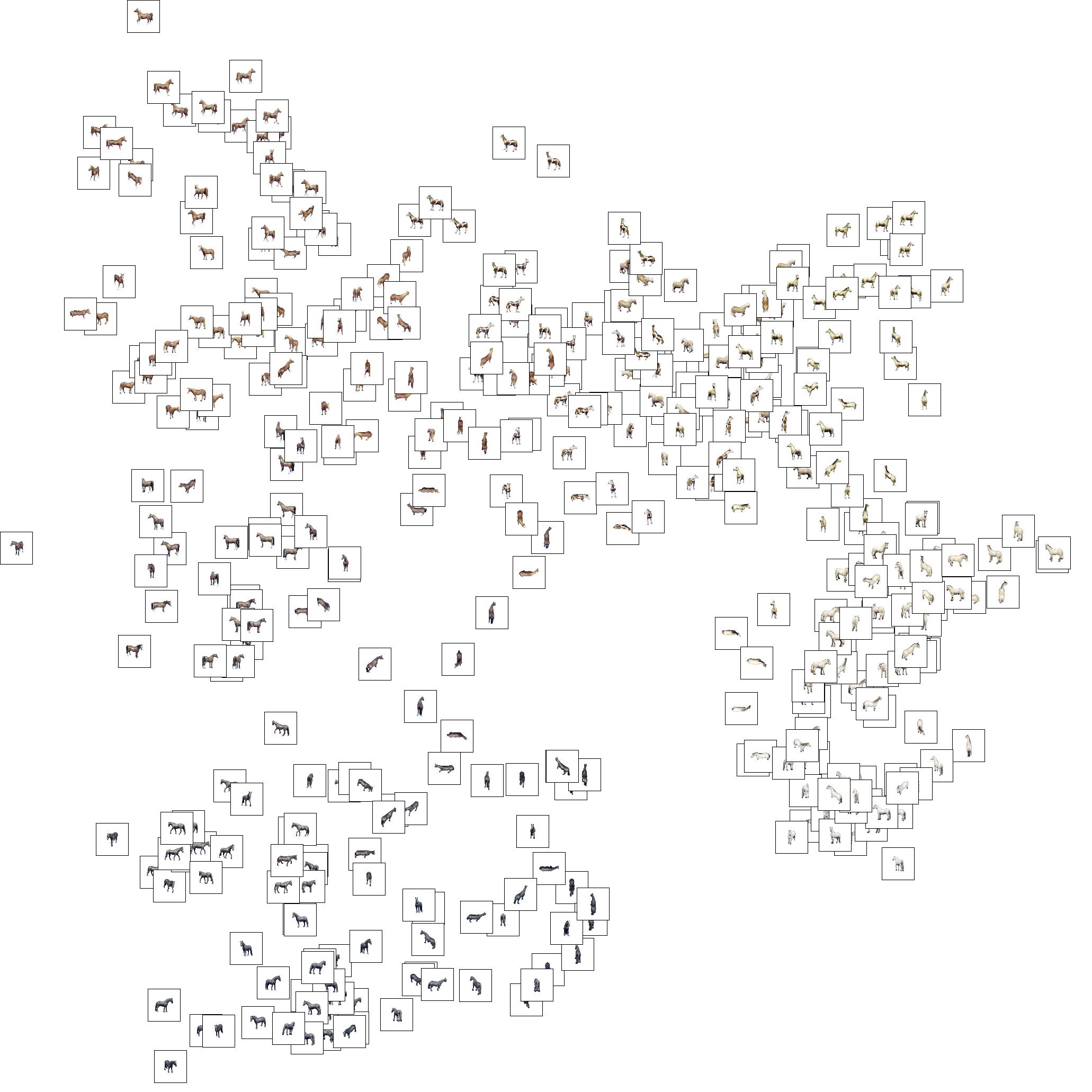

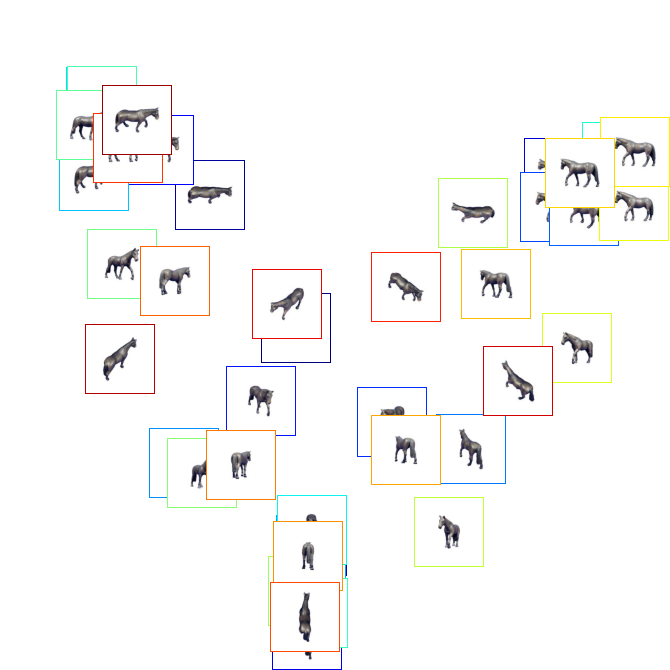

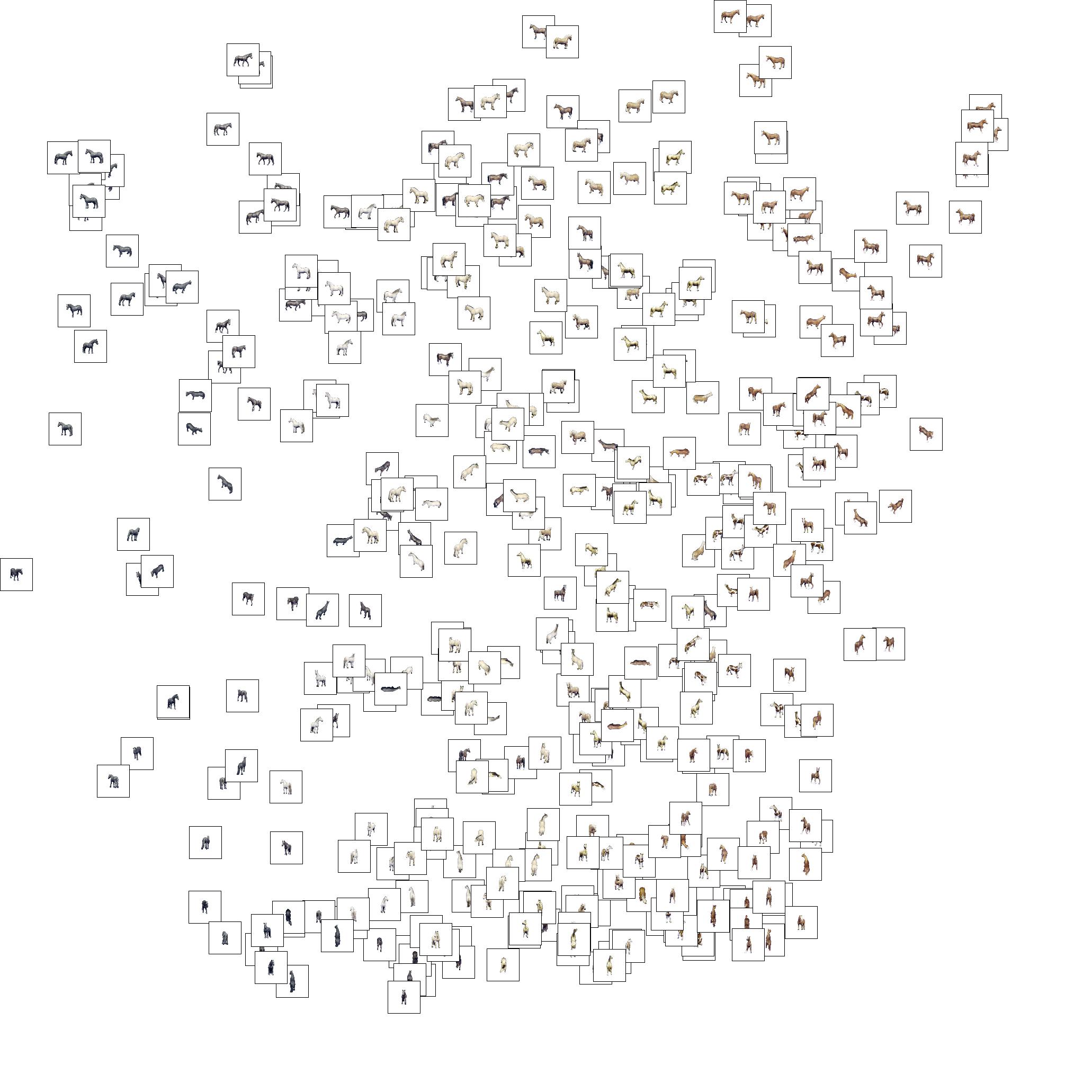

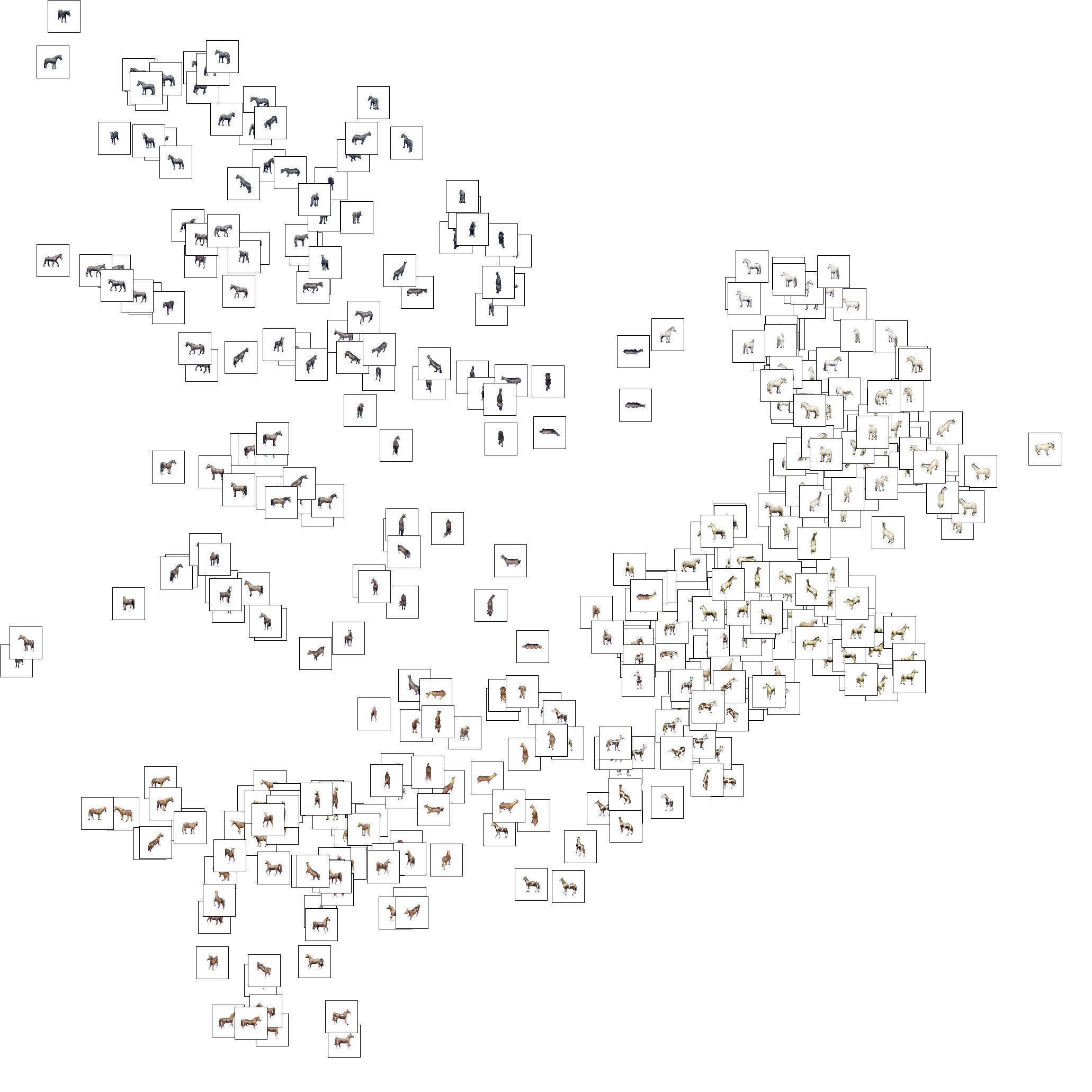

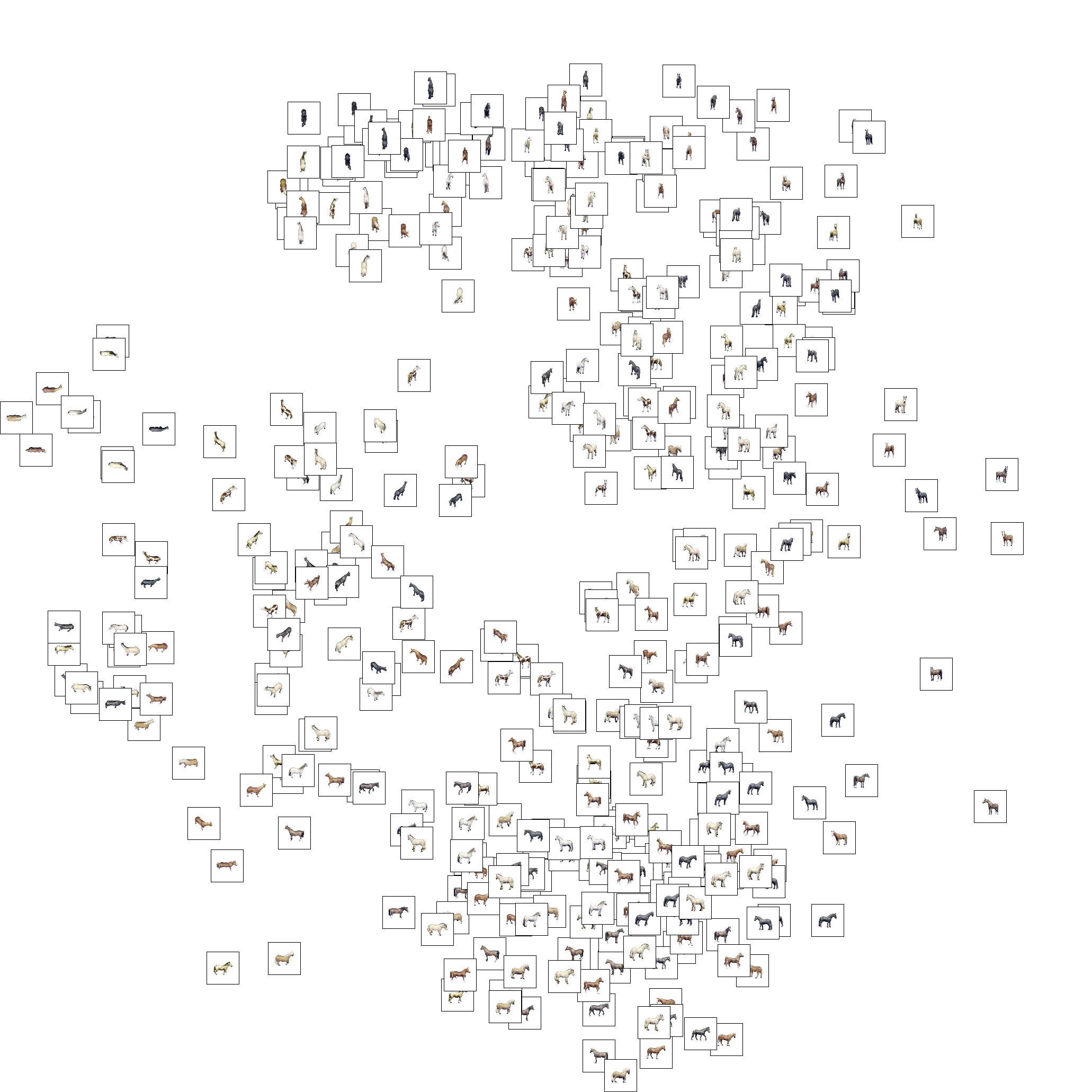

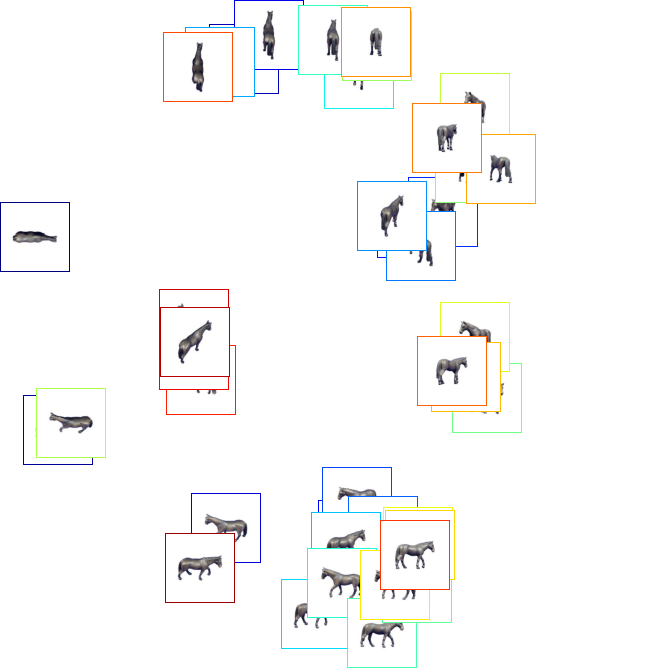

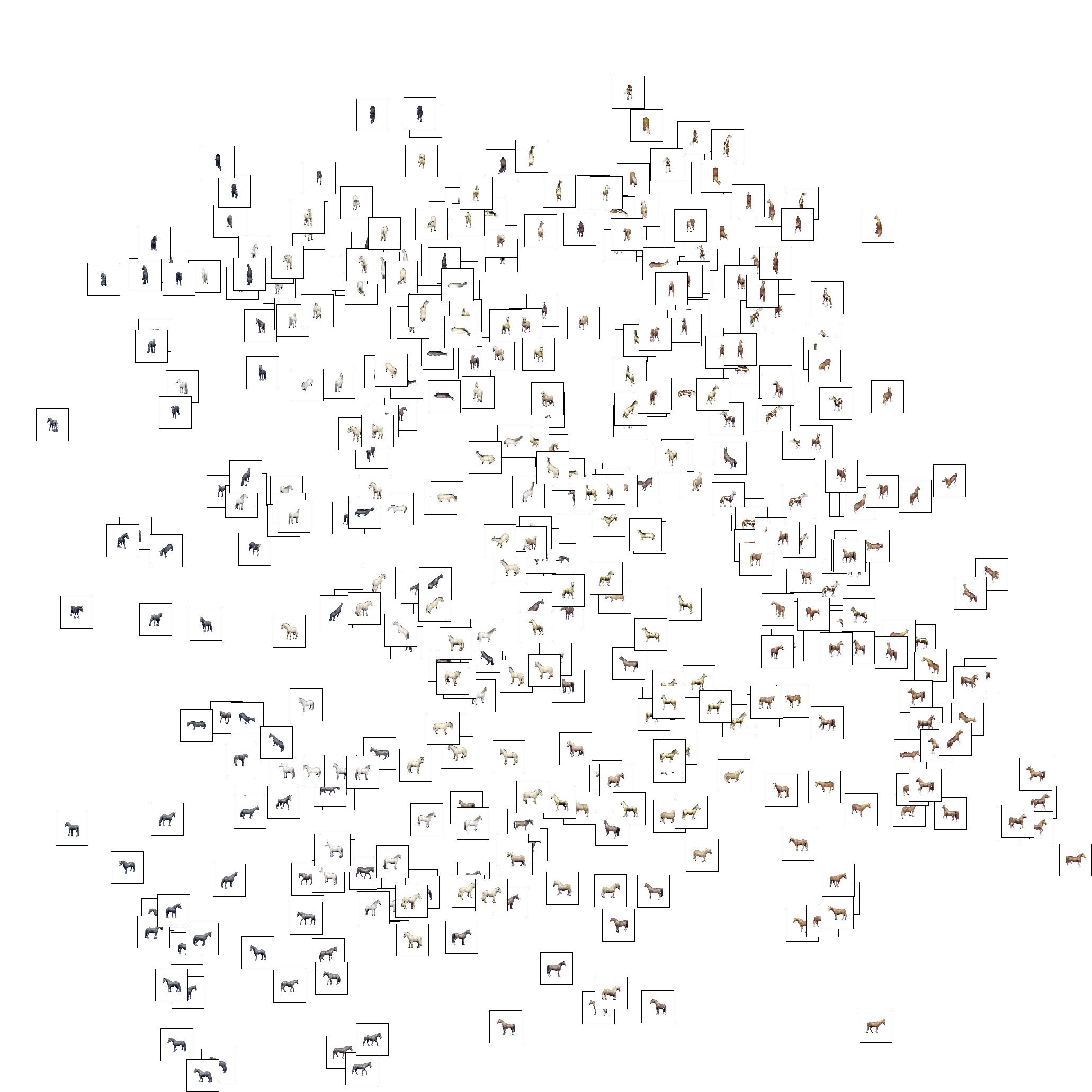

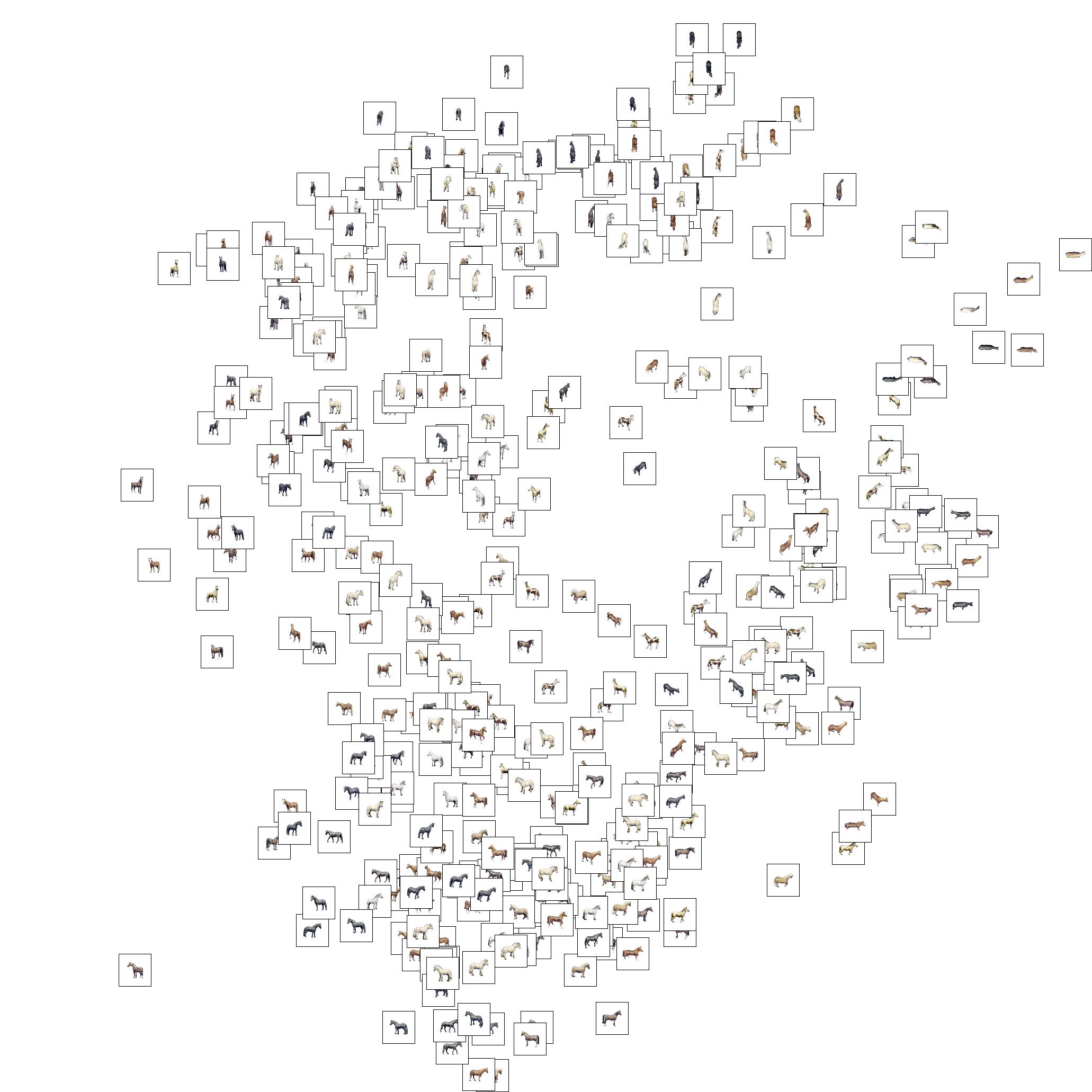

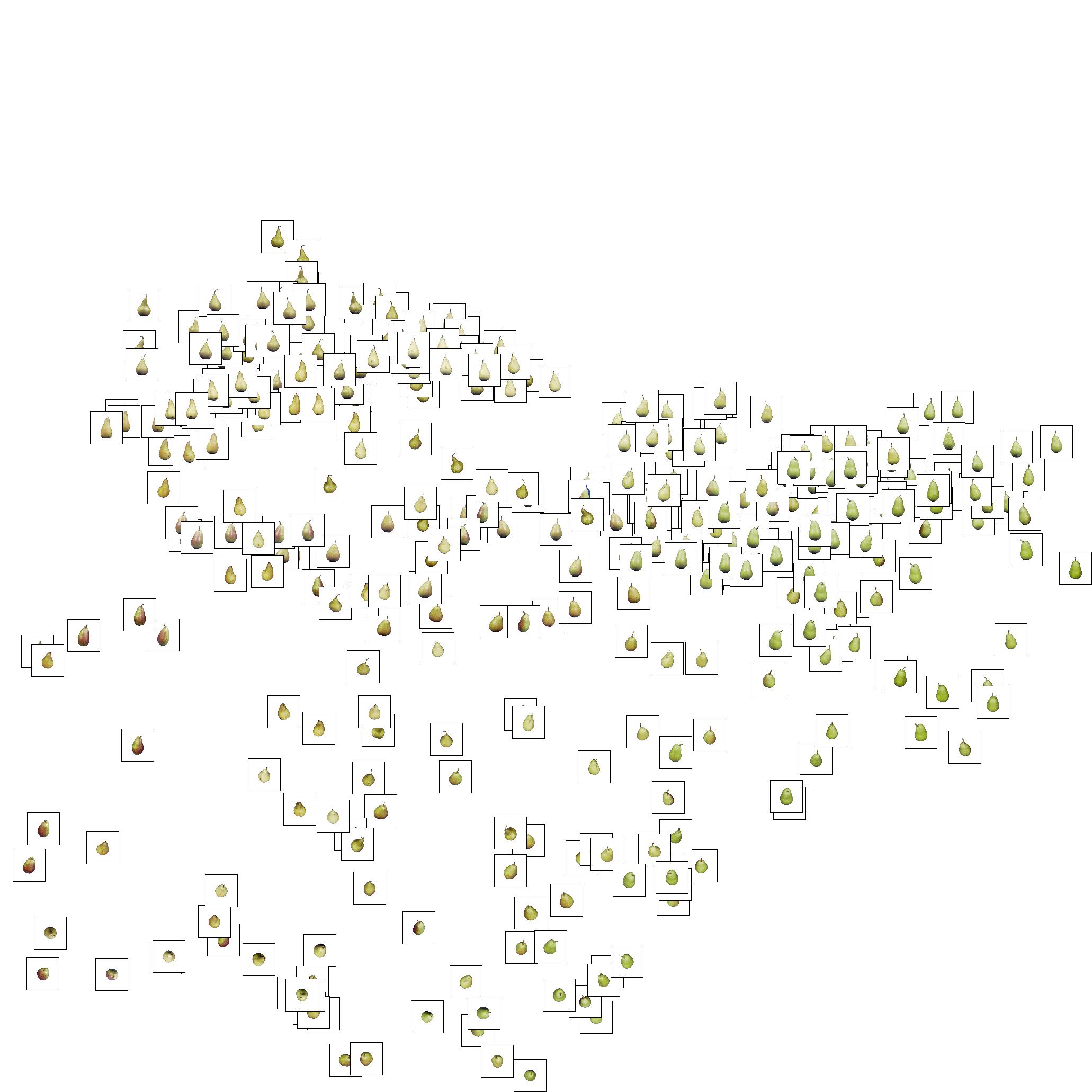

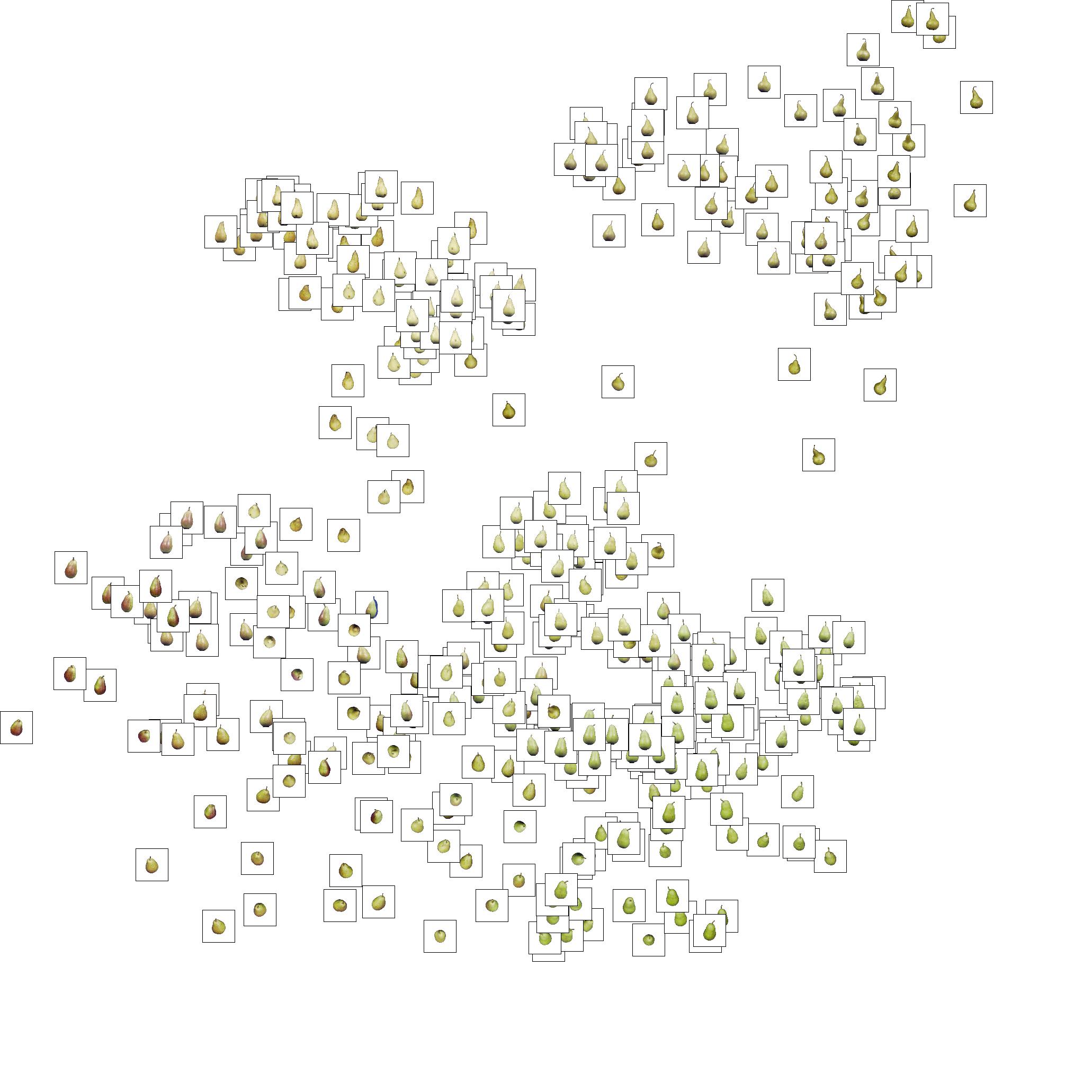

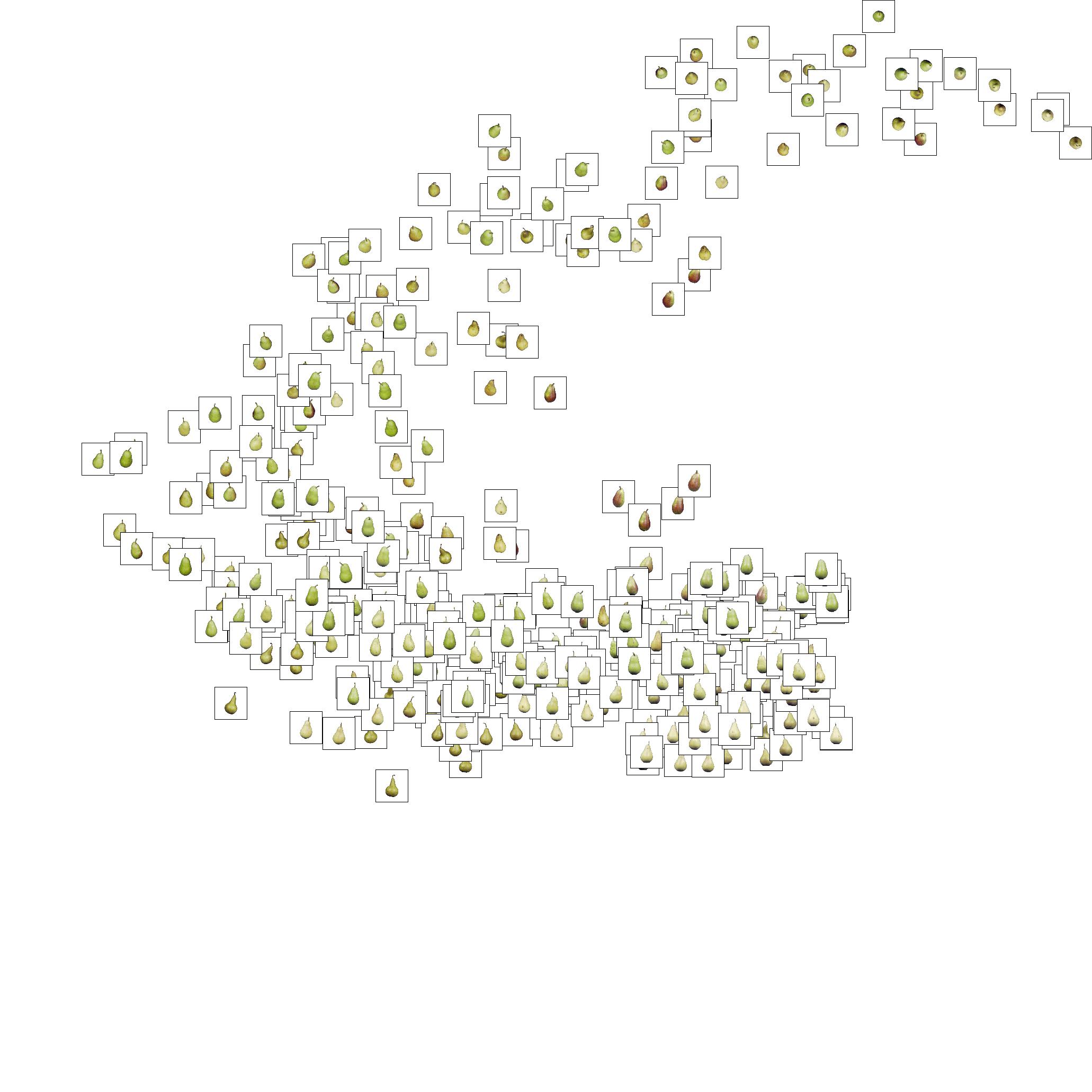

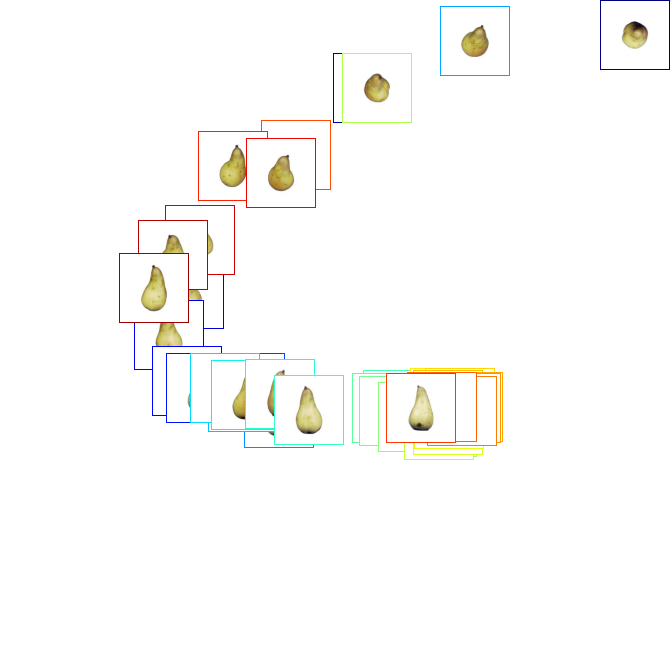

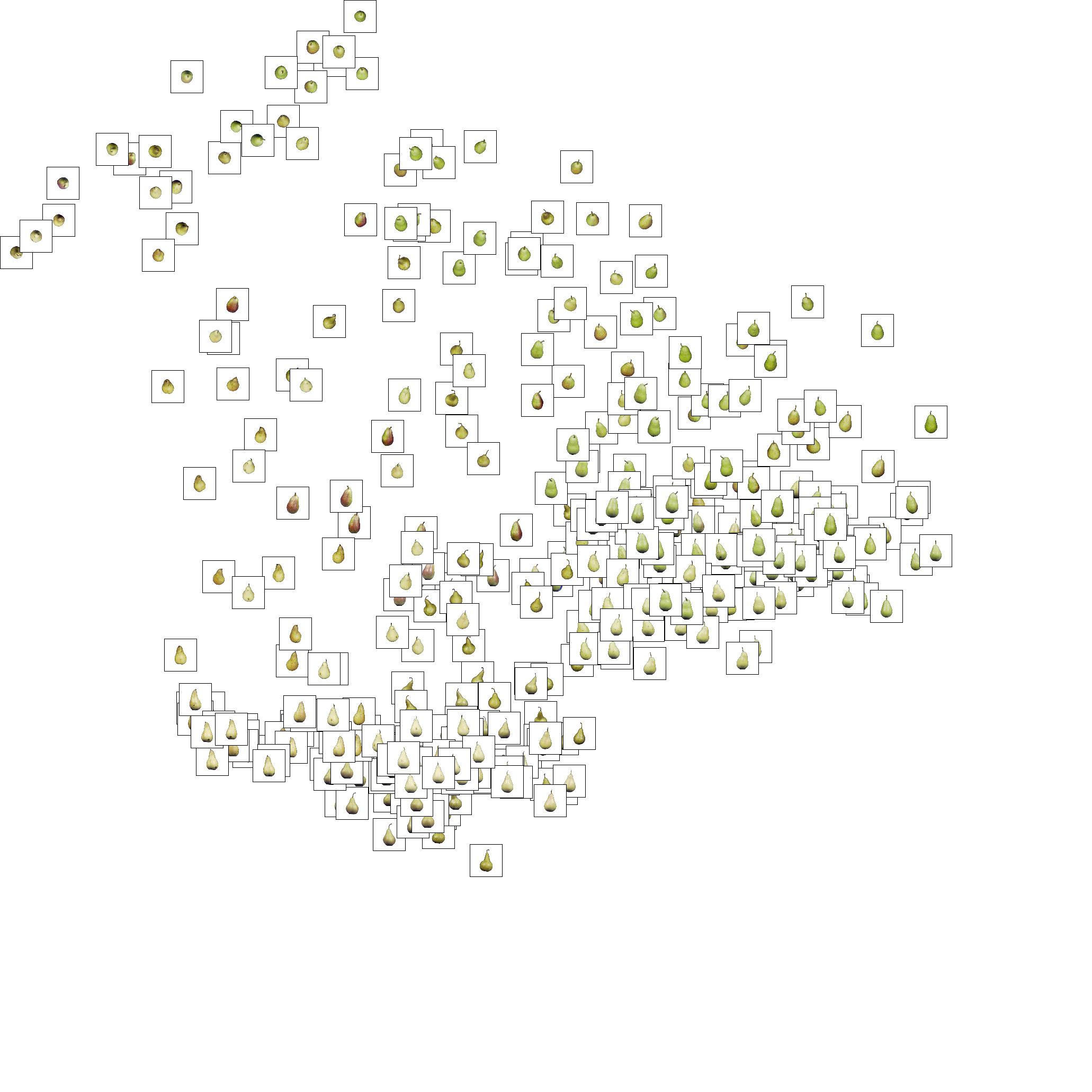

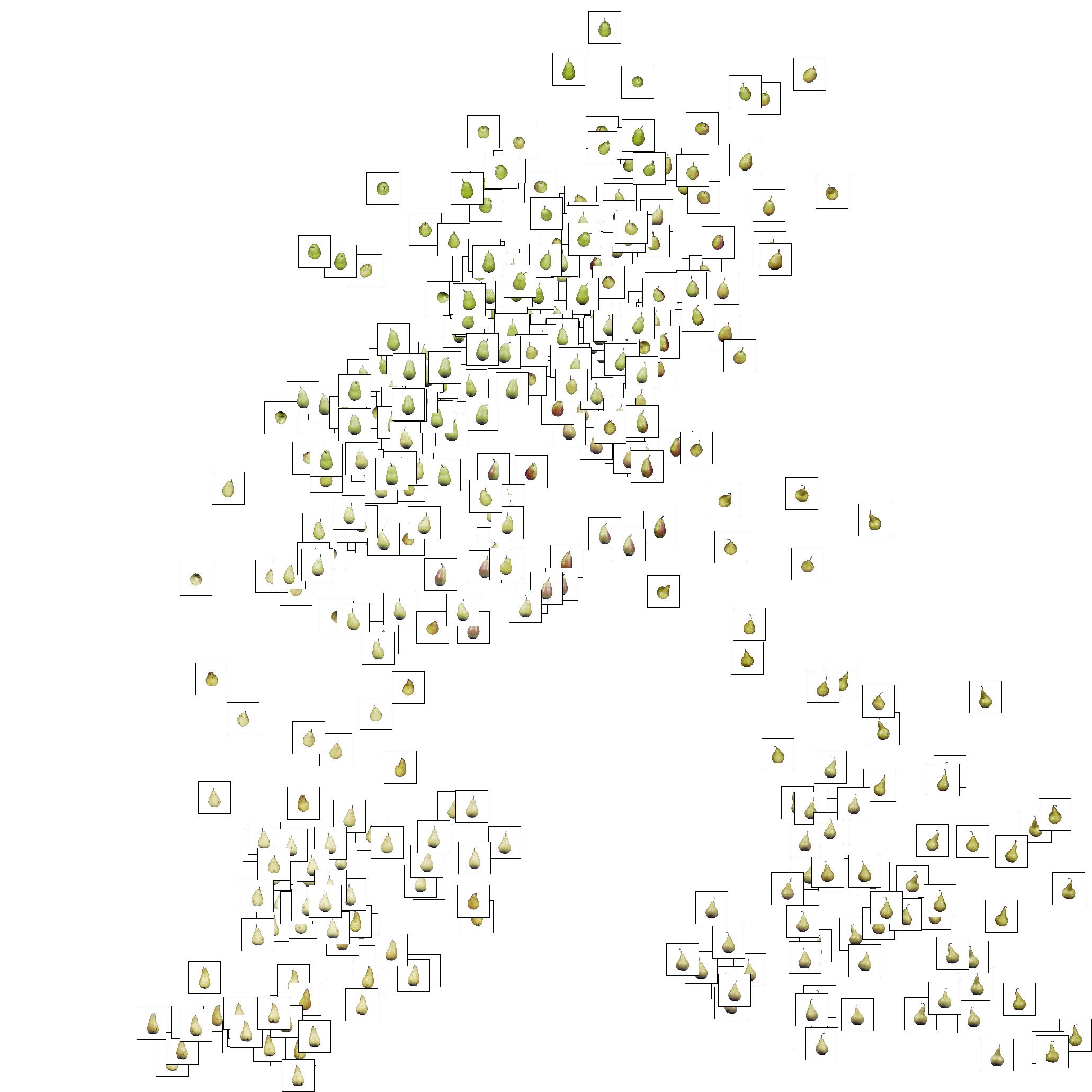

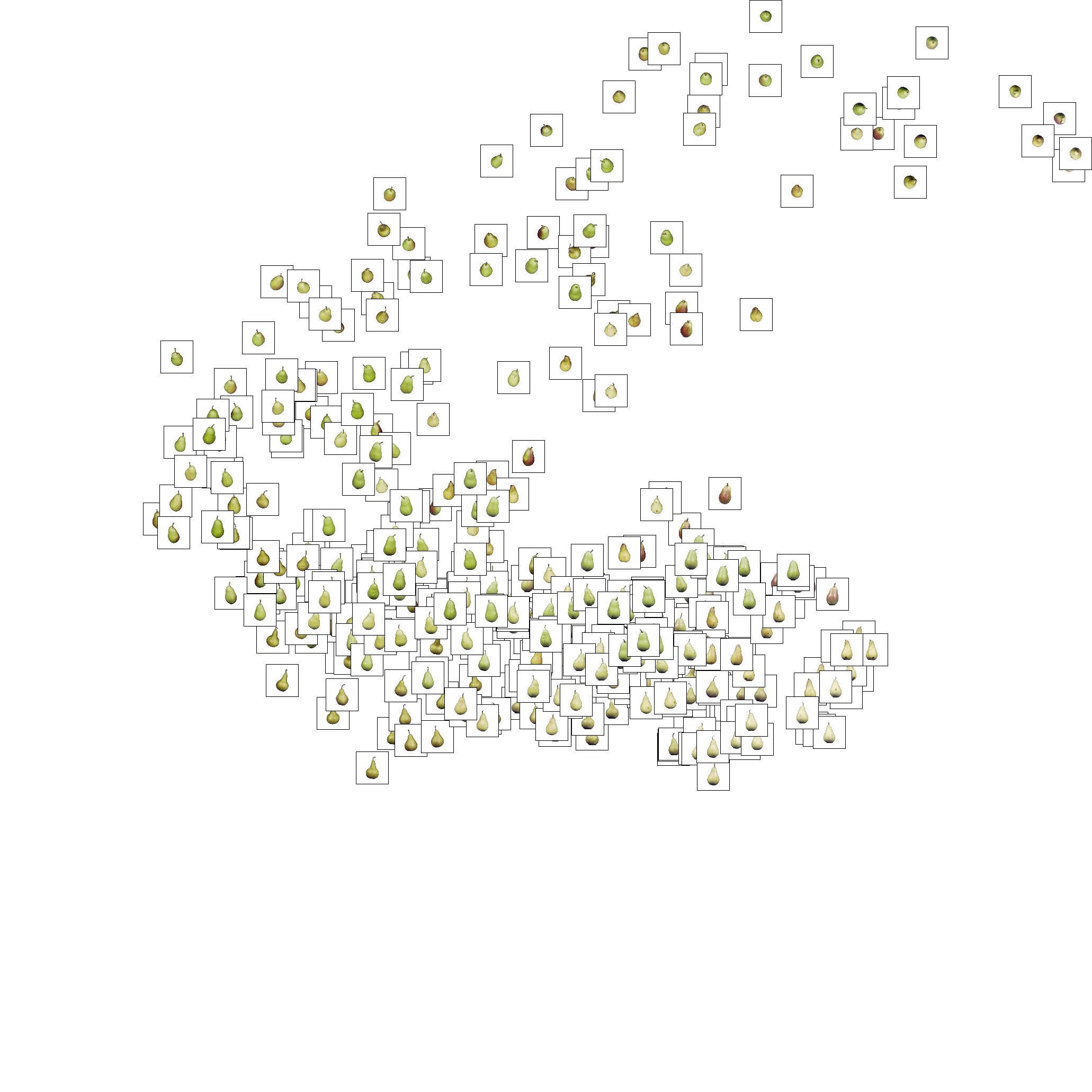

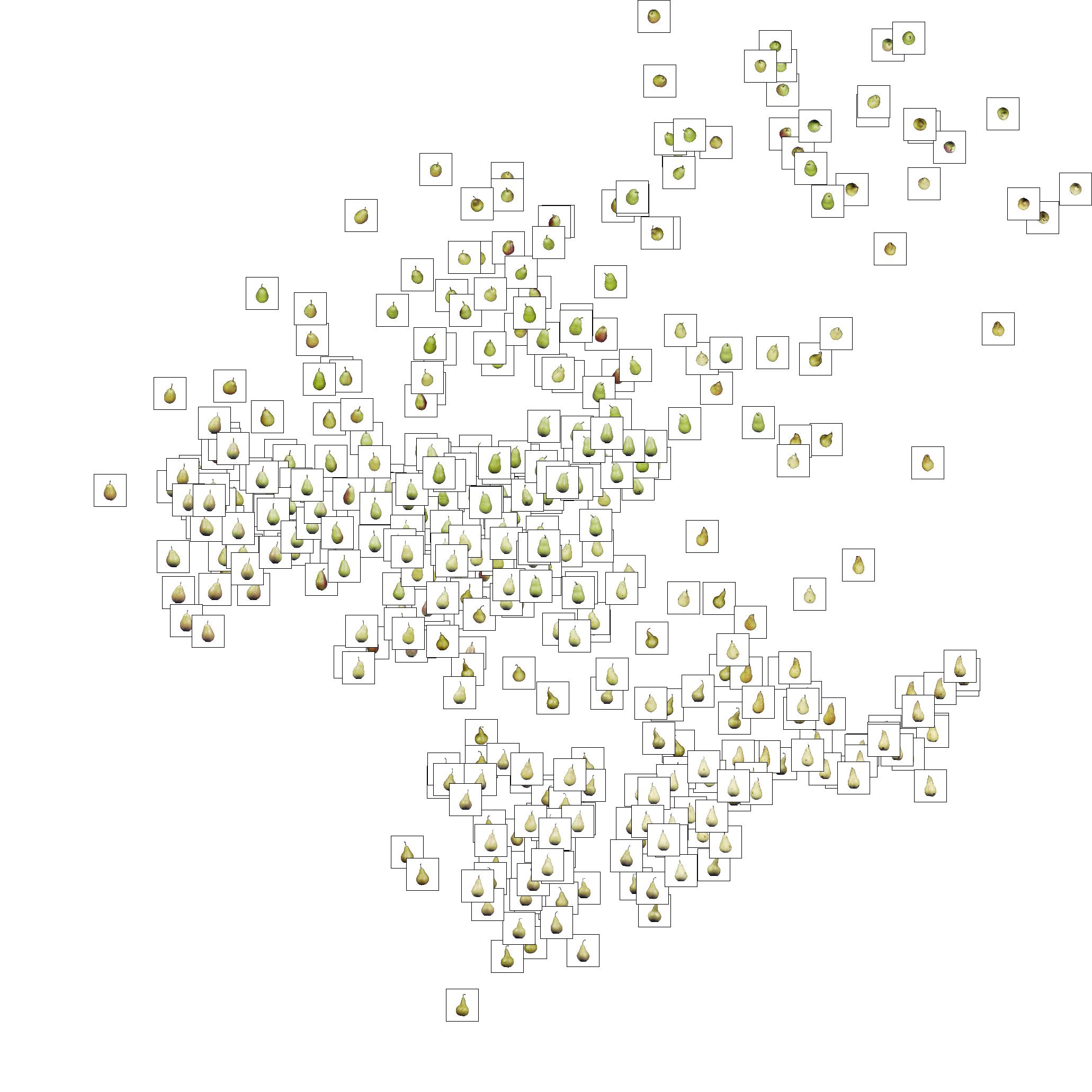

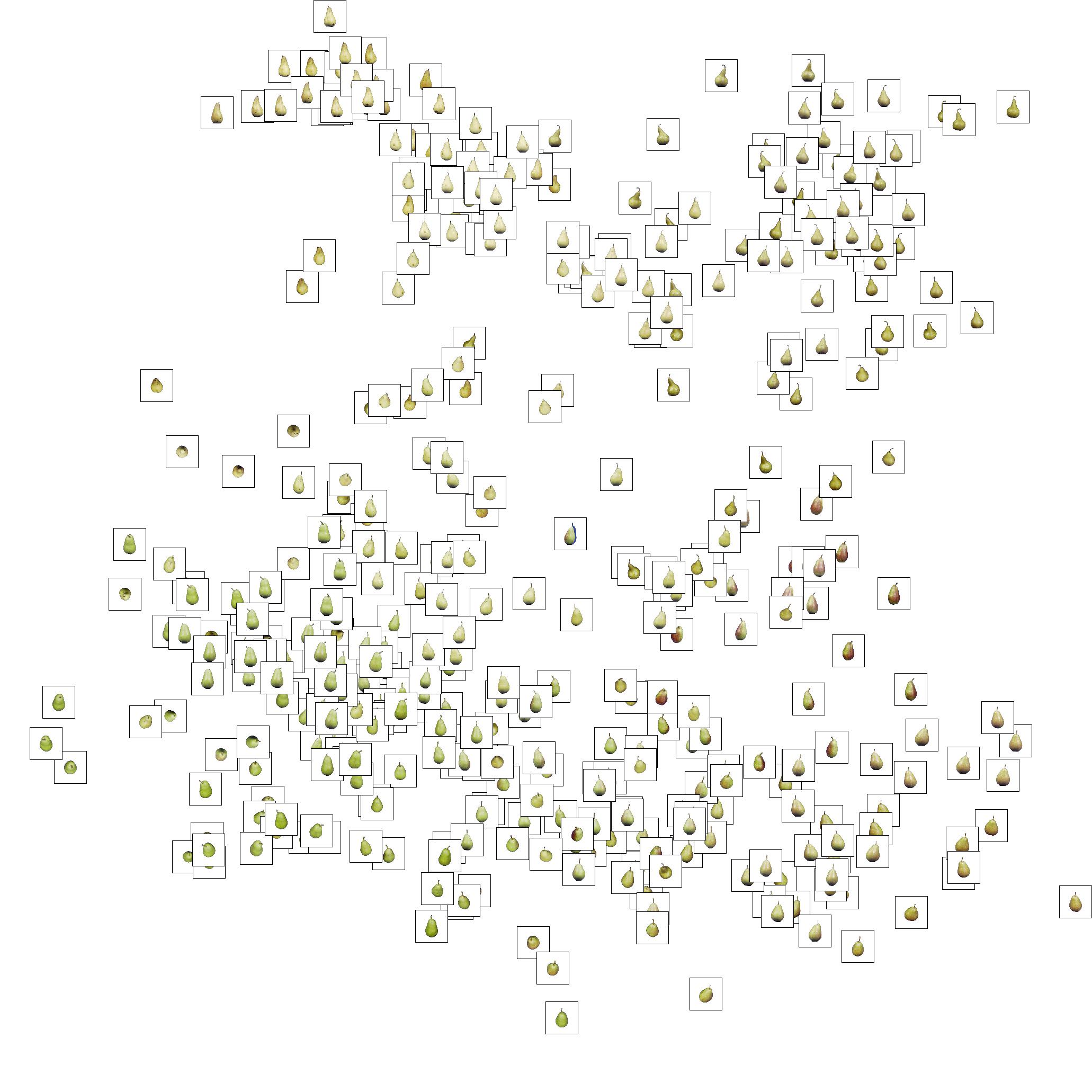

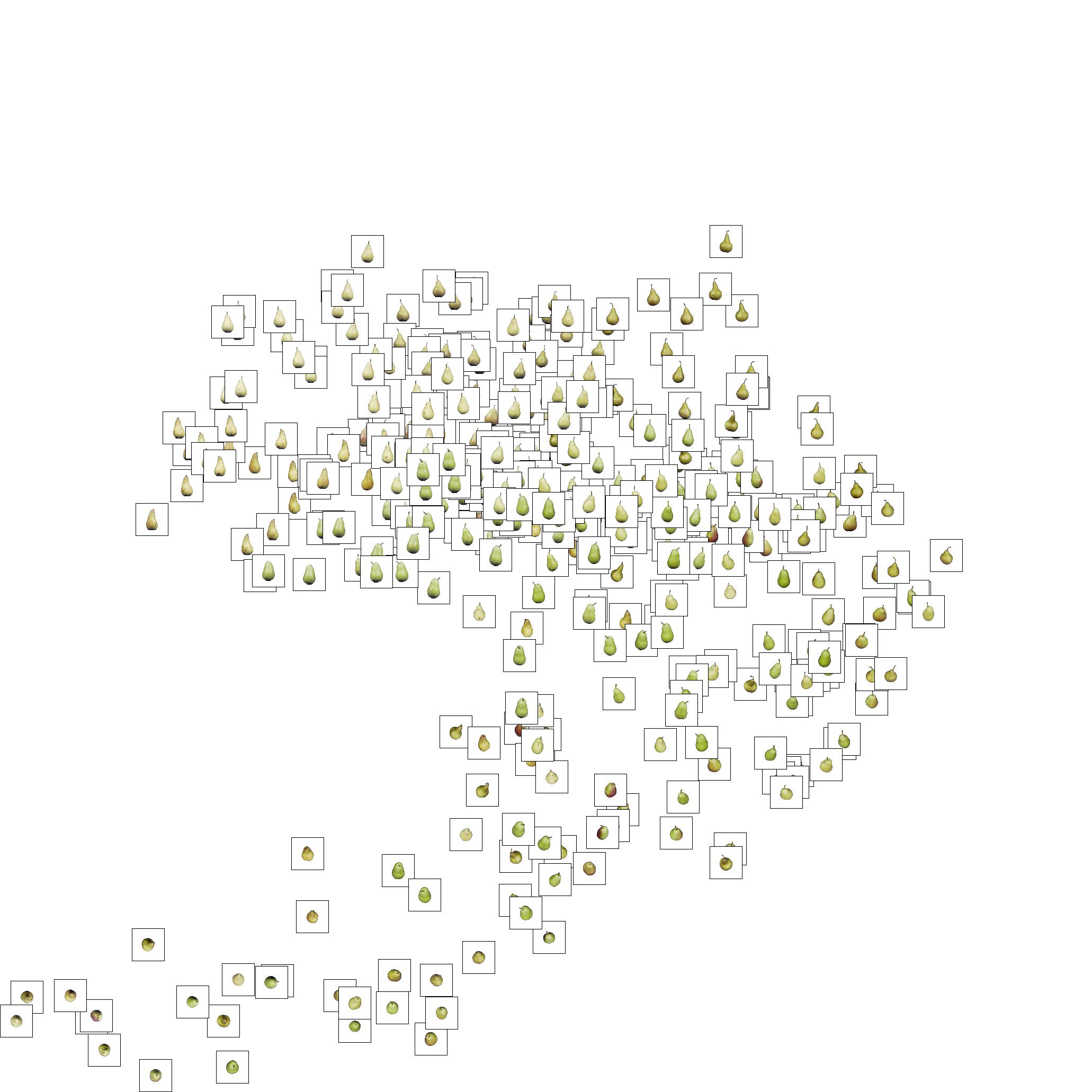

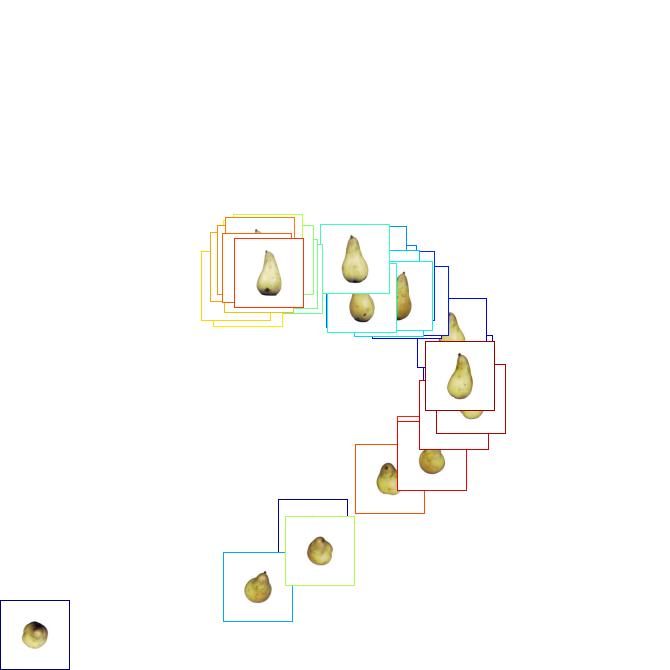

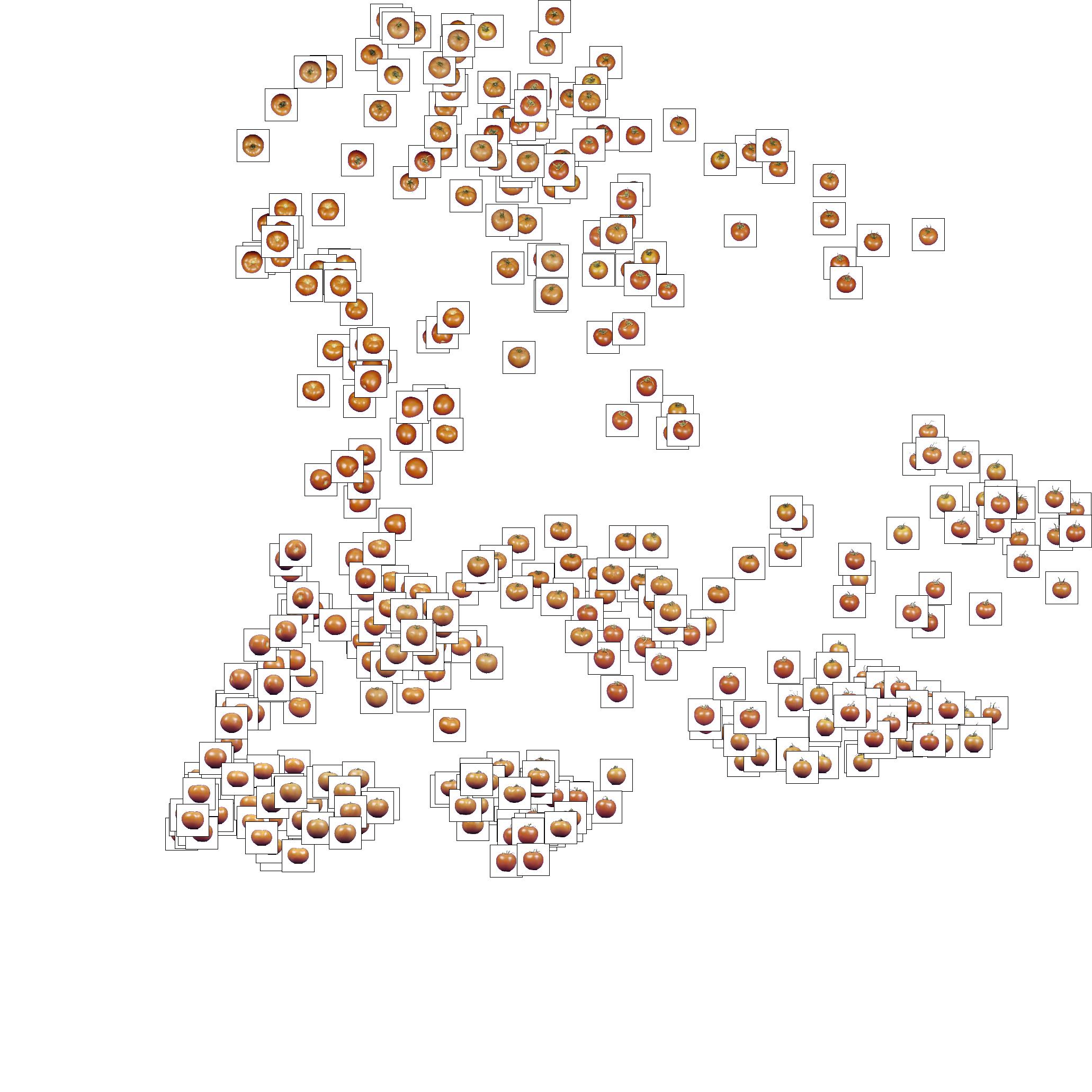

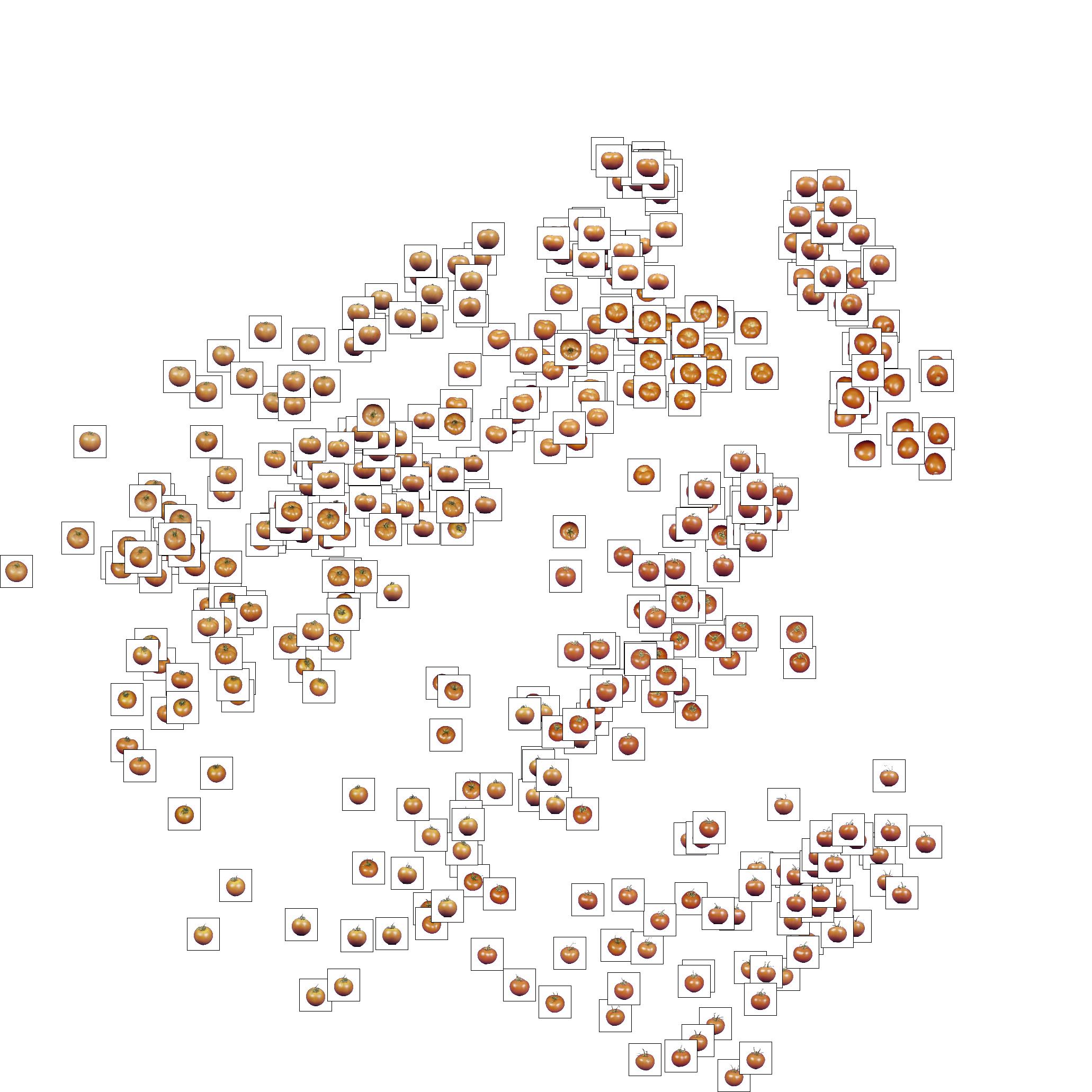

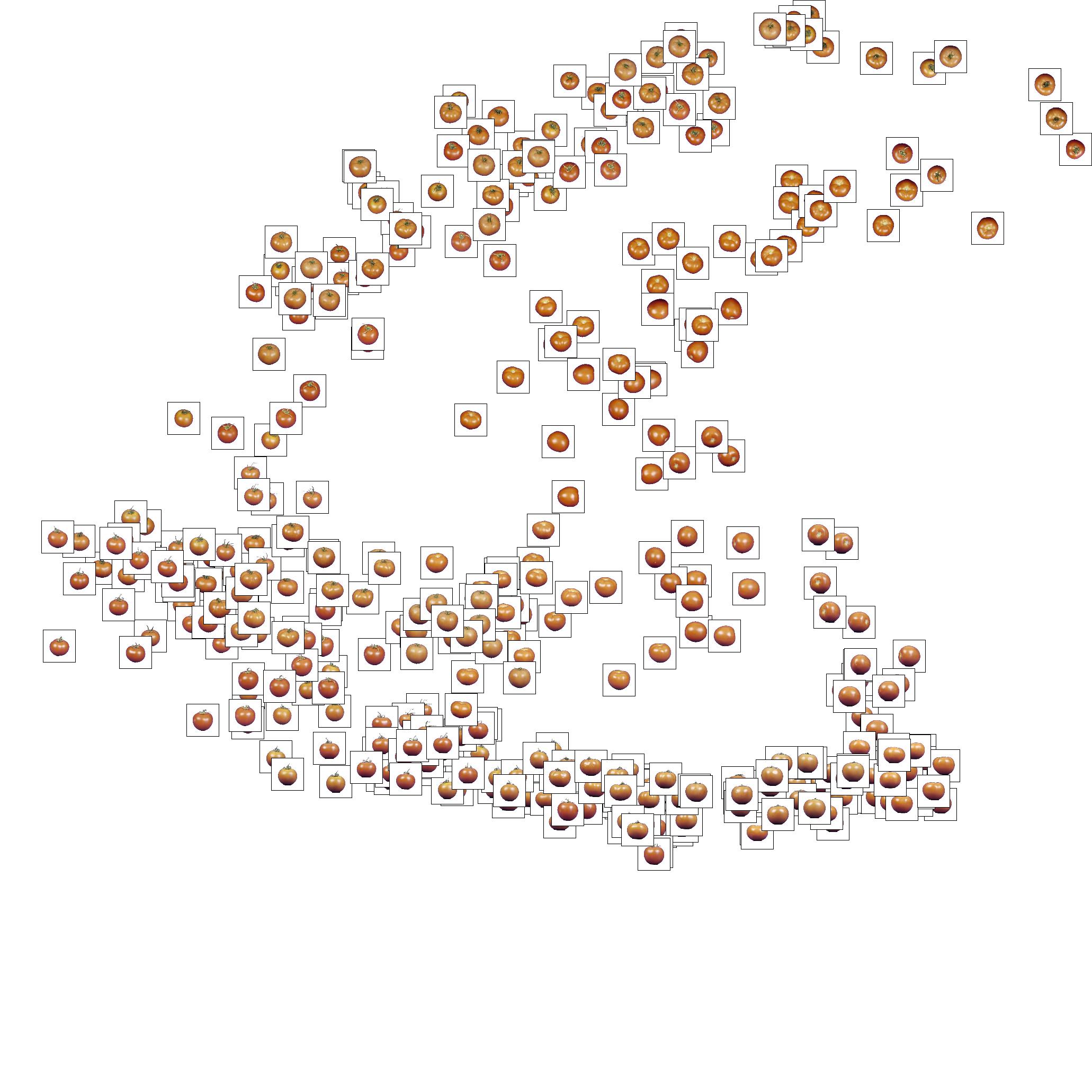

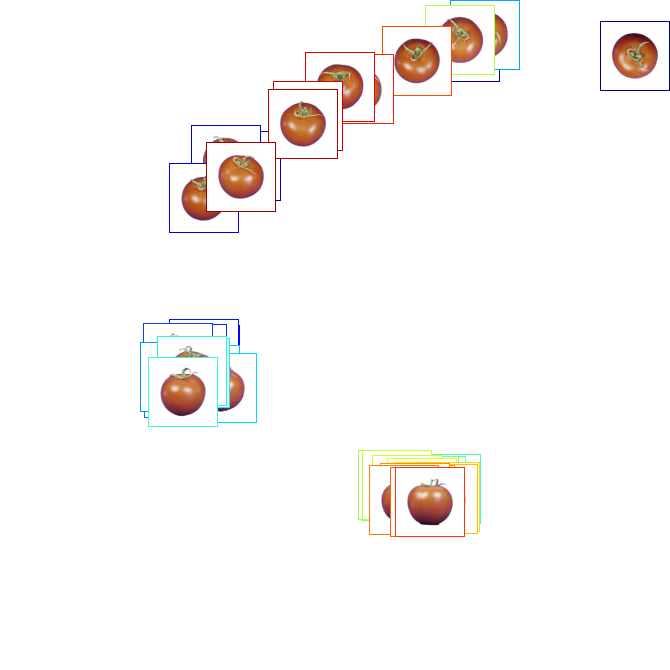

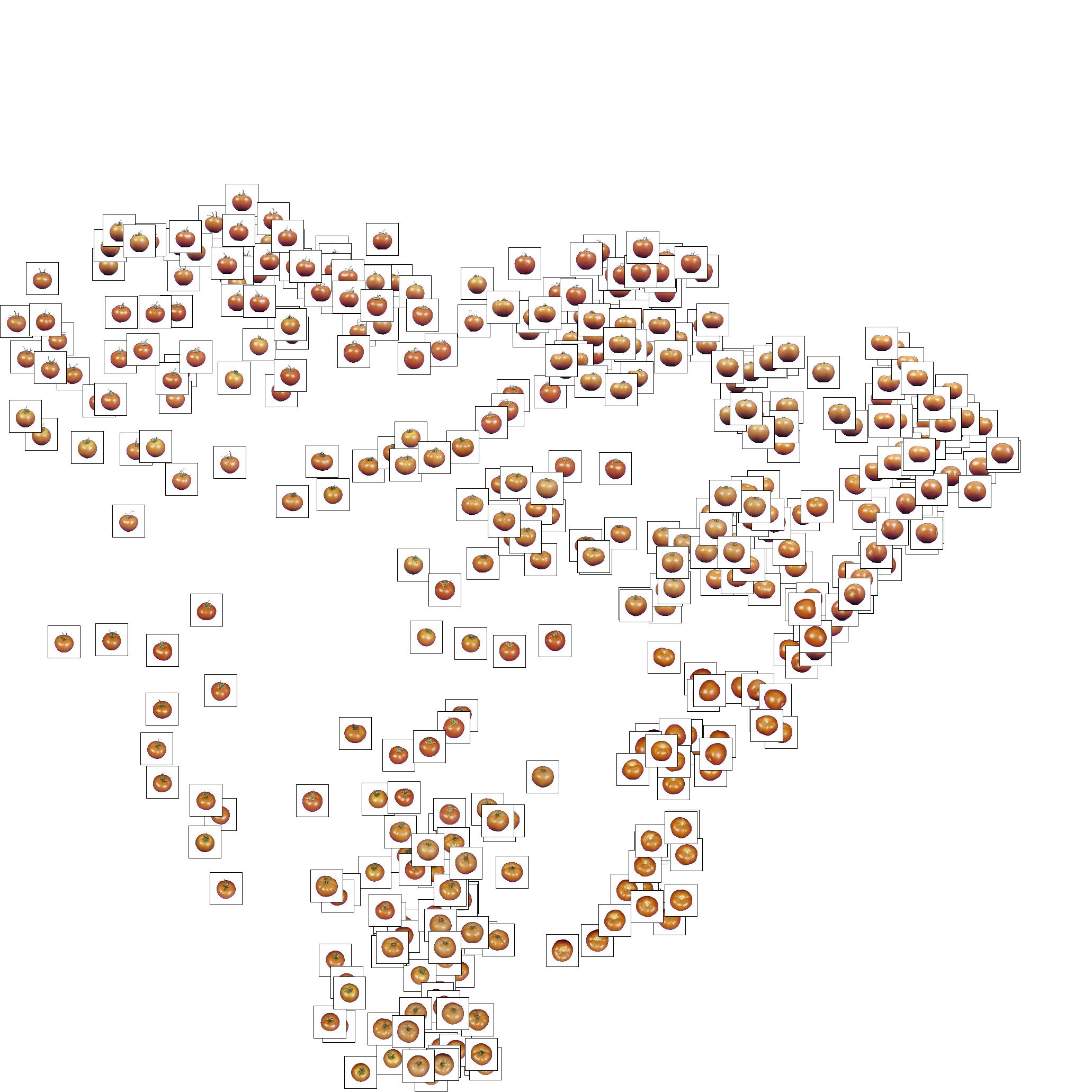

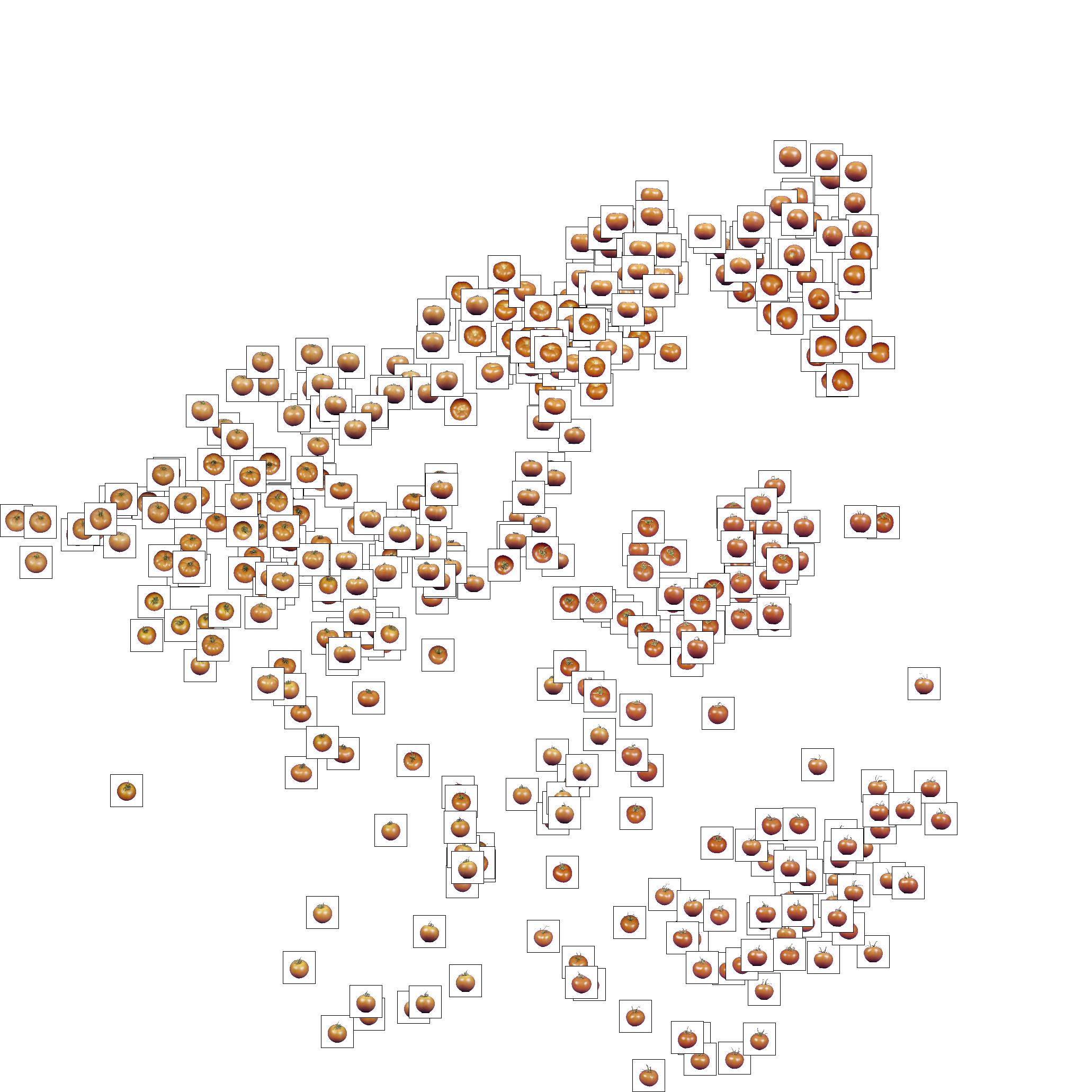

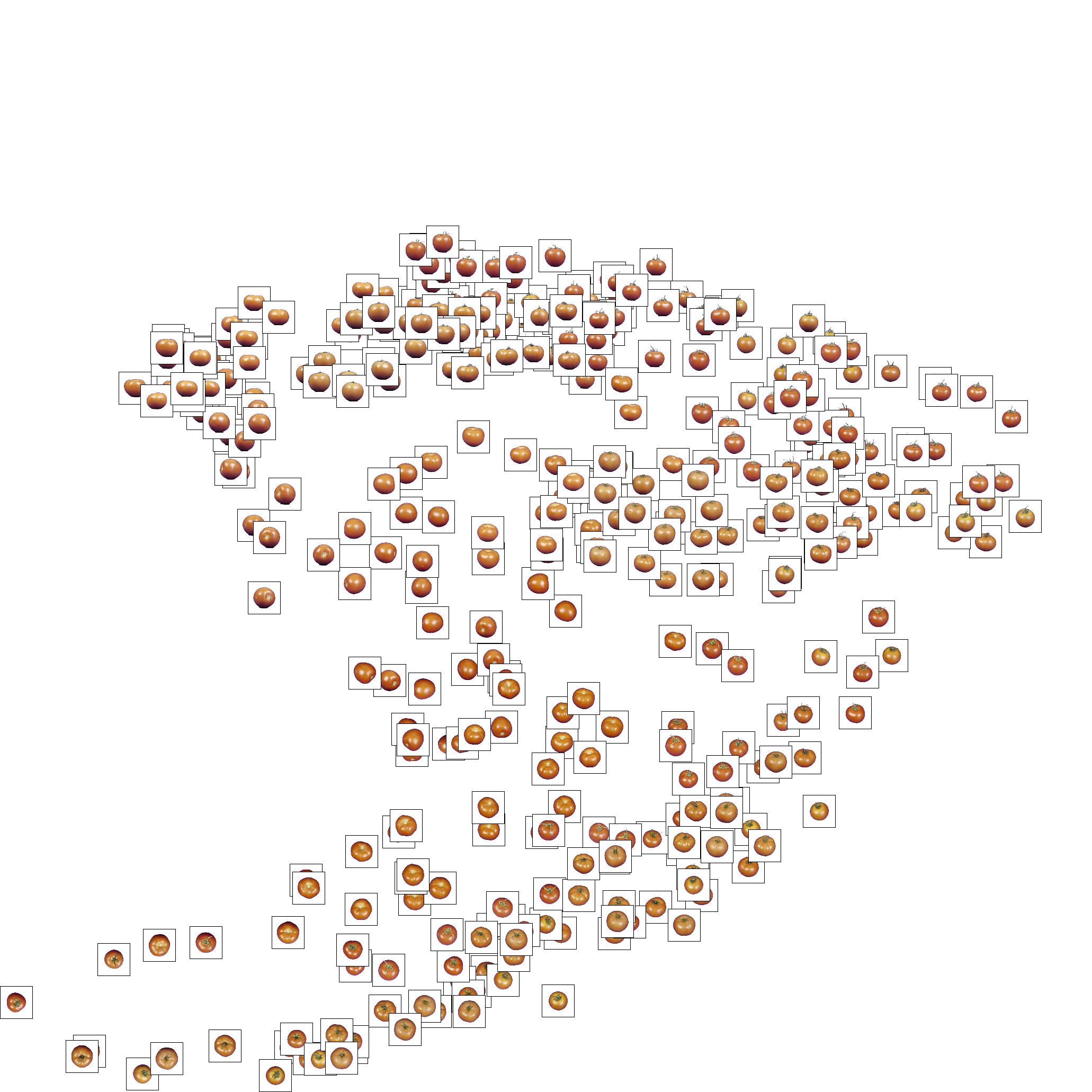

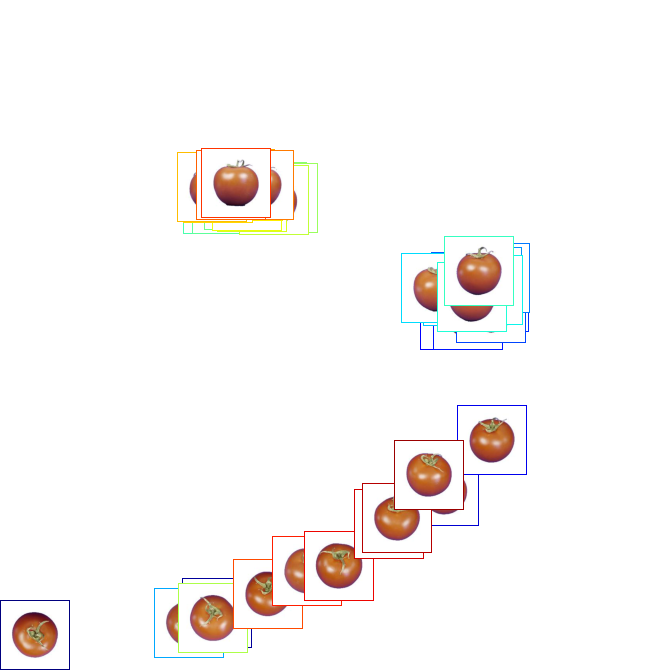

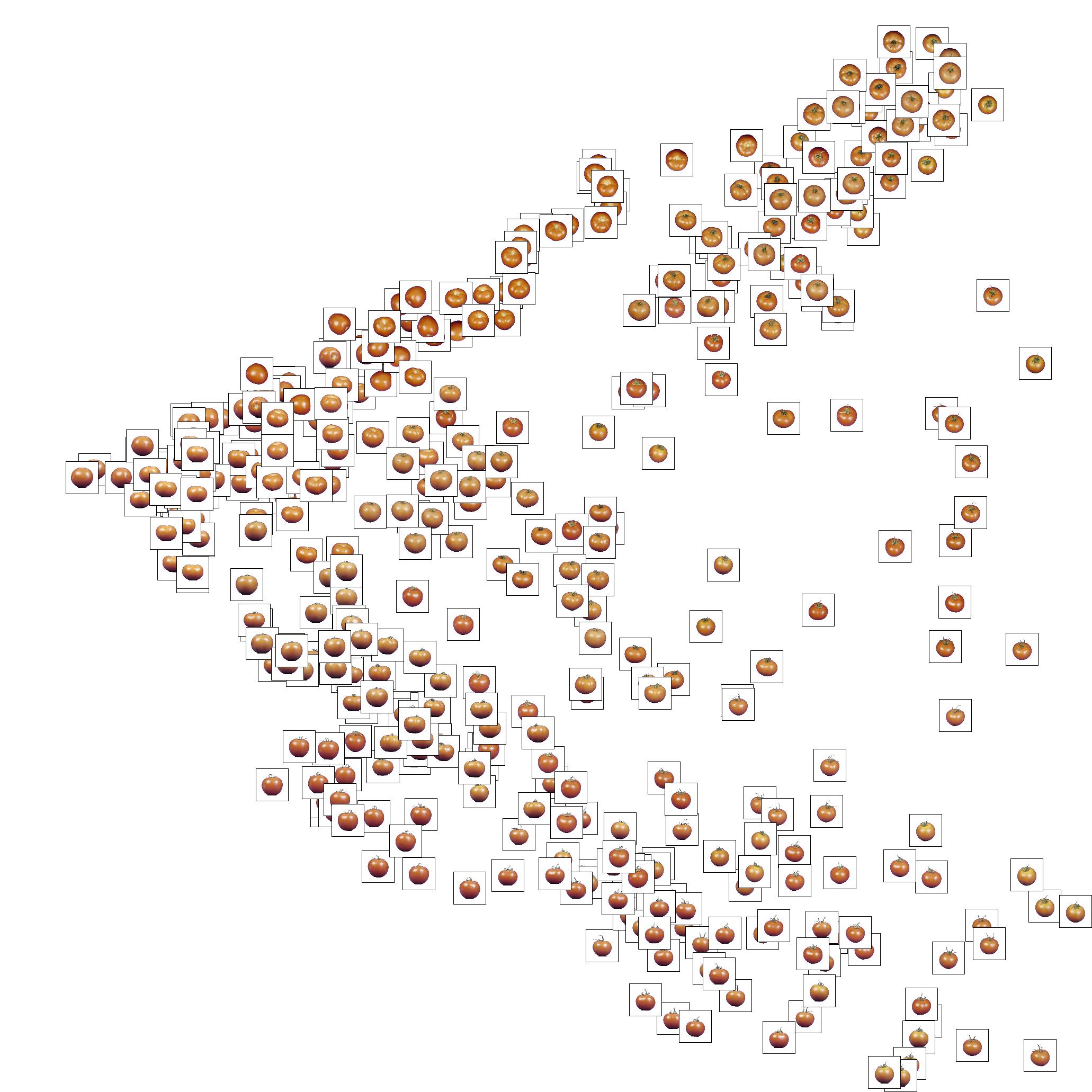

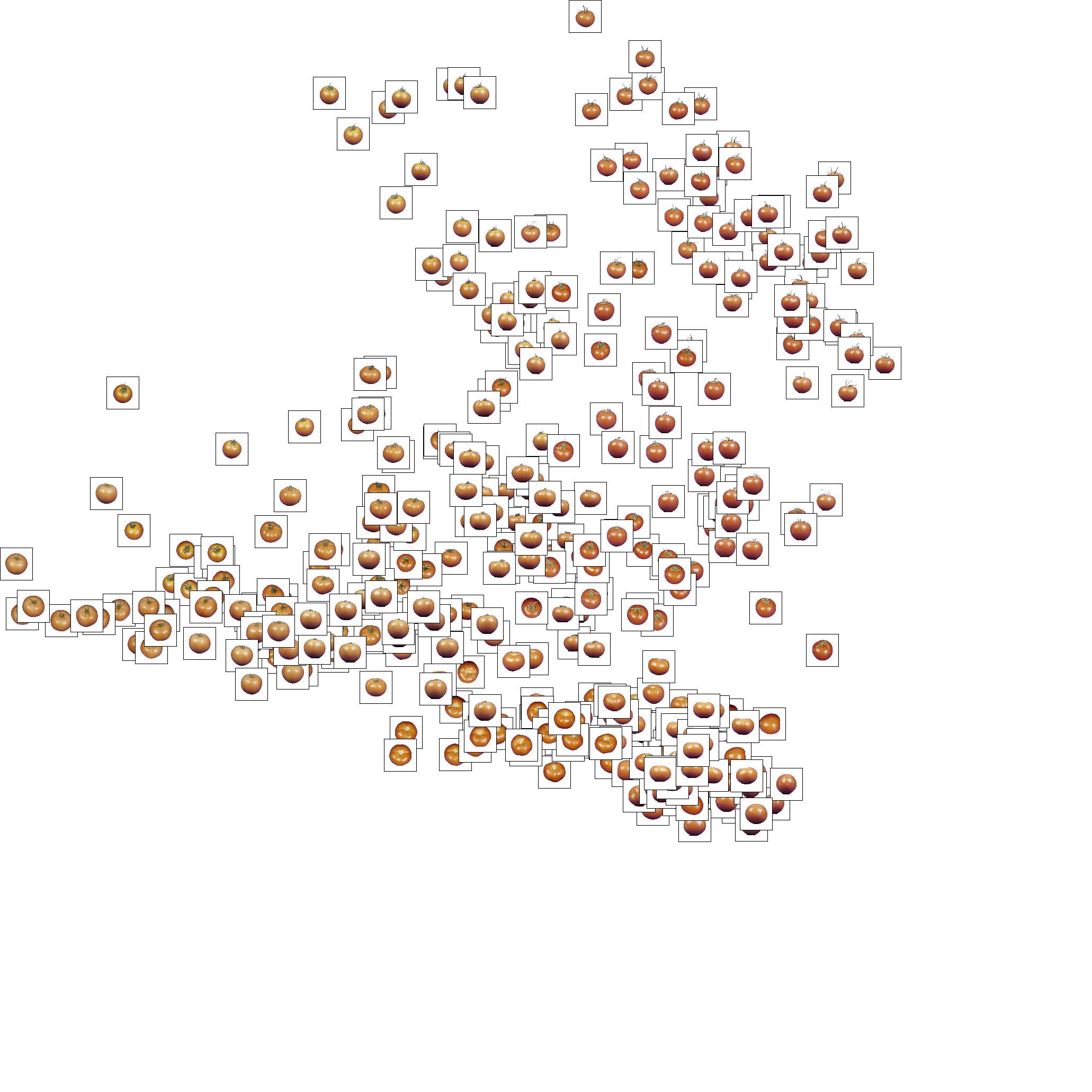

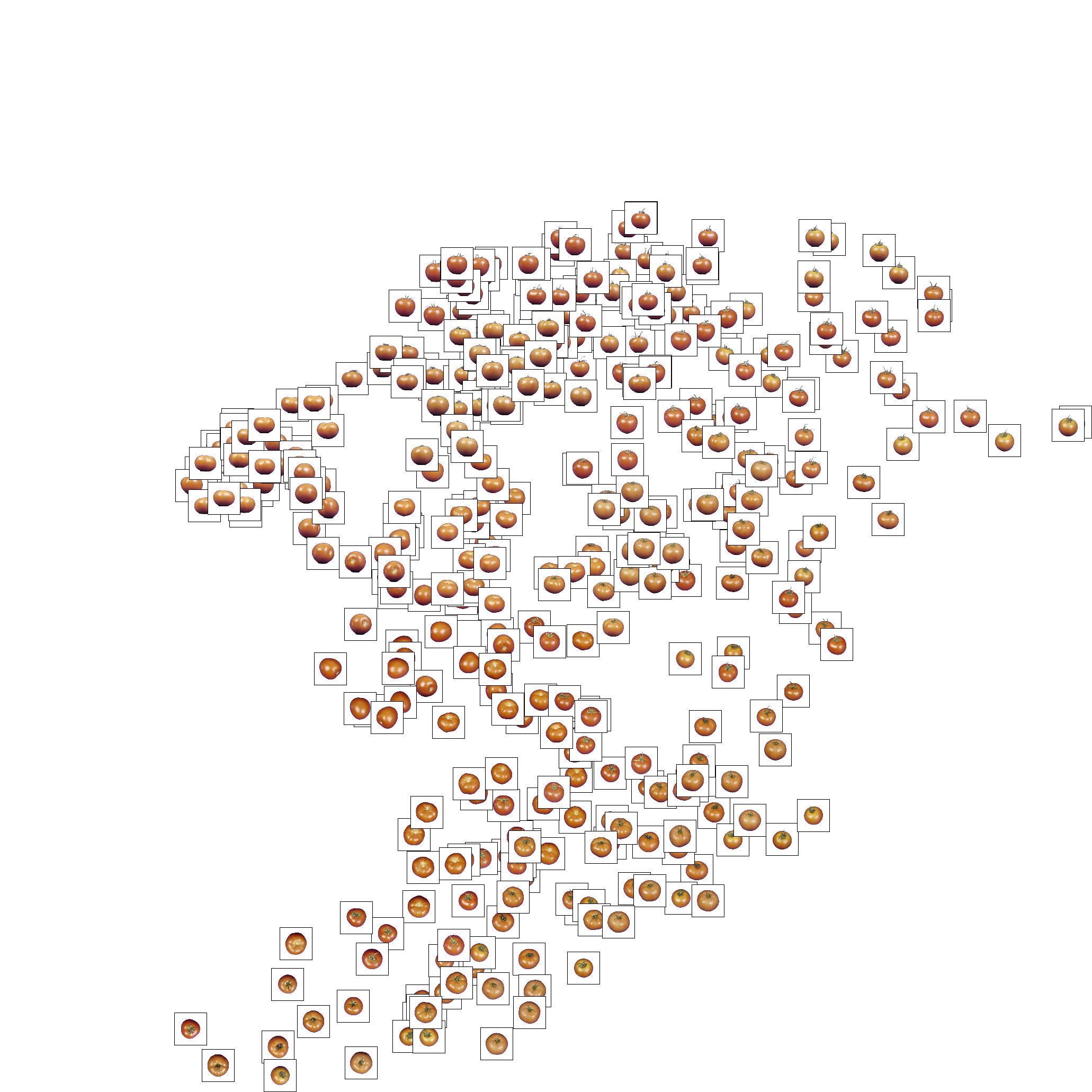

Experiment on ETH-80, qualitative results

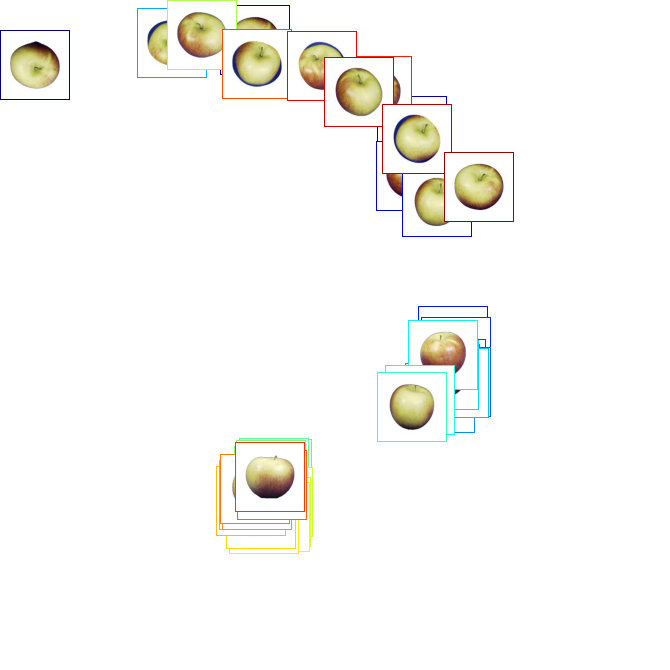

The images on this page are similar to those in figure 2 of the paper. They show the PCA embeddings of AlexNet features (first column), of the style feature of our decomposition (second column), and of the rotation feature of our decomposition (third and fourth columns). Since the ETH dataset provides only 10 instances per category, we show the projections of all the views for both style (second column) and orientation (third column). Note that this differs from the second and third columns of figure 2 in the paper, which show the marginalized features. The first column is similar to the first column of figure 2 in the paper, and the fourth column corresponds to columns 3 and 4 of figure 2.
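To illustrate the difference between plotting every view and plotting marginalized features, the following Python sketch computes 2D PCA embeddings with NumPy. It is not the authors' code. The feature array is random data standing in for AlexNet activations, its shape (10 instances × 41 views × 256 dimensions) is hypothetical, and treating marginalization as a plain average over the other factor is an assumption made only for illustration.

```python
import numpy as np

def pca_2d(features):
    """Project rows of `features` (n_samples x n_dims) onto their top-2 principal components."""
    centered = features - features.mean(axis=0, keepdims=True)
    # Right singular vectors of the centered data are the principal directions,
    # ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

# Hypothetical stand-in for CNN features: 10 instances x 41 views x 256 dims.
rng = np.random.default_rng(0)
feats = rng.normal(size=(10, 41, 256))

# "All views" embedding: every (instance, view) image becomes one point.
all_points = pca_2d(feats.reshape(-1, feats.shape[-1]))  # shape (410, 2)

# Marginalized alternative (assumption: a plain average over the other factor):
# one point per instance (style) or one point per view (viewpoint).
style_marginal = pca_2d(feats.mean(axis=1))  # shape (10, 2)
view_marginal = pca_2d(feats.mean(axis=0))   # shape (41, 2)
```

With only 10 instances, the style marginal yields just 10 points per category, which is why showing all views gives a denser, if noisier, picture.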

Although the smaller numbers of views and instances, compared with the experiments in the paper, make these embeddings slightly harder to interpret, one can still see that the spaces associated with style and viewpoint differ and are meaningful. It is also clear that viewpoint is encoded differently for different categories, and that fc6 is more invariant to left/right flips. Interestingly, color also appears to be an important factor in differentiating the instances.
