IAMAHA 2023

² LIGM, Ecole des Ponts, Univ Gustave Eiffel, CNRS, Marne-la-Vallée, France

³ IMJ-PRG, UMR 7586, Sorbonne Université, CNRS, Université Paris-Cité

⁴ UMR 8167, Collège de France, Sorbonne Université, CNRS, Paris, France

\* corresponding authors

Abstract

The VHS project (computer Vision and Historical analysis of Scientific illustration circulation) proposes a new approach to the historical study of the circulation of scientific knowledge based on new methods of illustration analysis. Our contributions in this paper are twofold. First, we present a semi-automatic interactive pipeline for scientific illustration extraction that incorporates expert feedback from historians. Second, we introduce a new dataset of scientific illustrations from the Middle Ages to the modern era, consisting of 11k illustrations validated by historians and a total of 235k illustrations obtained from 405k corpus pages. We further discuss our ongoing research on identifying series of related illustrations in this data.

Approach and Results

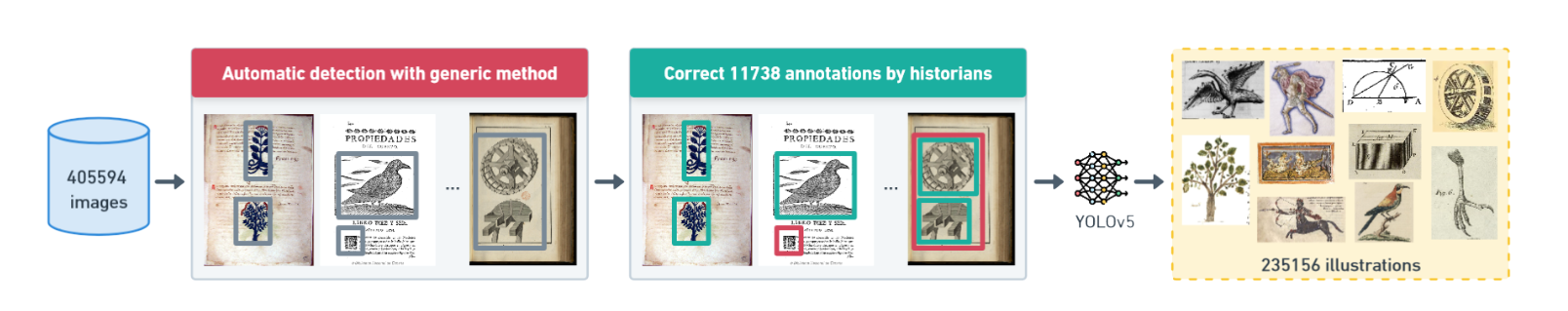

Figure 1: Overview of our pipeline to refine illustration detections in scientific historical documents.

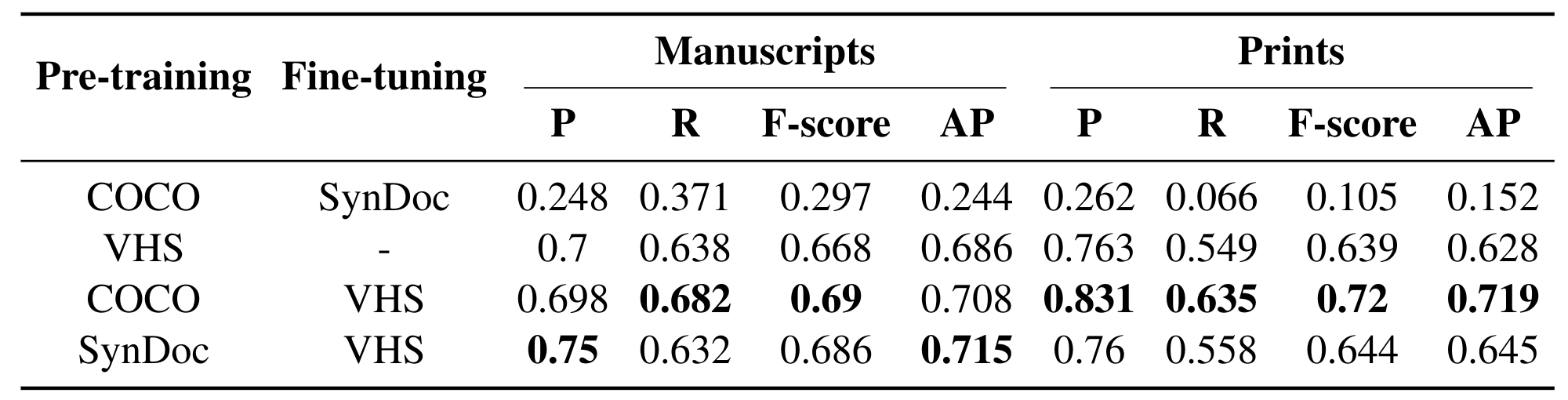

Our method adapts the YOLOv5 object detector (Jocher et al., 2022) to the specific task of detecting historical scientific illustrations. We developed a two-stage workflow (Fig. 1): we first extracted illustrations from a subset of 54 manuscripts and 704 printed volumes uploaded to our platform, and these extractions were then subject to expert feedback from historians. We then fine-tuned our model on this curated VHS dataset and obtained a significant performance boost (Tab. 1). The VHS dataset will be made publicly available soon.
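To illustrate how historian-validated detections could feed the fine-tuning stage, the following minimal sketch converts pixel bounding boxes into the text label format that YOLOv5 reads (one line per object: class index, then box center and size normalized by the page dimensions). The function names, the single class index `0` for "illustration", and the example page size are assumptions made for illustration; they are not taken from the VHS platform.

```python
from typing import List, Tuple

# Hypothetical box type: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def to_yolo_label(box: Box, page_width: int, page_height: int, class_id: int = 0) -> str:
    """Convert one pixel box to a YOLO label line: 'class cx cy w h', normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / page_width    # normalized box center (x)
    cy = (y_min + y_max) / 2 / page_height   # normalized box center (y)
    w = (x_max - x_min) / page_width         # normalized box width
    h = (y_max - y_min) / page_height        # normalized box height
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


def page_labels(validated_boxes: List[Box], page_width: int, page_height: int) -> str:
    """Build the content of one label file from the boxes validated for a page."""
    return "\n".join(to_yolo_label(b, page_width, page_height) for b in validated_boxes)


# Assumed example: one validated illustration on a 1000 x 2000 pixel page.
example = page_labels([(100, 200, 500, 600)], page_width=1000, page_height=2000)
print(example)
```

In such a setup, each page image would get a label file of this form, and pages where historians rejected every detection would simply get an empty file.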

Table 1: Performance of YOLOv5s in precision (P), recall (R), F-score and average precision (AP).
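As a reminder of how precision, recall and F-score relate for a detector, here is a minimal sketch that matches predicted boxes to ground-truth boxes by intersection over union (IoU) and derives the three scores. The IoU threshold of 0.5, the greedy matching strategy, and the function names are assumptions; the evaluation protocol actually used for Table 1 is not described here, and average precision (which requires sweeping over confidence thresholds) is left out.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(
    predictions: List[Box], ground_truth: List[Box], iou_threshold: float = 0.5
) -> Tuple[float, float, float]:
    """Greedy one-to-one matching; predictions are assumed sorted by descending confidence."""
    matched = set()  # indices of ground-truth boxes already claimed by a prediction
    tp = 0
    for pred in predictions:
        best_idx, best_iou = -1, 0.0
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            score = iou(pred, gt)
            if score > best_iou:
                best_idx, best_iou = idx, score
        if best_idx >= 0 and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
    fp = len(predictions) - tp   # predictions with no matching ground truth
    fn = len(ground_truth) - tp  # ground-truth boxes that were missed
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total > 0 else 0.0
    return precision, recall, f1


# Toy data: one correct detection, one false positive, two missed illustrations.
preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
truth = [(0, 0, 10, 10), (100, 100, 110, 110), (200, 200, 210, 210)]
p, r, f = precision_recall_f1(preds, truth)
print(p, r, f)
```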

References

Jocher, G., Chaurasia, A., Stoken, A., Borovec, J., NanoCode012, Kwon, Y., ... Jain, M. (2022). ultralytics/yolov5: v7.0 - YOLOv5 SOTA Realtime Instance Segmentation (Version v7.0).

Lin, T. Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., ... Zitnick, C. L. (2014). Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V (pp. 740-755). Springer International Publishing.

Monnier, T., & Aubry, M. (2020). docExtractor: An off-the-shelf historical document element extraction. In 2020 17th International Conference on Frontiers in Handwriting Recognition (ICFHR) (pp. 91-96). IEEE.

BibTeX

@inproceedings{aouinti2023computer,

title={{Computer Vision and Historical Scientific Illustrations}},

author={Aouinti, Fouad and Baltaci, Zeynep Sonat and Aubry, Mathieu and Guilbaud, Alexandre and Lazaris, Stavros},

booktitle={IAMAHA},

year={2023}}

Acknowledgements

This work was supported by the ANR (ANR project VHS ANR-21-CE38-0008). Mathieu Aubry and Zeynep Sonat Baltaci were supported by ERC project DISCOVER funded by the European Union's Horizon Europe Research and Innovation programme under grant agreement No. 101076028. Views and opinions expressed are, however, those of the authors only and do not necessarily reflect those of the European Union. Neither the European Union nor the granting authority can be held responsible for them.