Abstract:

Inspired by vector graphics software, we propose a model that learns to reconstruct a rasterized image by overlaying a finite number of layers, each of a single uniform color. Much like a human composing the image, the network iteratively predicts the next mask by looking at both the target image to reconstruct and the current state of the canvas.

How does it work?

We frame image generation as the alpha-blending composition of a sequence of layers. More precisely, we define the generation procedure recurrently, with a fixed budget of T iterations: starting from a blank canvas I0 of uniform, randomly chosen color, we iteratively blend a total of T generated colored masks onto it.
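The recurrence can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume each step produces a soft mask M_t with values in [0, 1] and one RGB color c_t, blended as I_t = (1 - M_t) * I_{t-1} + M_t * c_t. The learned network is replaced by a hypothetical `predict_layer` stub; in the actual model this step would look at both the target image and the current canvas.

```python
import numpy as np


def predict_layer(target, canvas, rng):
    """Hypothetical stand-in for the learned mask/color predictor.

    The real network would condition on both `target` and `canvas`; this stub
    returns a random soft mask and the mask-weighted mean target color.
    """
    h, w, _ = target.shape
    mask = rng.uniform(0.0, 1.0, size=(h, w, 1))  # soft mask in [0, 1]
    weights = mask / mask.sum()
    color = (weights * target).sum(axis=(0, 1))   # a single RGB color
    return mask, color


def compose(target, T=10, seed=0):
    """Alpha-blend T colored masks onto a uniform random-color canvas I_0."""
    rng = np.random.default_rng(seed)
    h, w, c = target.shape
    # I_0: blank canvas filled with one randomly chosen color
    canvas = np.ones((h, w, c)) * rng.uniform(0.0, 1.0, size=(1, 1, c))
    layers = []
    for _ in range(T):
        mask, color = predict_layer(target, canvas, rng)
        # I_t = (1 - M_t) * I_{t-1} + M_t * c_t
        canvas = (1.0 - mask) * canvas + mask * color
        layers.append((mask, color))
    return canvas, layers


# Usage: compose a 32x32 RGB target with a budget of T = 5 layers
target = np.random.default_rng(1).uniform(size=(32, 32, 3))
canvas, layers = compose(target, T=5)
```

Because each step is a convex combination of the previous canvas and a color, pixel values stay in [0, 1], and the final image is fully described by the initial color plus the list of (mask, color) pairs.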

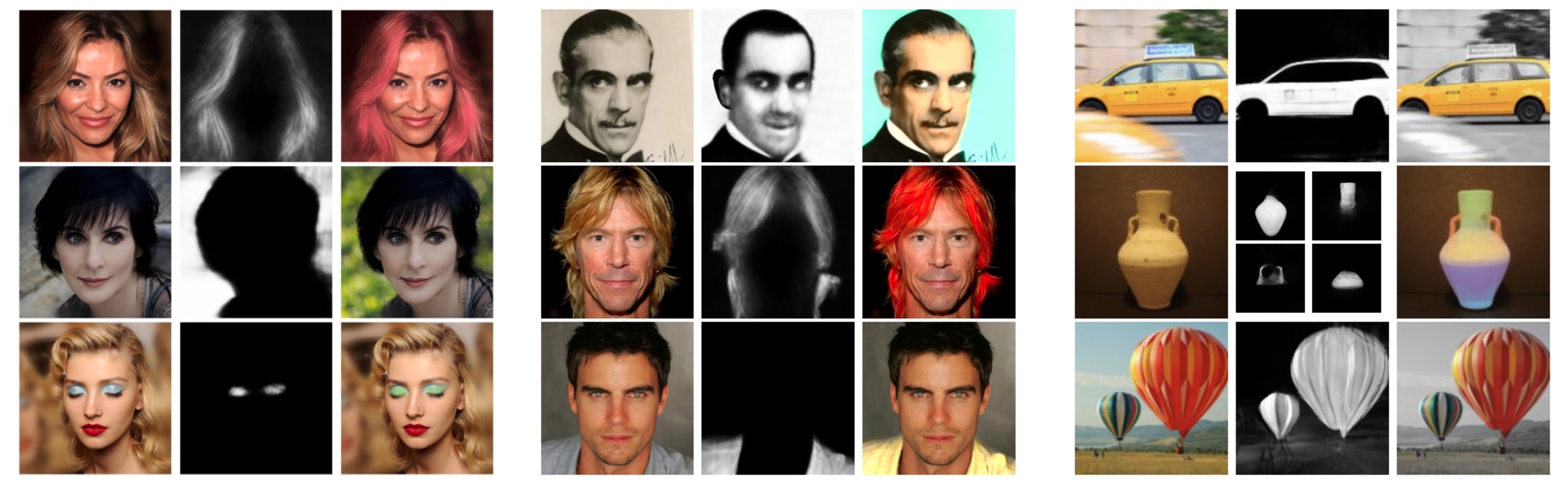

Editing on CelebA-HQ and ImageNet:

The masks we obtain allow us to perform edits seamlessly, without the burden of manual mask selection. The visual quality of the edits is satisfying for an unsupervised method.
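As an illustration of why a layered decomposition makes editing easy, the sketch below recolors a single layer and re-blends the stack, leaving every other layer untouched. It assumes each layer is stored as a hypothetical (mask, color) pair blended as I_t = (1 - M_t) * I_{t-1} + M_t * c_t onto a uniform base color; it shows one possible kind of edit and is not necessarily the editing procedure used in the paper.

```python
import numpy as np


def recompose(base_color, layers, shape):
    """Re-blend stored (mask, color) layers onto a uniform base canvas."""
    canvas = np.ones(shape) * np.asarray(base_color, dtype=float)
    for mask, color in layers:
        # I_t = (1 - M_t) * I_{t-1} + M_t * c_t
        canvas = (1.0 - mask) * canvas + mask * np.asarray(color, dtype=float)
    return canvas


def recolor_layer(base_color, layers, shape, k, new_color):
    """Edit: swap the color of layer k, keep its mask, and recompose."""
    edited = list(layers)  # copy so the original decomposition is preserved
    mask, _ = edited[k]
    edited[k] = (mask, np.asarray(new_color, dtype=float))
    return recompose(base_color, edited, shape)


# Usage: one hard mask covering the left half of a 4x4 image
mask = np.zeros((4, 4, 1))
mask[:, :2] = 1.0
layers = [(mask, [1.0, 0.0, 0.0])]          # a red layer
original = recompose([0.0, 0.0, 0.0], layers, (4, 4, 3))
edited = recolor_layer([0.0, 0.0, 0.0], layers, (4, 4, 3), 0, [0.0, 0.0, 1.0])
```

Since the selected region is already a mask produced by the decomposition, no manual selection step is needed for this kind of edit.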

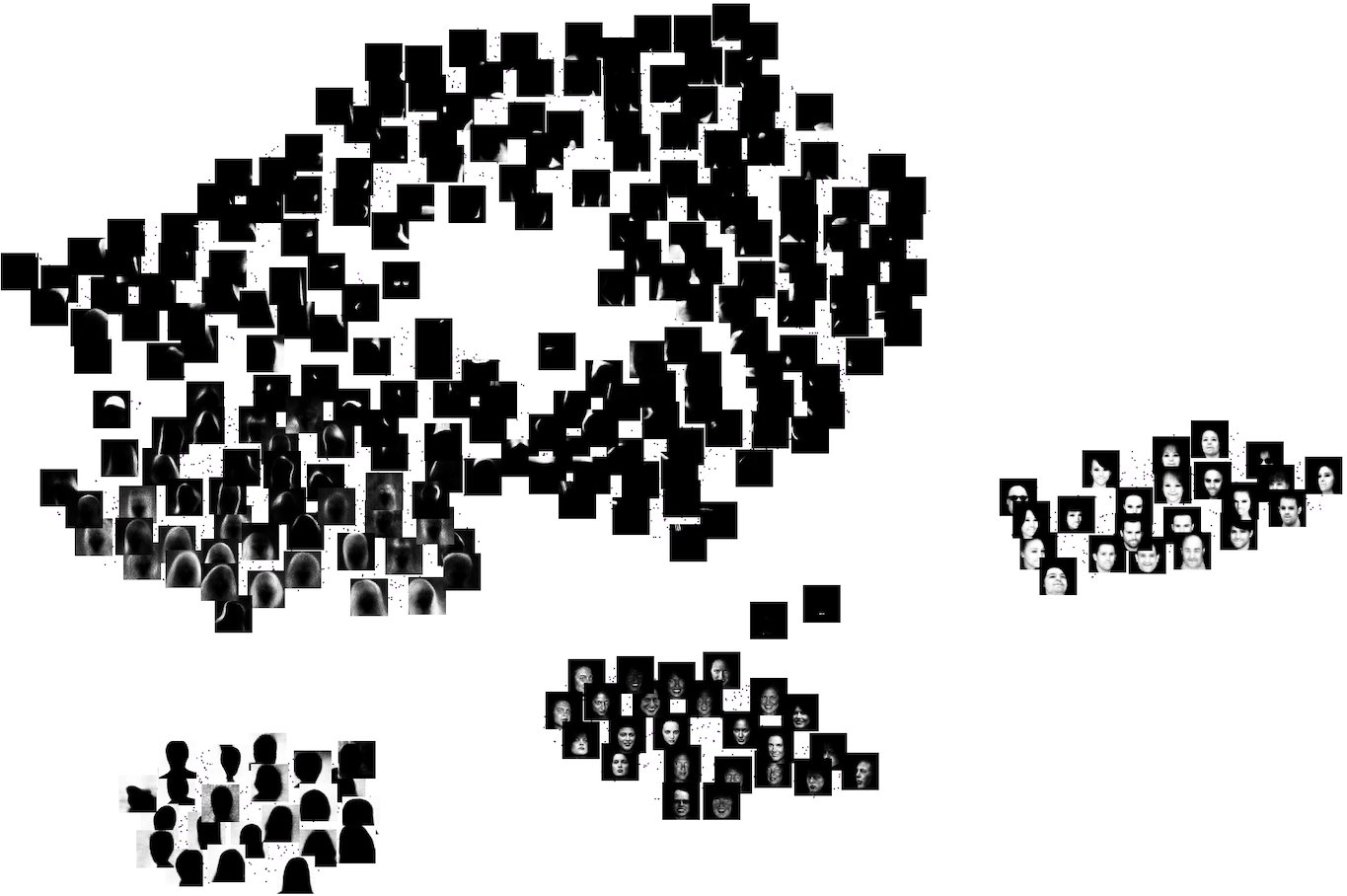

t-SNE over the learned mask space on CelebA:

A t-SNE visualization of the mask parameters obtained on CelebA is shown below. Distinct clusters of masks are clearly visible, corresponding to backgrounds, hair, face shadows, etc.

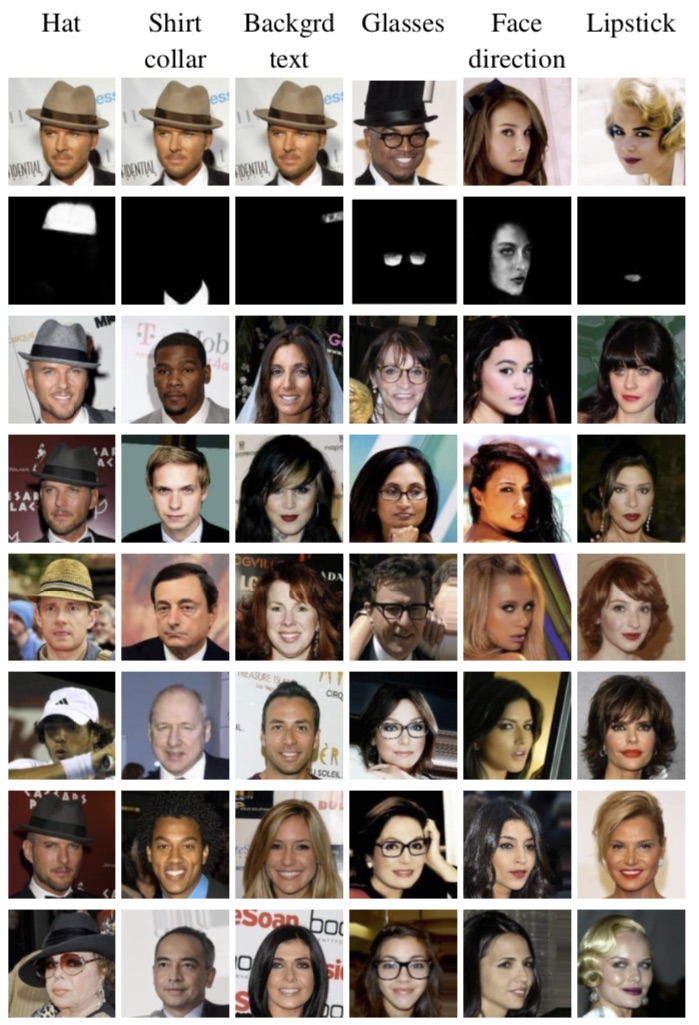

Attribute retrieval using nearest neighbors in mask space:

Given a target image and a mask of an area of interest extracted from it, a nearest neighbor search in the learned mask parameter space allows the retrieval of images sharing the desired attribute with the target.
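A nearest-neighbor lookup of this kind can be sketched as follows. We assume, for illustration, that every mask is summarized by a fixed-length parameter vector, that all masks from a collection are stacked into a hypothetical `database` array of shape (N, D), and that Euclidean distance is used; the paper's exact parameterization and distance may differ.

```python
import numpy as np


def retrieve(query, database, k=5):
    """Return the indices and distances of the k masks closest to `query`.

    query:    (D,) parameter vector of the mask selected in the target image
    database: (N, D) parameter vectors of masks extracted from other images
    """
    dists = np.linalg.norm(database - query[None, :], axis=1)  # Euclidean
    order = np.argsort(dists, kind="stable")[:k]
    return order, dists[order]


# Usage: map retrieved masks back to the (hypothetical) images they came from
database = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.1, 0.0]])
owners = ["img_a", "img_b", "img_c", "img_d"]
idx, dist = retrieve(np.array([0.0, 0.0]), database, k=2)
neighbors = [owners[i] for i in idx]
```

For large collections, the brute-force distance computation could be replaced by an approximate nearest-neighbor index without changing the idea.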

How to cite?

Citation

SBAI, Othman, COUPRIE, Camille, and AUBRY, Mathieu. Unsupervised Image Decomposition in Vector Layers. ICIP 2020 - IEEE International Conference on Image Processing, Abu Dhabi, UAE, 2020. arXiv:1812.05484.

BibTeX

@inproceedings{sbaio2020pix2vec,
  TITLE = {{Unsupervised Image Decomposition in Vector Layers}},
  AUTHOR = {Sbai, Othman and Couprie, Camille and Aubry, Mathieu},
  URL = {https://arxiv.org/abs/1812.05484},
  BOOKTITLE = {{ICIP 2020 - IEEE International Conference on Image Processing}},
  ADDRESS = {Abu Dhabi, UAE},
  PUBLISHER = {{IEEE}},
  YEAR = {2020},
}