Abstract

We propose a method to allow precise and extremely fast mesh extraction

from 3D Gaussian Splatting (SIGGRAPH 2023).

Gaussian Splatting has recently become very popular as it yields realistic rendering

while being significantly faster to train than NeRFs. It is, however, challenging

to extract a mesh from the millions of tiny 3D Gaussians, as

these Gaussians tend to be unorganized after optimization, and no method has been proposed so far.

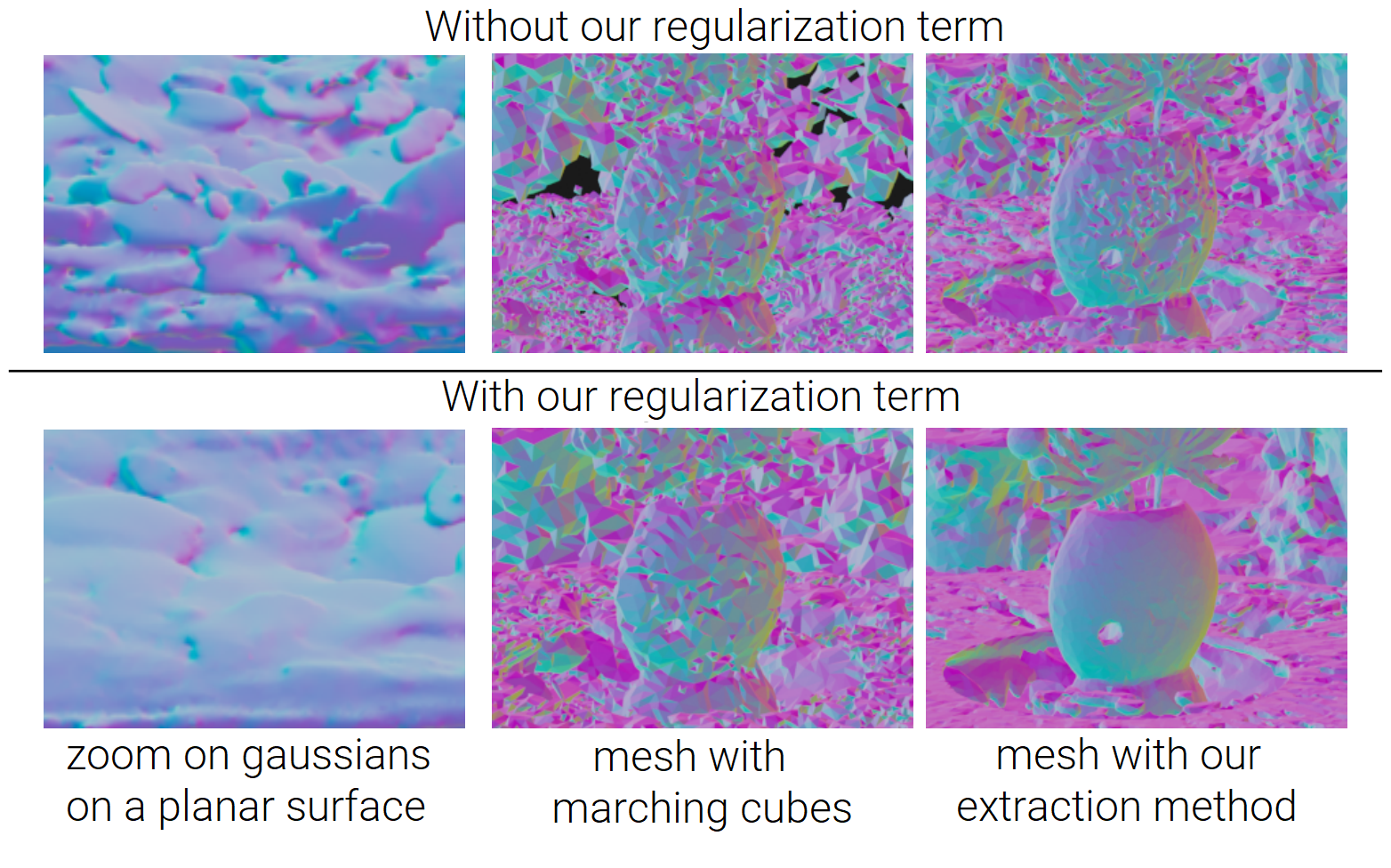

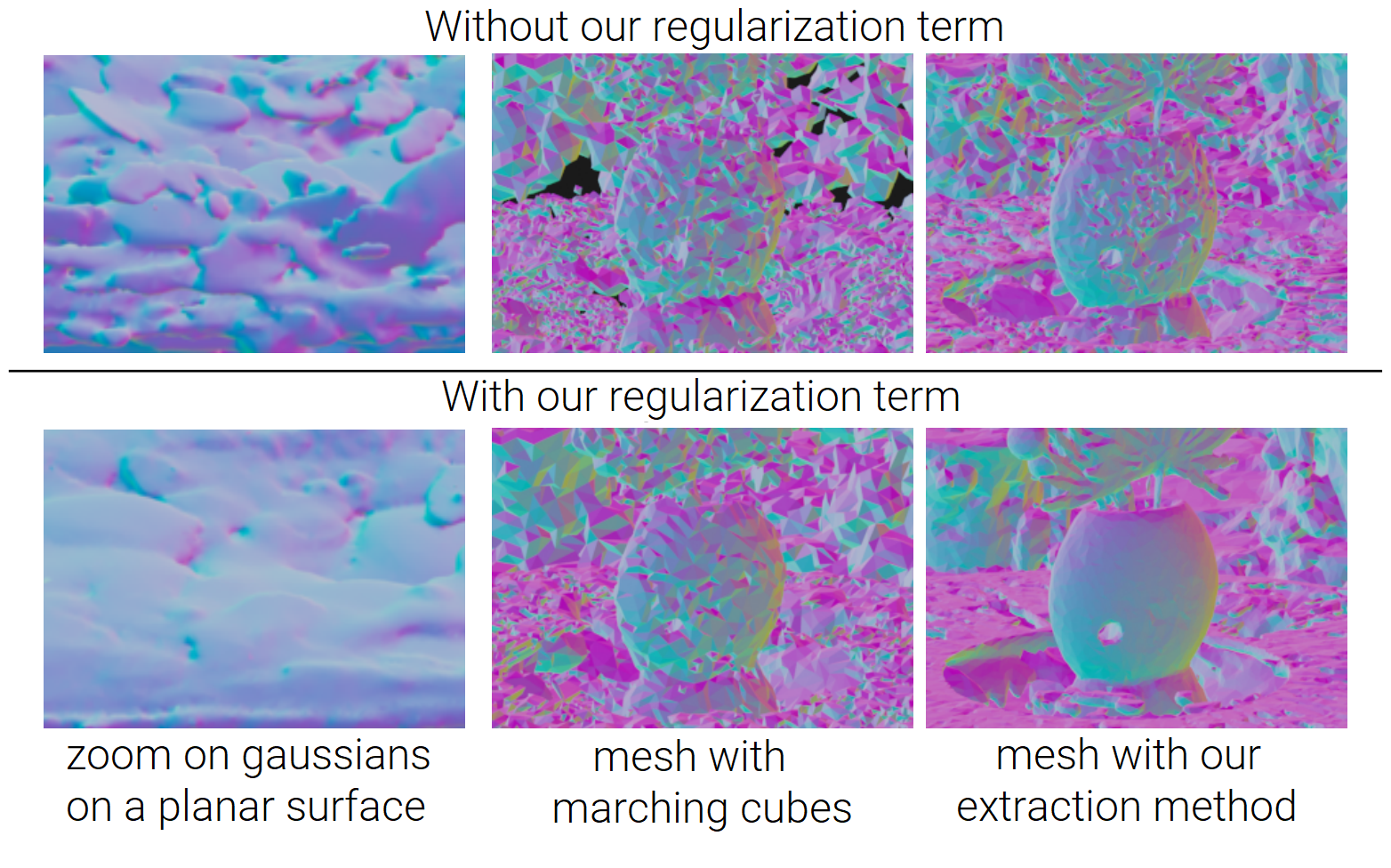

Our first key contribution is a regularization term that encourages the 3D Gaussians

to align well with the surface of the scene.

We then introduce a method that exploits this alignment to sample points on the real surface of the scene

and extract a mesh from the Gaussians using Poisson reconstruction, which is fast, scalable, and preserves details,

in contrast to the Marching Cubes algorithm usually applied to extract meshes from Neural SDFs.

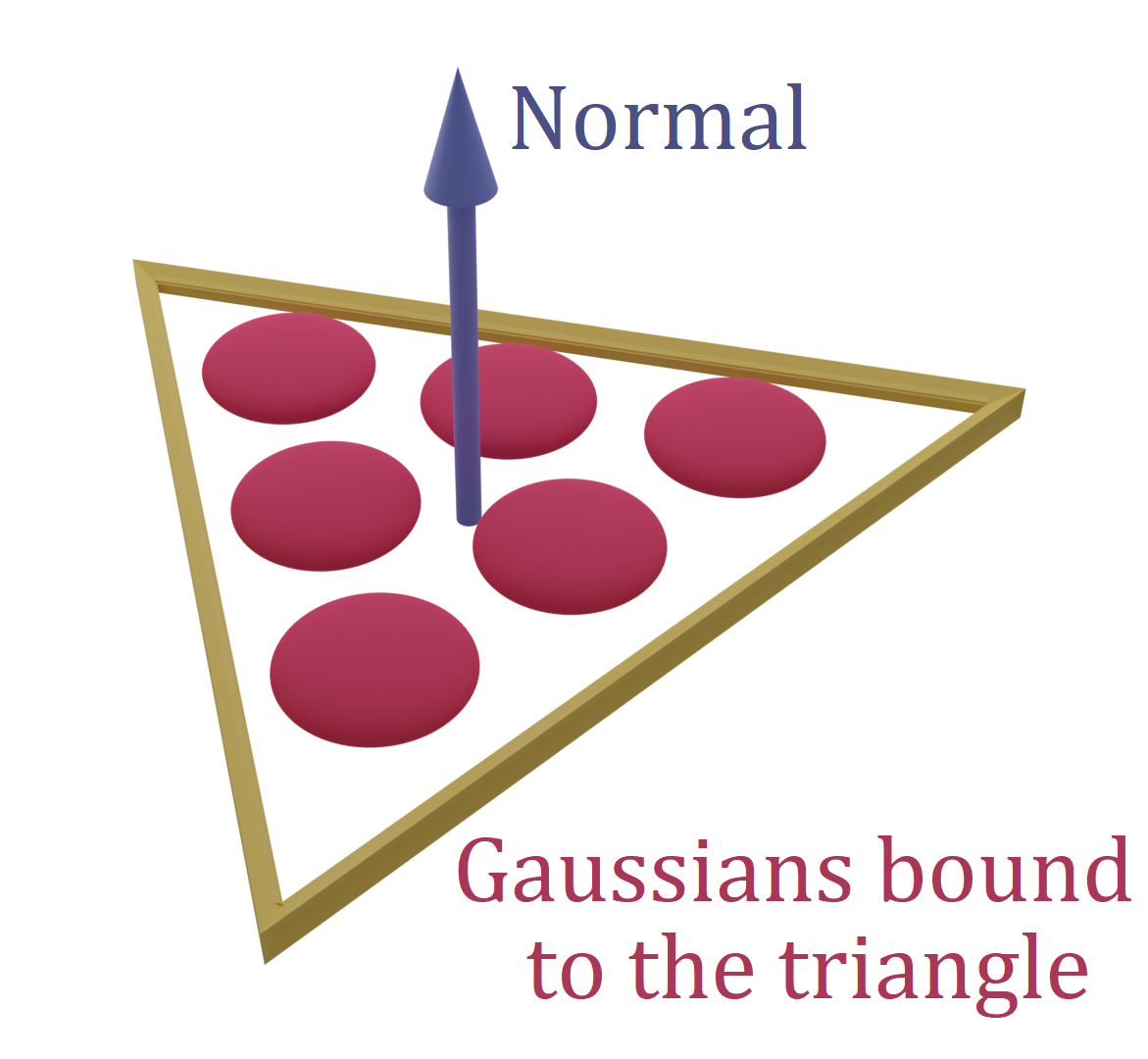

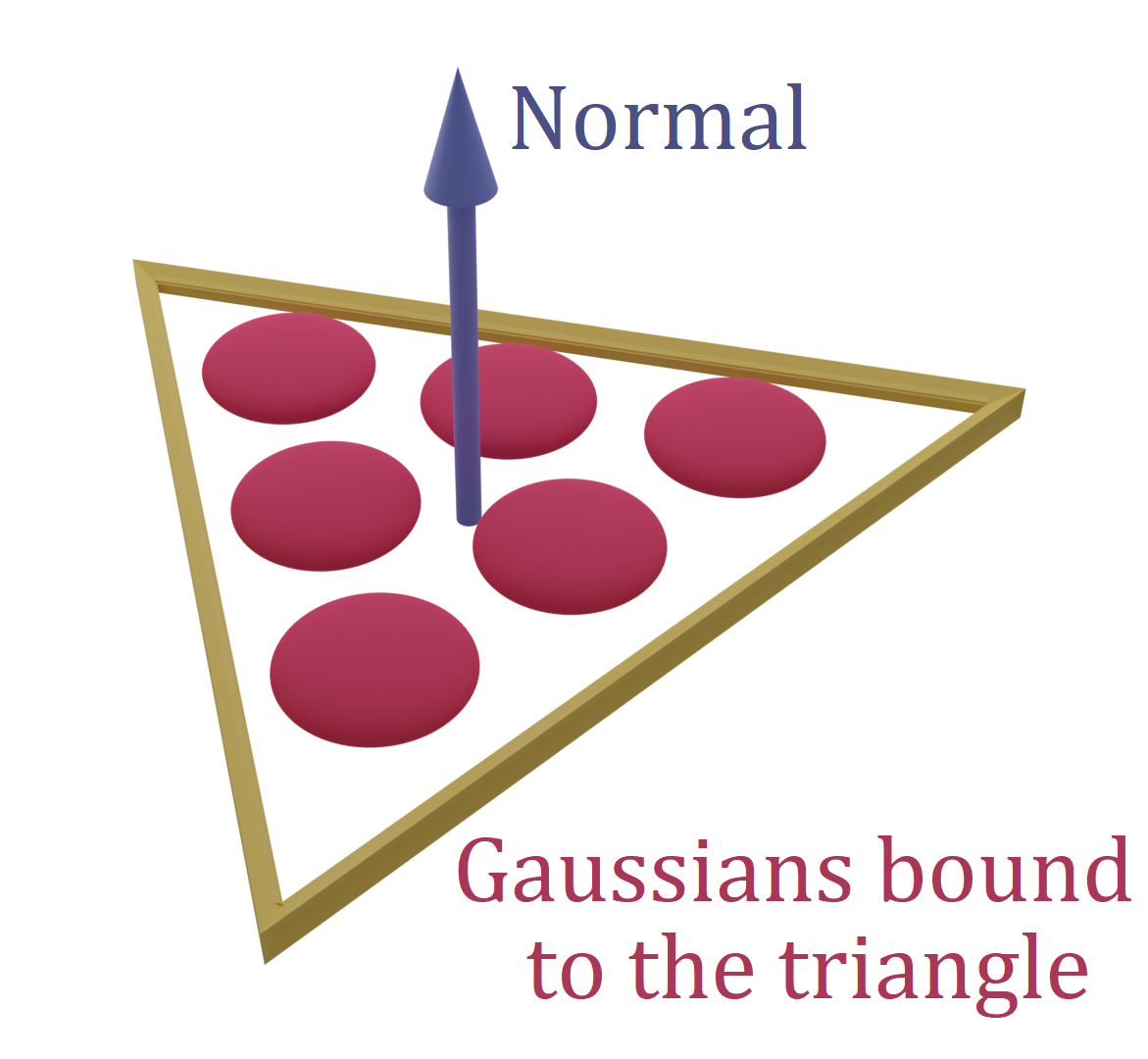

Finally, we introduce an optional refinement strategy that binds Gaussians to the surface of the mesh,

and jointly optimizes these Gaussians and the mesh through Gaussian splatting rendering.

This enables easy editing, sculpting, rigging, animating, or relighting of the Gaussians using traditional software (Blender, Unity, Unreal Engine, etc.)

by manipulating the mesh instead of the Gaussians themselves.

Retrieving such an editable mesh for realistic rendering is done within minutes with our method,

compared to hours with the state-of-the-art method on neural SDFs, while providing a better rendering quality in terms of PSNR, SSIM and LPIPS.

SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction

1. Aligning the Gaussians with the Surface

To facilitate the creation of a mesh from the Gaussians,

we first propose a regularization term that encourages the Gaussians

to be well distributed over the scene surface so that they

capture the scene geometry much better.

Our approach is to derive a volume density from the Gaussians under the assumption

that they are flat and well distributed over the scene surface.

By minimizing the difference between this density and the actual one

computed from the Gaussians during optimization,

we encourage the 3D Gaussians to represent the surface geometry well.
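To make the idea concrete, here is a minimal Python sketch of the two densities being compared. It assumes the actual density is a sum of opacity-weighted Gaussians, and that the "ideal" density at a point depends only on the offset from the closest Gaussian along its thinnest axis. Both forms, the dictionary layout, and the names `density`, `ideal_density`, and `regularization_loss` are illustrative assumptions, not the authors' implementation.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mahalanobis_sq(p, g):
    """Squared Mahalanobis distance from point p to the center of Gaussian g."""
    diff = [pi - mi for pi, mi in zip(p, g["mu"])]
    # Covariance is R S^2 R^T: project onto each axis and divide by its scale.
    return sum((_dot(diff, axis) / s) ** 2
               for axis, s in zip(g["axes"], g["scales"]))

def density(p, gaussians):
    """Actual density: sum of opacity-weighted Gaussian values at p."""
    return sum(g["alpha"] * math.exp(-0.5 * mahalanobis_sq(p, g))
               for g in gaussians)

def ideal_density(p, gaussians):
    """Assumed density if Gaussians were flat, opaque, and spread over the surface."""
    g = min(gaussians, key=lambda h: mahalanobis_sq(p, h))  # closest Gaussian
    k = min(range(3), key=lambda i: g["scales"][i])         # thinnest axis, used as normal
    diff = [pi - mi for pi, mi in zip(p, g["mu"])]
    return math.exp(-0.5 * (_dot(diff, g["axes"][k]) / g["scales"][k]) ** 2)

def regularization_loss(points, gaussians):
    """Mean absolute difference between actual and ideal density at sample points."""
    return sum(abs(density(p, gaussians) - ideal_density(p, gaussians))
               for p in points) / len(points)

# Toy data (hypothetical): one flat opaque Gaussian, one thicker semi-transparent one.
IDENTITY = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
flat = {"mu": (0.0, 0.0, 0.0), "axes": IDENTITY, "scales": (1.0, 1.0, 0.01), "alpha": 1.0}
blob = {"mu": (0.0, 0.0, 0.0), "axes": IDENTITY, "scales": (1.0, 1.0, 0.5), "alpha": 0.5}

flat_loss = regularization_loss([(0.0, 0.0, 0.01)], [flat])
blob_loss = regularization_loss([(0.0, 0.0, 0.5)], [blob])
```

In this toy setup, the flat, opaque Gaussian gives a loss of zero at a sample point on its normal axis, while the thicker, semi-transparent one is penalized.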

2. Efficient Mesh Extraction

Gaussian Splatting representations of real scenes typically end up with one or several million

3D Gaussians with different scales and rotations, the majority of them being extremely small

in order to reproduce texture and details in the scene.

This results in a density function that is close to zero almost everywhere,

and the Marching Cubes algorithm fails to extract proper level sets of

such a sparse density function even with a fine voxel grid.

Instead, we introduce a method that very efficiently samples points

on the visible part of a level set of the density function,

allowing us to run the Poisson reconstruction algorithm on these points

to obtain a triangle mesh.

This approach is scalable, in contrast to the Marching Cubes algorithm,

and reconstructs a highly detailed surface mesh within minutes on a single GPU,

whereas state-of-the-art methods that rely on neural SDFs to extract meshes from radiance fields take hours.
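As a rough illustration of sampling a point on the visible part of a level set, the sketch below marches along a single camera ray, evaluates a density function at evenly spaced steps, and linearly interpolates the first place where the density rises through the chosen level. The uniform stepping, the function name `first_level_set_crossing`, and the toy density are assumptions made for this example; the actual sampling scheme is not described here.

```python
import math

def first_level_set_crossing(density_fn, origin, direction, level, t_max, n_steps=200):
    """Return the first point along the ray where density rises through `level`, or None."""
    prev_t = 0.0
    prev_d = density_fn(origin)
    for i in range(1, n_steps + 1):
        t = t_max * i / n_steps
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d_val = density_fn(p)
        if prev_d < level <= d_val:
            # Linear interpolation between the two samples bracketing the level.
            w = (level - prev_d) / (d_val - prev_d)
            t_hit = prev_t + w * (t - prev_t)
            return tuple(o + t_hit * d for o, d in zip(origin, direction))
        prev_t, prev_d = t, d_val
    return None  # the ray never reaches the level set

# Toy density (hypothetical): a thin slab of Gaussians around the plane z = 2.
def slab_density(p):
    return math.exp(-0.5 * ((p[2] - 2.0) / 0.1) ** 2)

hit = first_level_set_crossing(slab_density, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
                               level=0.5, t_max=4.0)
miss = first_level_set_crossing(slab_density, (0.0, 0.0, 0.0), (0.0, 0.0, -1.0),
                                level=0.5, t_max=4.0)
```

Repeating this over many rays yields a point cloud on the visible side of the surface. Poisson reconstruction also needs a normal per point, and the resulting oriented points could then be passed to an off-the-shelf implementation such as Open3D's `create_from_point_cloud_poisson`; which implementation the authors use is not stated here.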

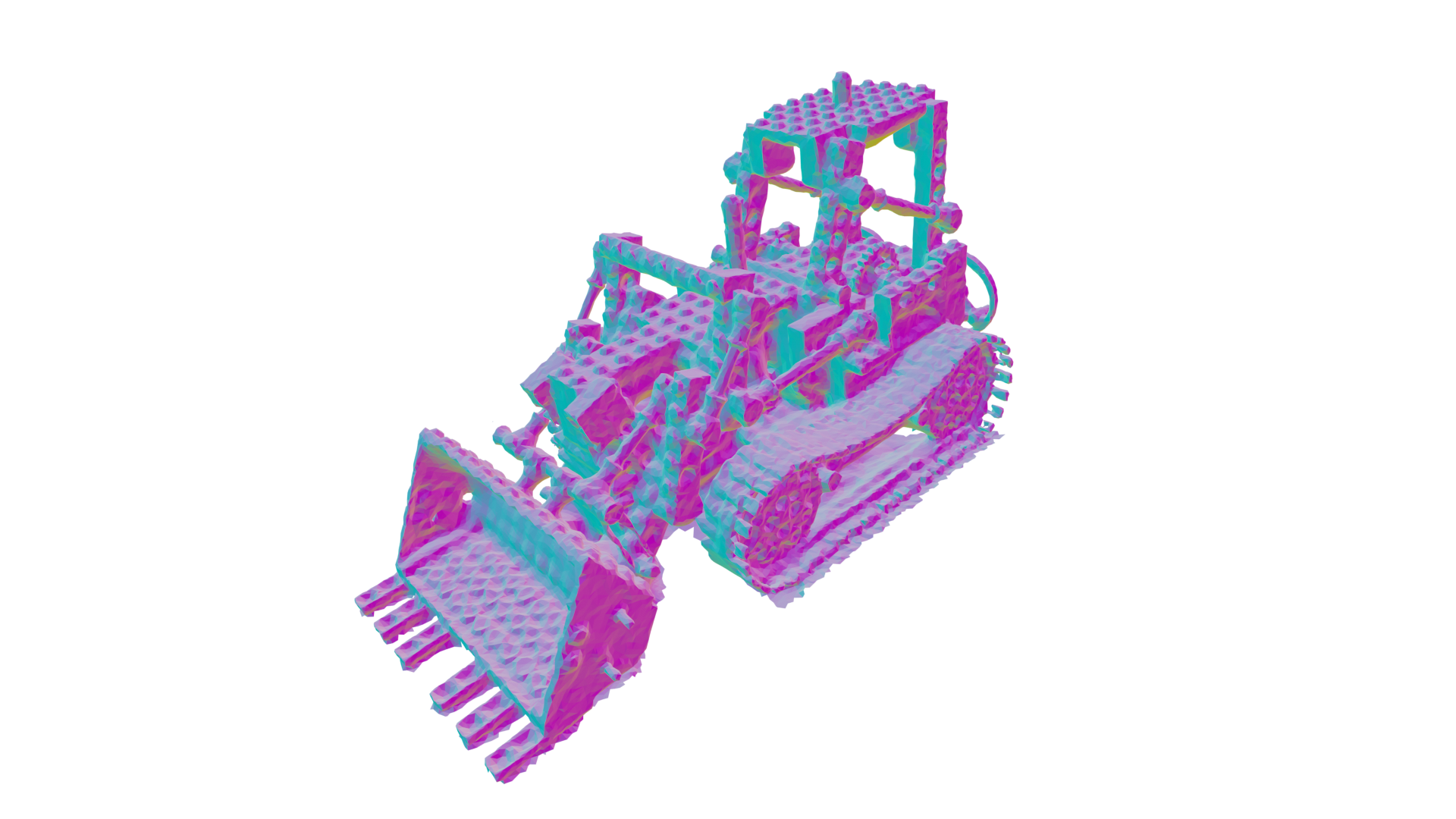

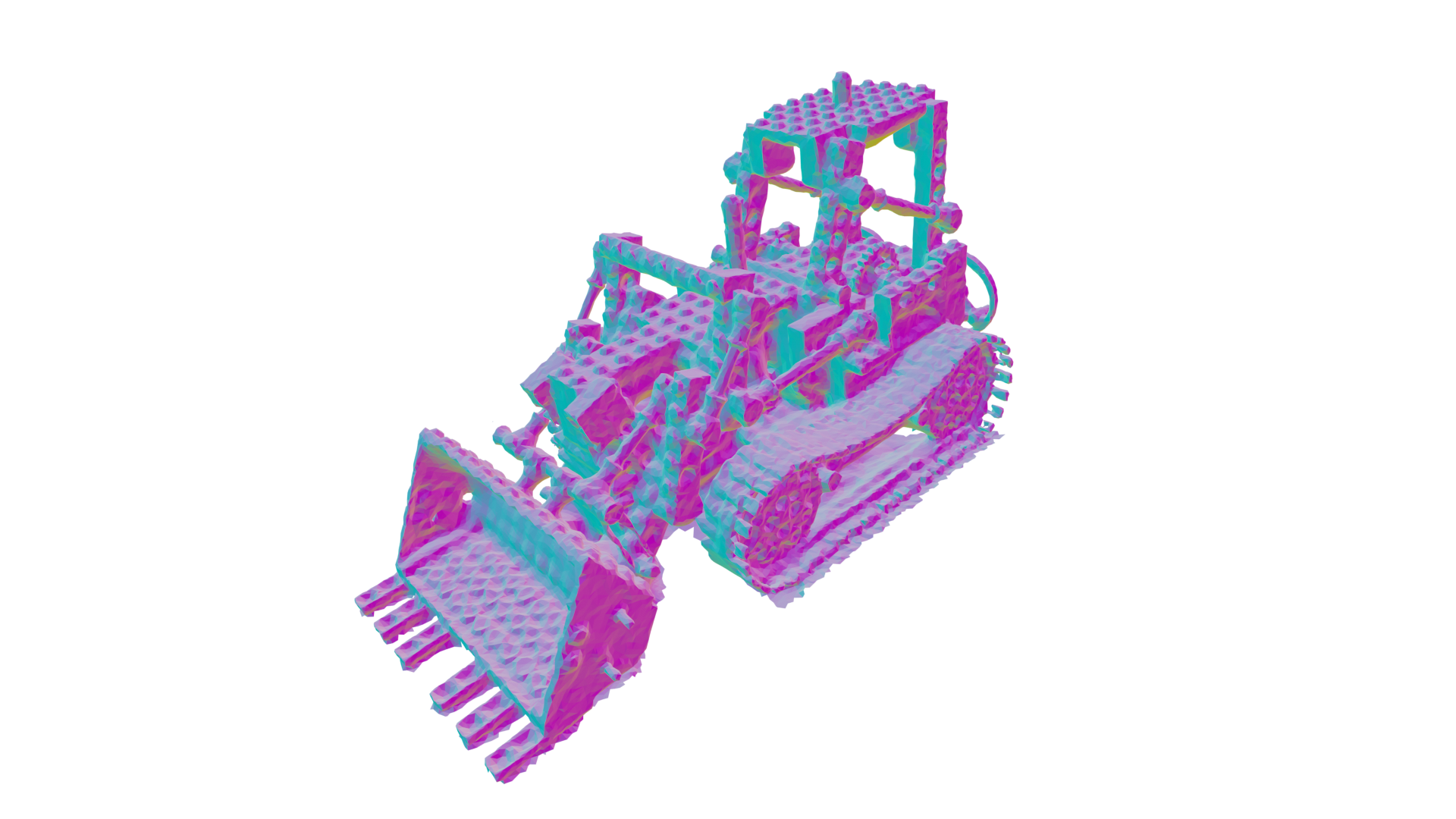

3. Binding New 3D Gaussians to the Mesh

After extracting this mesh, we propose an optional refinement strategy:

We bind new Gaussians to the mesh triangles and optimize the Gaussians

and the mesh jointly using the Gaussian Splatting rasterizer.

This optimization enables high-quality rendering of the mesh

using Gaussian splatting rendering rather than

traditional textured mesh rendering. We provide several examples below.

This results in higher performance in terms of rendering quality

than other radiance field models relying on an underlying mesh at inference.

This binding strategy also makes it possible to use traditional mesh-editing tools

for editing a Gaussian Splatting representation of a scene,

offering endless possibilities for Computer Graphics.
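One simple way to picture the binding is with fixed barycentric coordinates: each Gaussian center is a fixed weighted average of its triangle's vertices, so any edit to the mesh carries the Gaussians along. The sketch below shows only this geometric part, not the joint optimization. The function name `gaussian_centers`, the number of Gaussians per triangle, and the weights are assumptions chosen for illustration.

```python
def gaussian_centers(vertices, triangles, bary_weights):
    """Place one Gaussian center per (triangle, barycentric weight) pair."""
    centers = []
    for tri in triangles:
        corners = [vertices[i] for i in tri]
        for w in bary_weights:
            # Weighted average of the three corners, one coordinate at a time.
            centers.append(tuple(
                sum(w[k] * corners[k][axis] for k in range(3))
                for axis in range(3)
            ))
    return centers

# Toy mesh (hypothetical): a single triangle with one Gaussian at its centroid.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
tris = [(0, 1, 2)]
weights = [(1 / 3, 1 / 3, 1 / 3)]

before = gaussian_centers(verts, tris, weights)

# Editing the mesh (here, lifting it by 2 units) moves the bound Gaussian too.
moved = [(x, y, z + 2.0) for x, y, z in verts]
after = gaussian_centers(moved, tris, weights)
```

Because the weights stay fixed, a deformation applied in a mesh editor only needs to update the vertex positions; the Gaussian centers follow from the same formula.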

Example of scene composition

In the following video, we show how to compose scenes in Blender using the meshes extracted with SuGaR.

As we show at the end of the video, this composition can be rendered using the Gaussian Splatting rasterizer

and the Gaussians bound to the surface of the mesh.

Example of scene editing and character animation

In the following video, we show how we are able to animate a character in Blender using the meshes extracted by SuGaR.

As we show at the end of the video, this animation can be rendered using the Gaussian Splatting rasterizer

and the Gaussians bound to the surface of the mesh.

In the following video, we show how we segment and rig a character using the meshes extracted with SuGaR.

Meshing and rendering scenes from Mip-NeRF360, DeepBlending and casual captures

Kitchen

Mesh rendered with bound Gaussians

Mesh without texture

Garden

Mesh rendered with bound Gaussians

Mesh without texture

Knight (casual capture with a smartphone)

Mesh with bound Gaussians

Mesh without texture

Robot (casual capture with a smartphone)

Mesh rendered with bound Gaussians

Mesh without texture

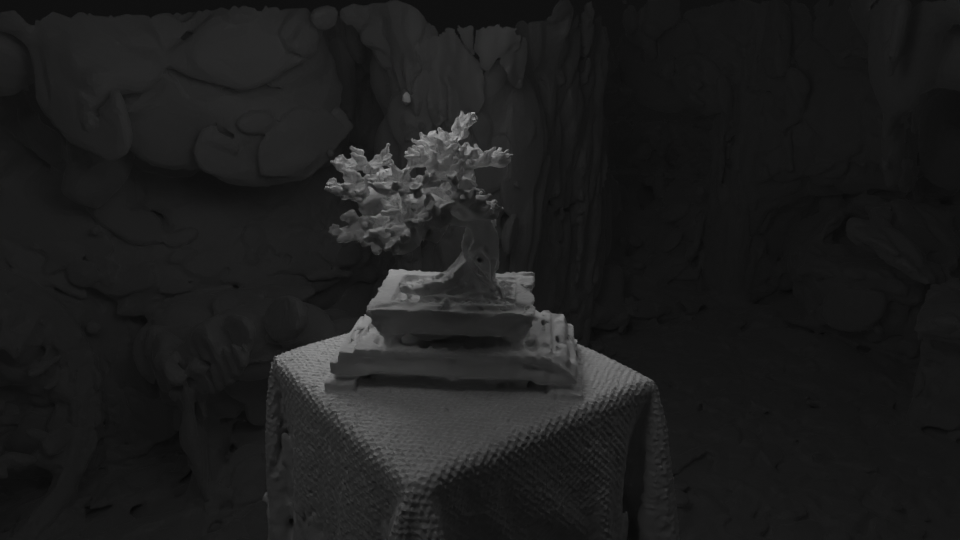

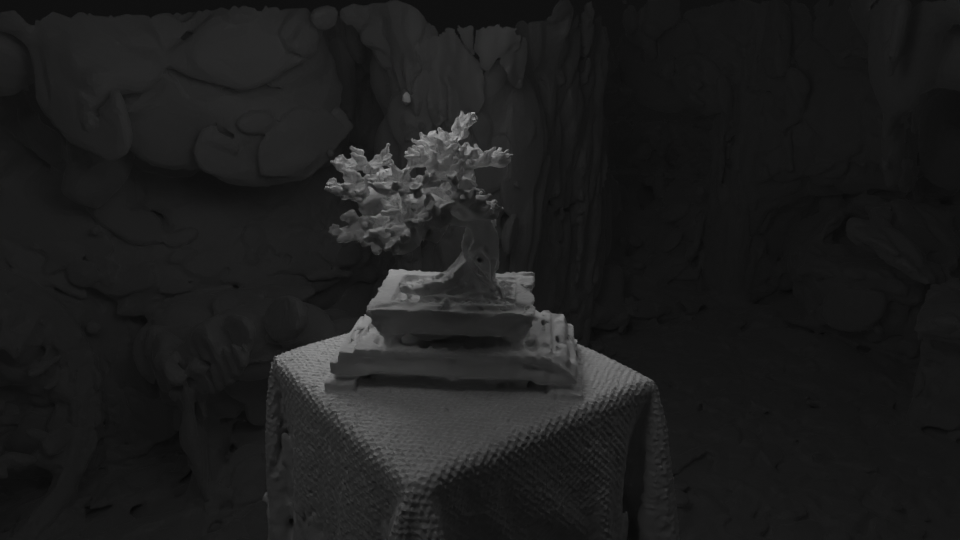

Bonsai

Mesh rendered with bound Gaussians

Mesh without texture

Playroom

Mesh rendered with bound Gaussians

Mesh without texture

Counter

Mesh rendered with bound Gaussians

Mesh without texture

Dr Johnson

Mesh rendered with bound Gaussians

Mesh without texture

Room

Mesh rendered with bound Gaussians

Mesh without texture

Resources

BibTeX

If you find this work useful for your research, please cite:

@article{guedon2023sugar,

title={SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction and High-Quality Mesh Rendering},

author={Gu{\'e}don, Antoine and Lepetit, Vincent},

journal={CVPR},

year={2024}

}

Further information

If you like this project, check out our previous work related to 3D reconstruction,

where we introduce models that learn simultaneously to explore and reconstruct 3D environments from RGB images:

Acknowledgements

This work was granted access to the HPC resources of IDRIS under the allocation 2023-AD011013387R1 made by GENCI.

We thank George Drettakis and Elliot Vincent for inspiring discussions and valuable feedback.
