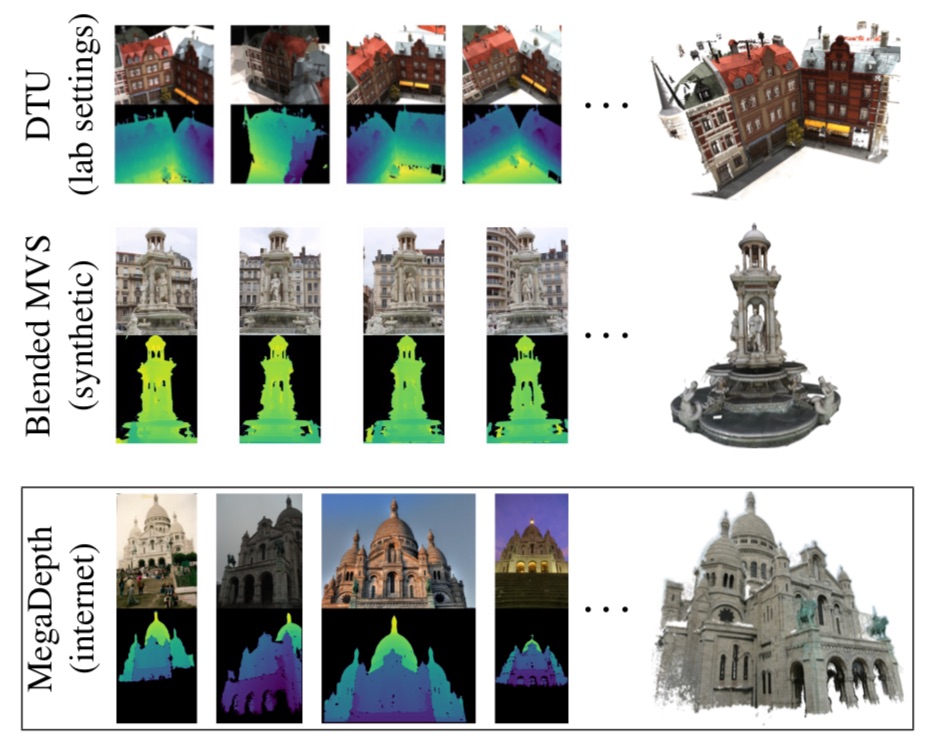

Deep multi-view stereo (MVS) methods have been developed and extensively compared on simple datasets, where they now outperform classical approaches. In this paper, we ask whether the conclusions reached in controlled scenarios are still valid when working with Internet photo collections. We propose a methodology for evaluation and explore the influence of three aspects of deep MVS methods: network architecture, training data, and supervision. We make several key observations, which we extensively validate quantitatively and qualitatively, both for depth prediction and complete 3D reconstructions. First, complex unsupervised approaches cannot train on data in the wild. Our new approach makes it possible with three key elements: upsampling the output, softmin based aggregation and a single reconstruction loss. Second, supervised deep depthmap-based MVS methods are state-of-the art for reconstruction of few internet images. Finally, our evaluation provides very different results than usual ones. This shows that evaluation in uncontrolled scenarios is important for new architectures.

@article{

author = {Darmon, Fran{\c{c}}ois and

Bascle, B{\'{e}}n{\'{e}}dicte and

Devaux, Jean{-}Cl{\'{e}}ment and

Monasse, Pascal and

Aubry, Mathieu},

title = {Deep Multi-View Stereo gone wild},

year = {2021},

journal = {International Conference on 3D Vision},

}

This work was supported in part by ANR project EnHerit ANR-17-CE23-0008 ANR-17-CE23-0008 and was granted access to the HPC resources of IDRIS under the allocation 2020-AD011011756 made by GENCI. We thank Tom Monnier, Michael Ramamonjisoa and Vincent Lepetit for valuable feedbacks.