Abstract

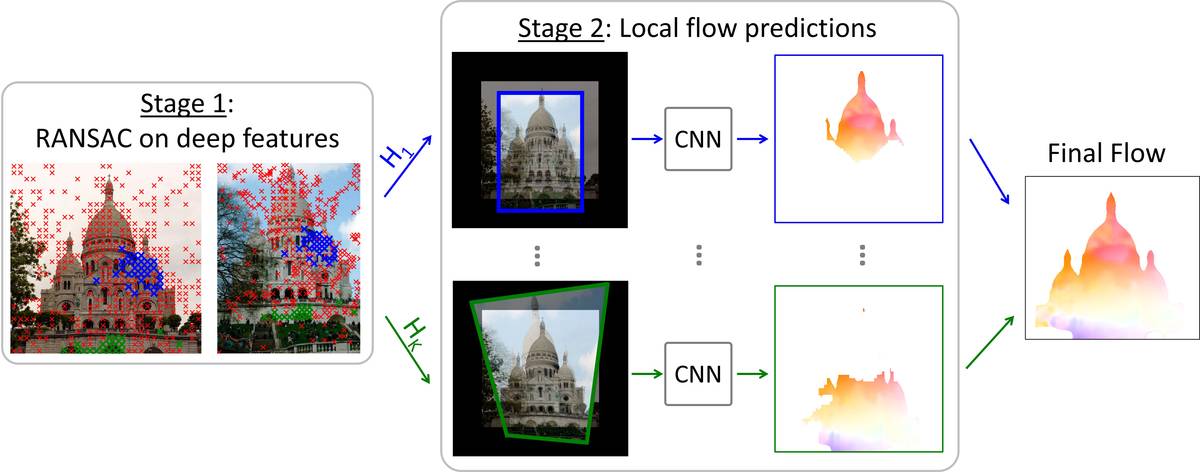

This paper considers the generic problem of dense alignment between two images, whether they be two frames of a video, two widely different views of a scene, two paintings depicting similar content, etc. Whereas each such task is typically addressed with a domain-specific solution, we show that a simple unsupervised approach performs surprisingly well across a range of tasks. Our main insight is that parametric and non-parametric alignment methods have complementary strengths. We propose a two-stage process: first, a feature-based parametric coarse alignment using one or more homographies, followed by non-parametric fine pixel-wise alignment. Coarse alignment is performed using RANSAC on off-the-shelf deep features. Fine alignment is performed in an unsupervised way by a deep network that optimizes a standard structural similarity metric (SSIM) between the two images, plus a cycle-consistency term. Despite its simplicity, our method shows competitive results on a range of tasks and datasets, including unsupervised optical flow on KITTI, dense correspondences on HPatches, two-view geometry estimation on YFCC100M, localization on Aachen Day-Night, and, for the first time, fine alignment of artworks on the Brueghel dataset.
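The coarse stage amounts to matching feature descriptors between the two images and robustly fitting homographies to the matches. The NumPy sketch below illustrates that pipeline under explicit assumptions: the descriptors are arbitrary per-location feature vectors (the deep network and the locations at which features are extracted are left out), matches are mutual nearest neighbours under cosine similarity, each homography is fitted with an unnormalized Direct Linear Transform inside a basic RANSAC loop, and "one or more homographies" is approximated by a hypothetical `sequential_homographies` helper that removes the inliers of each model and repeats. All function names and defaults (`thresh=3.0` pixels, `n_iters=1000`, `min_inliers=20`) are illustrative, not the authors' code or settings.

```python
import numpy as np


def mutual_nn_matches(feat_a, feat_b):
    """Mutual nearest neighbours under cosine similarity.

    feat_a: (N, D), feat_b: (M, D). Returns index arrays (ia, ib) such that
    feat_a[ia[k]] and feat_b[ib[k]] are each other's best match.
    """
    a = feat_a / np.linalg.norm(feat_a, axis=1, keepdims=True)
    b = feat_b / np.linalg.norm(feat_b, axis=1, keepdims=True)
    sim = a @ b.T
    ab = sim.argmax(axis=1)  # best B index for every A descriptor
    ba = sim.argmax(axis=0)  # best A index for every B descriptor
    ia = np.nonzero(ba[ab] == np.arange(len(a)))[0]
    return ia, ab[ia]


def fit_homography(src, dst):
    """Direct Linear Transform (no coordinate normalisation, for brevity).

    src, dst: (K, 2) arrays with K >= 4. Returns H with dst ~ H @ [x, y, 1].
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # h is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def project(H, pts):
    """Apply homography H to an (N, 2) array of points."""
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


def ransac_homography(src, dst, n_iters=1000, thresh=3.0, seed=0):
    """Return (H, inlier_mask) for the 4-point hypothesis with most inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):  # degenerate samples
        for _ in range(n_iters):
            idx = rng.choice(len(src), size=4, replace=False)
            H = fit_homography(src[idx], dst[idx])
            inliers = np.linalg.norm(project(H, src) - dst, axis=1) < thresh
            if inliers.sum() > best.sum():
                best = inliers
    if best.sum() < 4:
        raise ValueError("no homography with at least 4 inliers")
    # Refit on all inliers of the best hypothesis (least squares via DLT).
    return fit_homography(src[best], dst[best]), best


def sequential_homographies(src, dst, max_h=3, min_inliers=20, **ransac_kw):
    """Hypothetical multi-homography helper: run RANSAC, drop the matches it
    explains, and repeat on the rest. min_inliers must be >= 4."""
    remaining = np.arange(len(src))
    models = []
    while len(models) < max_h and len(remaining) >= min_inliers:
        H, inl = ransac_homography(src[remaining], dst[remaining], **ransac_kw)
        if inl.sum() < min_inliers:
            break
        models.append(H)
        remaining = remaining[~inl]
    return models
```

In use, `ia` and `ib` would index arrays of keypoint coordinates, e.g. `ransac_homography(kp_a[ia], kp_b[ib])` with hypothetical `kp_a`, `kp_b`. A more robust version would normalize coordinates (Hartley normalization) before the DLT and adapt the number of iterations to the observed inlier ratio.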

Videos

| Result video | Short video (90 s) | Long video (10 mins) |

Visual Results:


Aligning a group of Internet images from the Medici Fountain (all the inputs can be seen here):

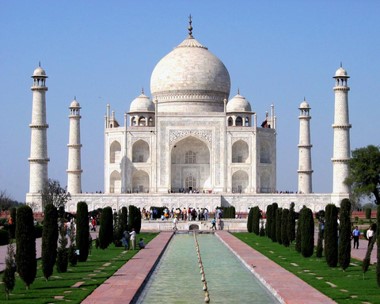

Aligning artworks (detail; more results can be found here):

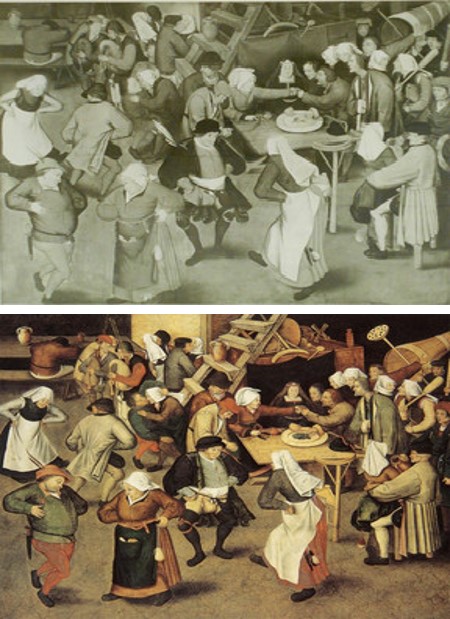

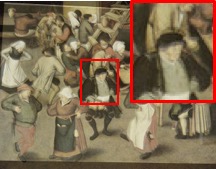

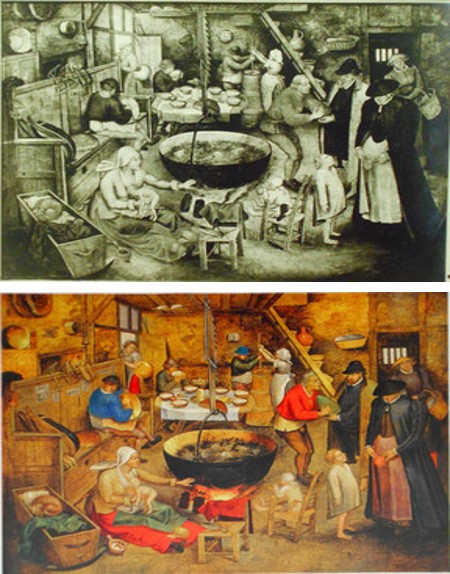

Aligning duplicated artworks: we show average images, zoomed details, and flows (top: coarse flow; bottom: fine flow):
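The average images and the coarse and fine flows shown here rely on warping one image onto the other. The NumPy sketch below illustrates that warping step, together with an illustrative version of the unsupervised fine-alignment objective named in the abstract: 1 − SSIM between the target and the warped source, plus a cycle-consistency penalty. It assumes grayscale images in [0, 1], flows stored as (H, W, 2) pixel displacements in (x, y) order, a 3×3 box window instead of the more common Gaussian SSIM window, and a hypothetical weight `lam`; the paper's network, exact loss terms and weighting are not reproduced here.

```python
import numpy as np


def warp_with_flow(src, flow):
    """Backward warp: out[y, x] = src[y + flow[y, x, 1], x + flow[y, x, 0]].

    src: (H, W) image; flow: (H, W, 2) displacements in pixels.
    Bilinear sampling; coordinates outside src are clamped to the border.
    """
    h, w = src.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * src[y0, x0] + ax * src[y0, x1]
    bottom = (1 - ax) * src[y1, x0] + ax * src[y1, x1]
    return (1 - ay) * top + ay * bottom


def box_mean(img, r=1):
    """Mean over a (2r+1) x (2r+1) window, with edge padding."""
    h, w = img.shape
    p = np.pad(img, r, mode="edge")
    acc = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += p[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2


def ssim_map(a, b, c1=0.01 ** 2, c2=0.03 ** 2):
    """Per-pixel SSIM for images in [0, 1], using a 3x3 box window."""
    mu_a, mu_b = box_mean(a), box_mean(b)
    var_a = box_mean(a * a) - mu_a ** 2
    var_b = box_mean(b * b) - mu_b ** 2
    cov = box_mean(a * b) - mu_a * mu_b
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den


def cycle_error(flow_ab, flow_ba):
    """Per-pixel |F_ab(p) + F_ba(p + F_ab(p))|; zero for cycle-consistent flows."""
    back = np.stack([warp_with_flow(flow_ba[..., k], flow_ab) for k in range(2)], axis=-1)
    return np.linalg.norm(flow_ab + back, axis=-1)


def fine_loss(img_a, img_b, flow_ab, flow_ba, lam=1.0):
    """Illustrative objective: mean (1 - SSIM) of B warped onto A, plus lam * cycle error."""
    warped_b = warp_with_flow(img_b, flow_ab)
    return np.mean(1 - ssim_map(img_a, warped_b)) + lam * np.mean(cycle_error(flow_ab, flow_ba))
```

An average image in the spirit of the ones shown here could then be formed as `0.5 * (img_a + warp_with_flow(img_b, flow_ab))`. During training, the flows would be predicted by a network and the loss minimized with automatic differentiation rather than evaluated in NumPy.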

3D reconstruction and two-view geometry estimation (more results can be found here):

Texture Transfer

Figure columns: Average of Inputs | Average after Coarse Alignment | Average after Fine Alignment | Animation

Figure columns: Input (Animation, Average) | After Fine Alignment (Animation, Average)

Figure columns: Input | Without Alignment | After Coarse Alignment | After Fine Alignment | Flows

Figure columns: Source | Target | 3D Reconstruction

Figure columns: Source | Target | Texture Transfer

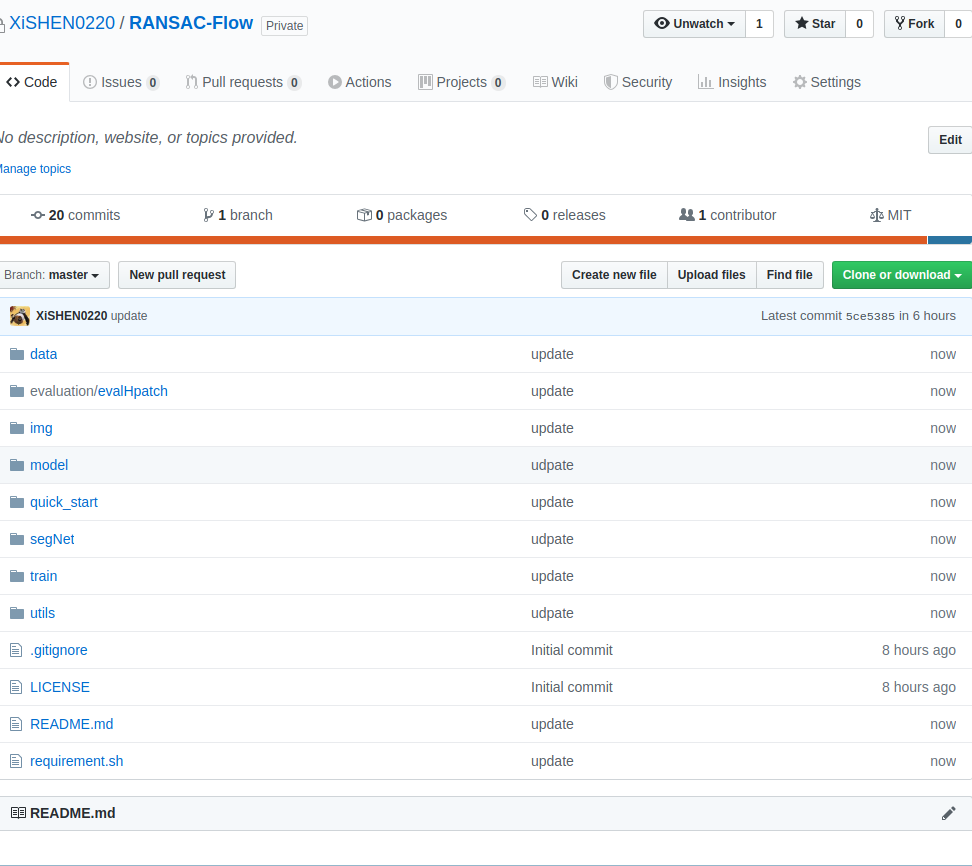

Code and Paper

To cite our paper:

@inproceedings{shen2019ransacflow,
  title={RANSAC-Flow: generic two-stage image alignment},
  author={Shen, Xi and Darmon, Fran{\c{c}}ois and Efros, Alexei A and Aubry, Mathieu},
  booktitle={16th European Conference on Computer Vision},
  year={2020}
}

Acknowledgment

This work was supported in part by ANR project EnHerit ANR-17-CE23-0008, project Rapid Tabasco, NSF IIS-1633310, a grant from SAP, the France-Berkeley Fund, and gifts from Adobe. We thank Shiry Ginosar, Thibault Groueix and Michal Irani for helpful discussions, and Elizabeth Alice Honig for her help in building the Brueghel dataset.