Abstract

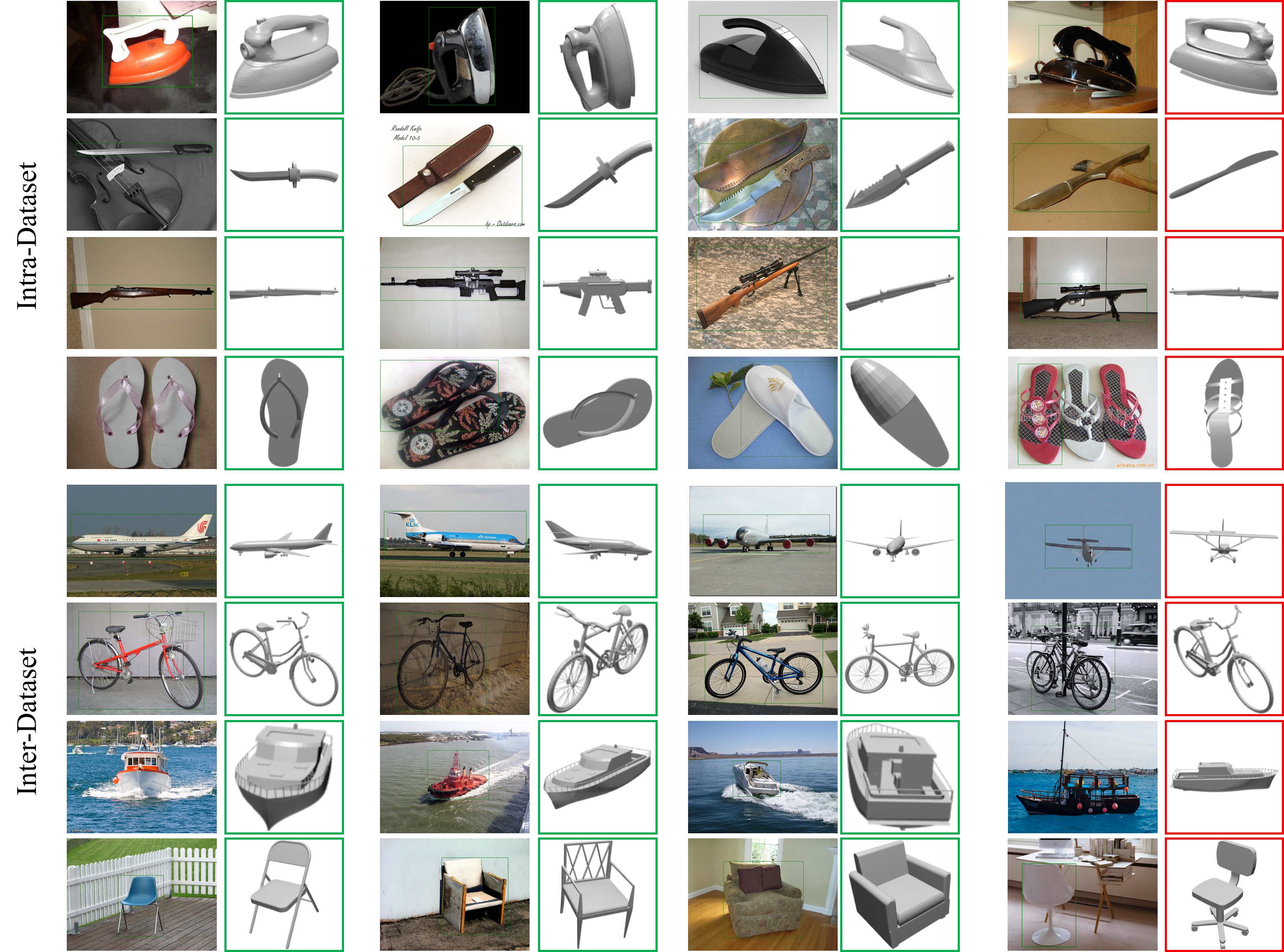

Detecting objects and estimating their viewpoint in images are key tasks of 3D scene understanding. Recent approaches have achieved excellent results on very large benchmarks for object detection and viewpoint estimation. However, performance still lags behind for novel object categories with few samples. In this paper, we tackle the problems of few-shot object detection and few-shot viewpoint estimation. We propose a meta-learning framework that can be applied to both tasks, possibly including 3D data. Our models improve the results on objects of novel classes by leveraging rich feature information originating from base classes with many samples. A simple joint feature embedding module is proposed to make the most of this feature sharing. Despite its simplicity, our method outperforms state-of-the-art methods by a large margin on a range of datasets, including PASCAL VOC and MS COCO for few-shot object detection, and Pascal3D+ and ObjectNet3D for few-shot viewpoint estimation. For the first time, we also tackle the combination of both few-shot tasks, on ObjectNet3D, showing promising results.

Videos

| Short video (90 s) | Long video (9 min) |

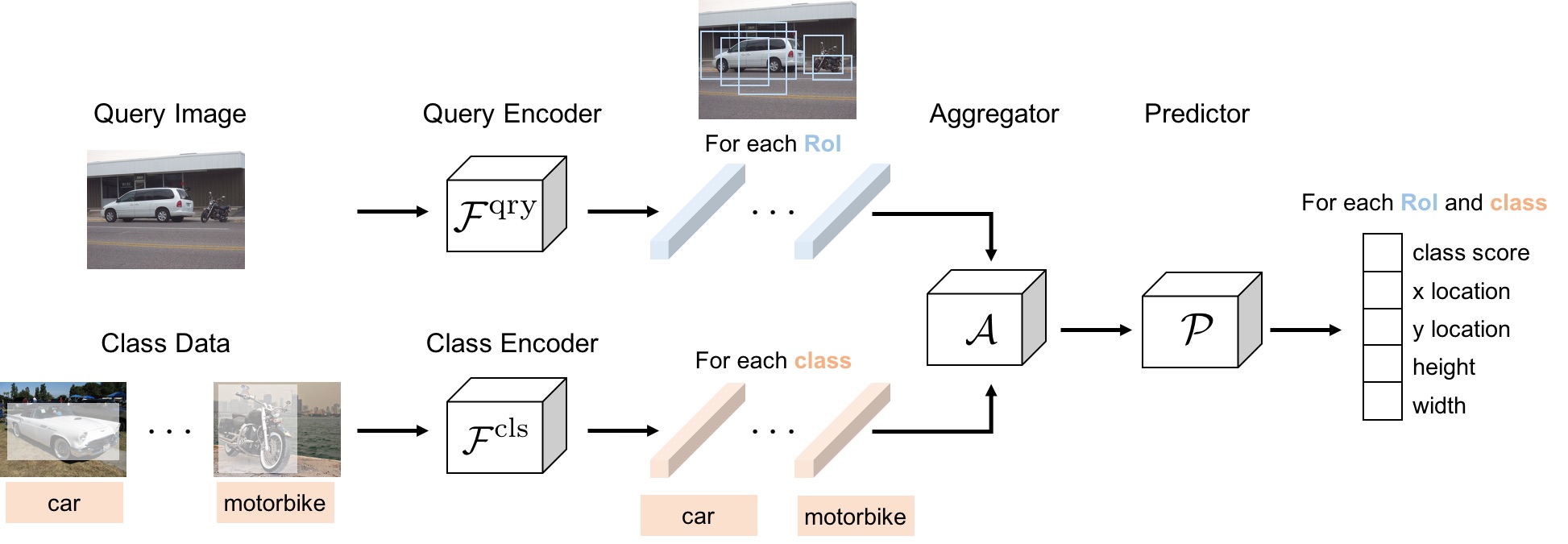

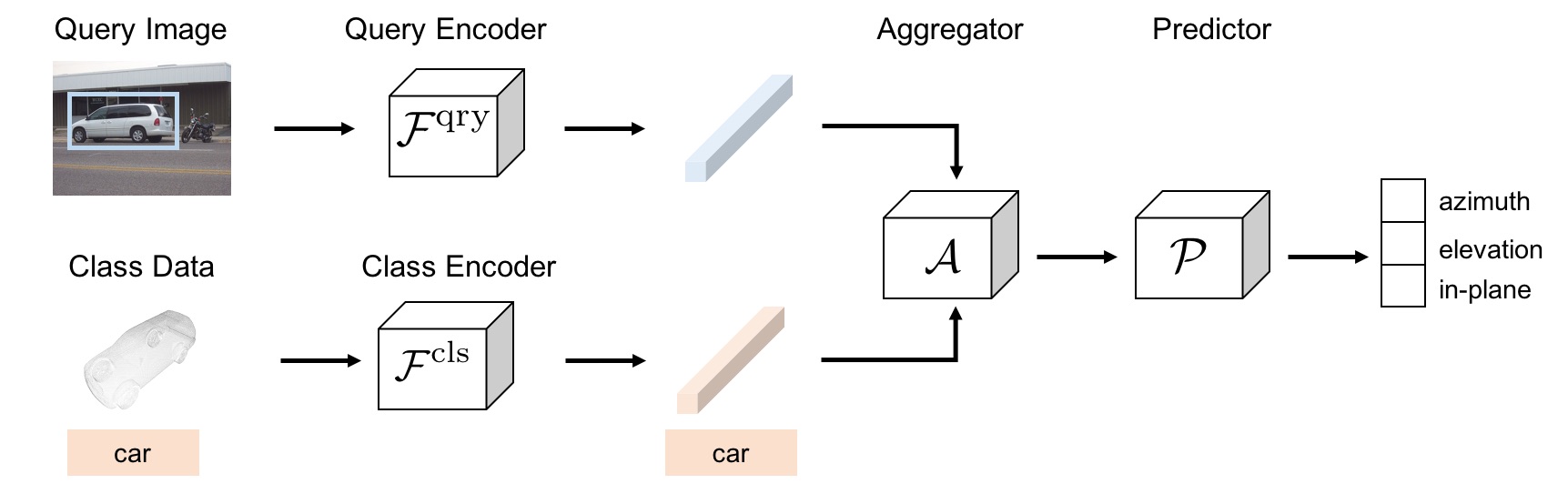

Method

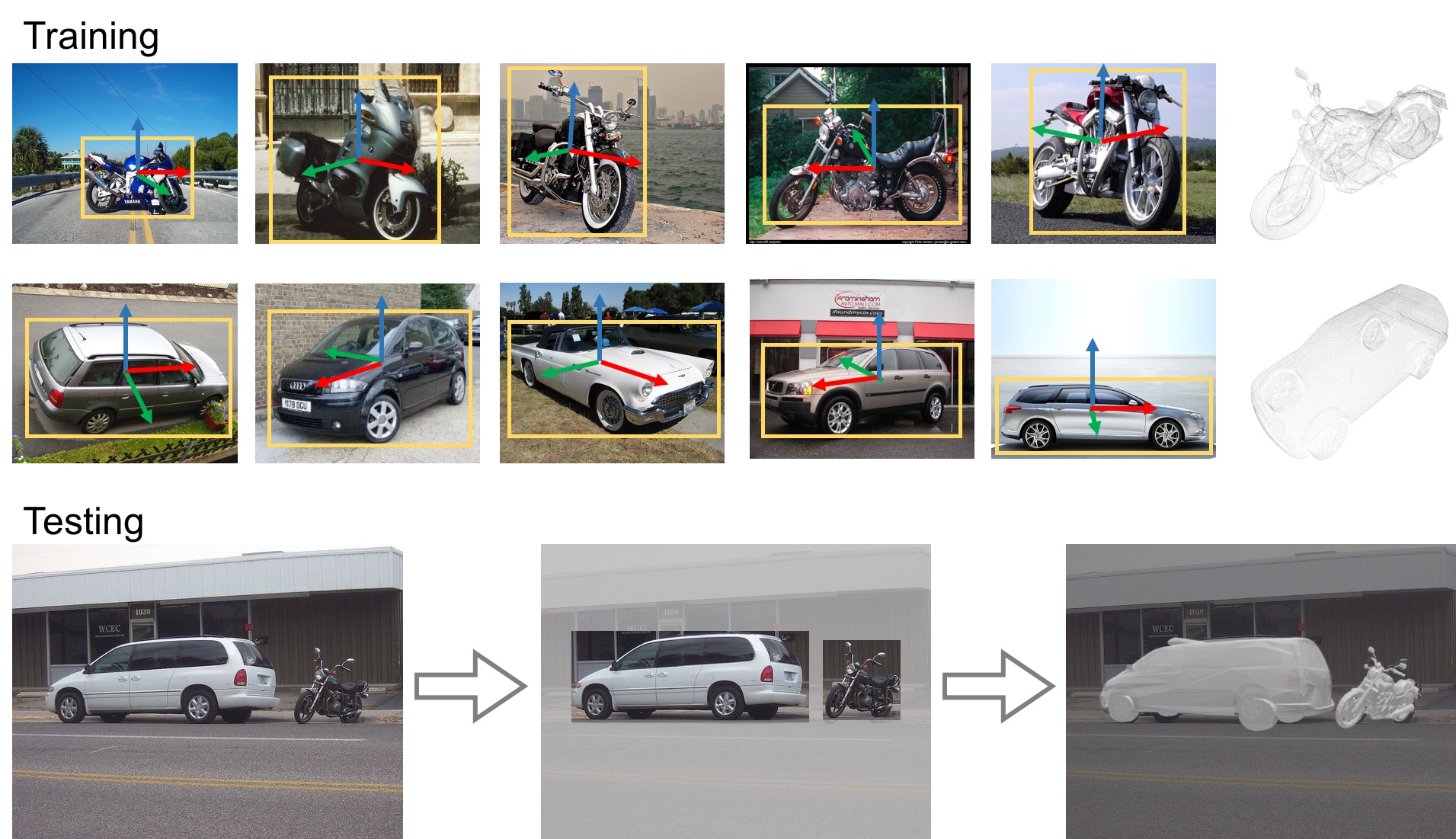

Task-Relevant Class Data

Few-Shot Object Detection

Few-Shot Viewpoint Estimation
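The abstract mentions a simple joint feature embedding module that shares information between a query feature and a class feature, but this page does not spell out its form. As a minimal sketch, assuming the module concatenates the element-wise product, the element-wise difference, and the query feature itself, the combination could look as follows. The function name `joint_embedding`, the plain-list feature representation, and the exact combination are illustrative assumptions, not the authors' confirmed implementation.

```python
from typing import List


def joint_embedding(query: List[float], cls: List[float]) -> List[float]:
    """Combine a query feature with a class feature (hypothetical sketch).

    Assumed form: the concatenation [query * cls, query - cls, query],
    where the product and the difference are taken element-wise.
    """
    if len(query) != len(cls):
        raise ValueError("query and class features must have the same length")
    product = [q * c for q, c in zip(query, cls)]
    difference = [q - c for q, c in zip(query, cls)]
    # The output is three times as long as each input feature.
    return product + difference + list(query)


# Toy 3-dimensional features; real features would come from a network.
query_feature = [1.0, 2.0, 3.0]  # e.g. feature of one region in the query image
class_feature = [0.5, 0.0, 2.0]  # e.g. feature pooled over the few class samples
joint = joint_embedding(query_feature, class_feature)
print(len(joint))
```

Under this assumption, the same combined vector could feed either a detection head or a viewpoint head, which is one way a single module might serve both few-shot tasks.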

Results

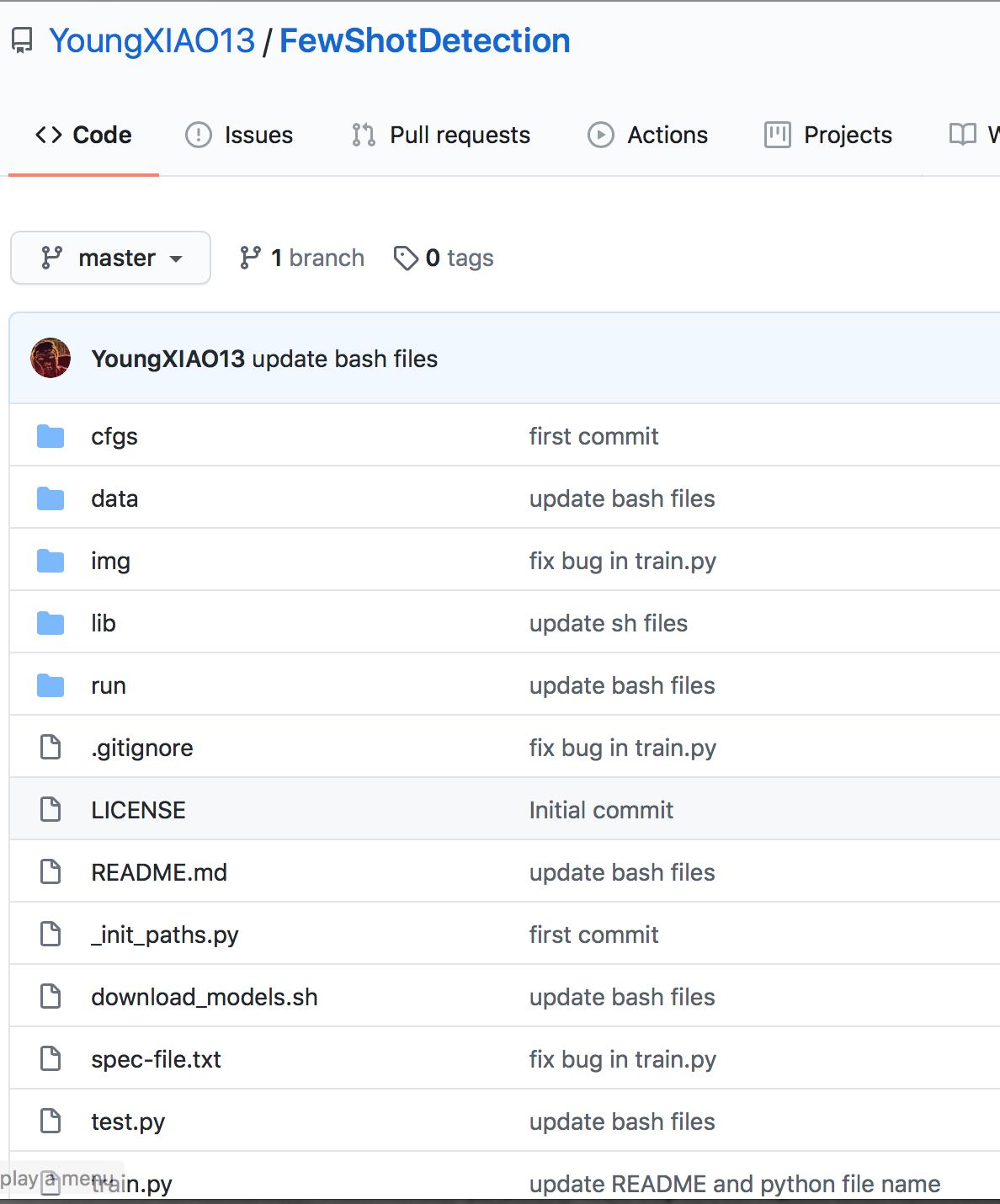

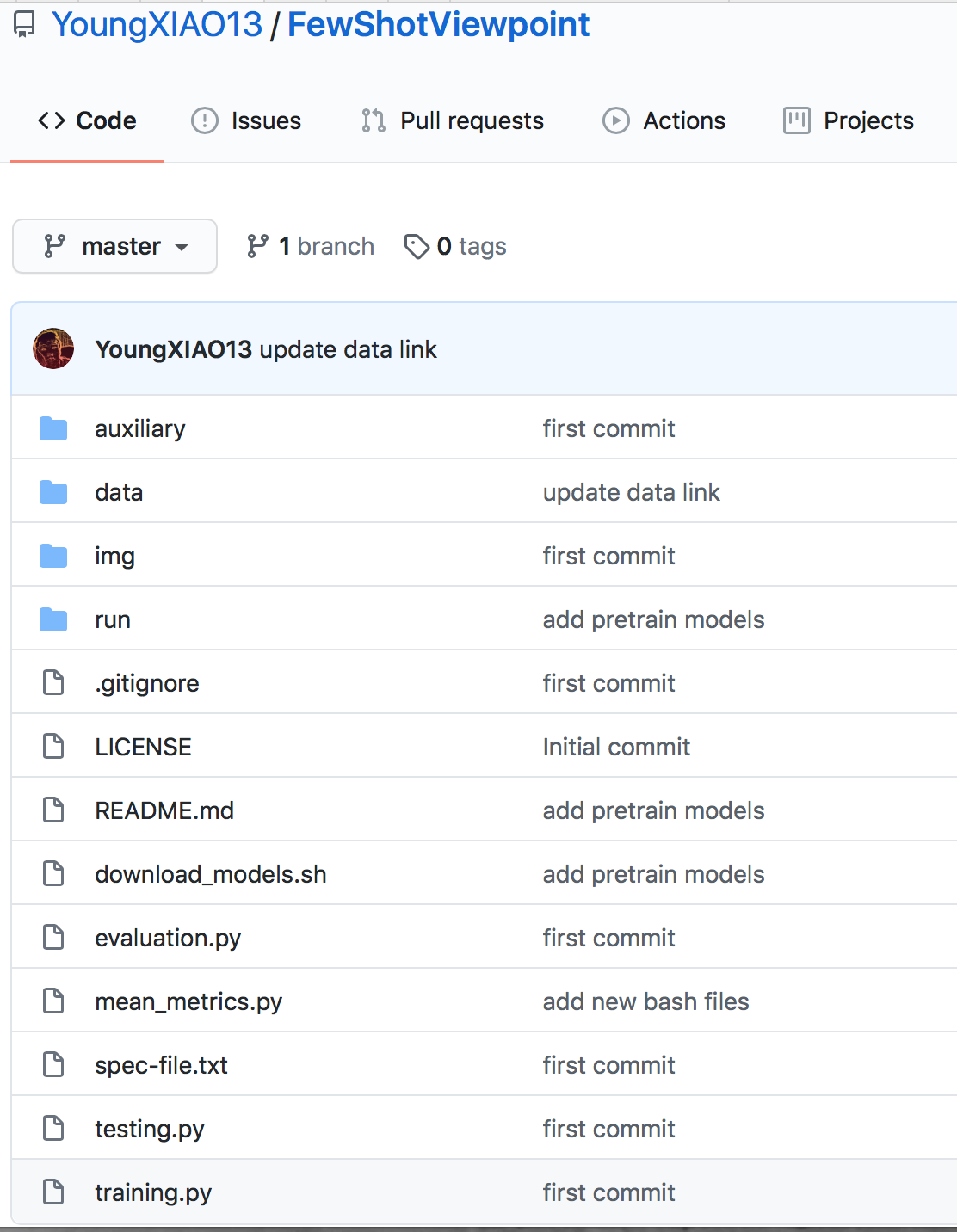

Paper, Code and Citation

To cite our paper:

@INPROCEEDINGS{Xiao2020FSDetView,
    author    = {Yang Xiao and Renaud Marlet},
    title     = {Few-Shot Object Detection and Viewpoint Estimation for Objects in the Wild},
    booktitle = {European Conference on Computer Vision (ECCV)},
    year      = {2020}
}

Acknowledgment

We thank Vincent Lepetit and Yuming Du for helpful discussions.